Before starting the analysis, we need to load the essential R libraries required for data manipulation, visualization, and performing differential gene expression (DEG) analysis.

# Load necessary libraries for RNA-seq analysis and visualization

library(GEOquery) # Load the GEOquery package to access GEO datasets

library(DESeq2) # For differential expression analysis

library(data.table) # Efficient data manipulation

library(umap) # Dimensionality reduction for visualization

library(ggplot2) # General-purpose plotting

library(clusterProfiler) # For functional enrichment analysis

library(org.Hs.eg.db) # Human genome annotations (the Human Genome Annotation package)

library(VennDiagram) # Venn diagram visualization

library(knitr) # Report generation and table formatting (for kable)

library(tinytex) # LaTeX support for report generation

Code Block 2.1. Retrieving Data from GEO

# ===================================================================

# Step 1. Download Supplementary Files for the Count Matrix

# ===================================================================

# Define the GEO accession ID

gse_id <- "GSE157194"

# Download the supplementary files for this GEO dataset

getGEOSuppFiles(gse_id)

# Check the list of files downloaded

list.files(gse_id)

# ================================

# Path to the downloaded file

supp_file_path <- paste0(gse_id, "/", "GSE157194_Raw_gene_counts_matrix.txt.gz")

# Unzip and load the file into R as a data frame (counts matrix)

counts_matrix <- read.table(gzfile(supp_file_path), header = TRUE, row.names = 1)

# ===================================================================

# Step 2. Download Phenotype Data

# ===================================================================

# Specify the GEO accession number

gse_id <- "GSE157194"

# Download the phenotype data (GEO series matrix)

gse_data <- getGEO(gse_id, GSEMatrix = TRUE, AnnotGPL = TRUE)

# If multiple series are available, choose the first one

gse_data <- gse_data[[1]]

# Extract metadata from the GEO dataset

# We access the metadata (also known as phenotype data) stored in the 'phenoData' slot of the GEO object.

metadata <- gse_data@phenoData@data

# ===================================================================

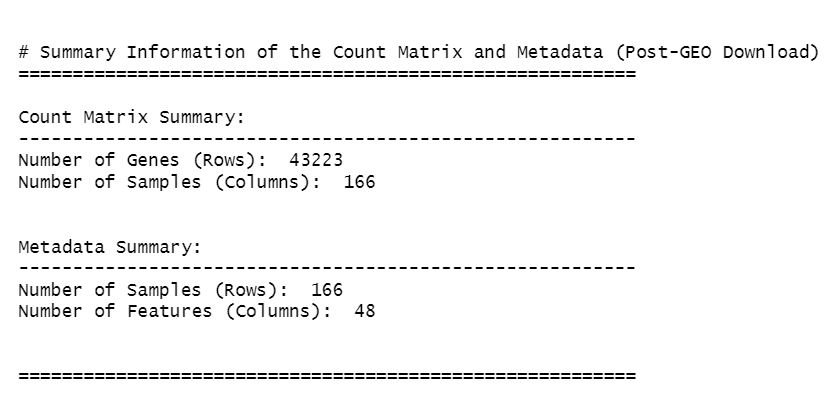

# Step 3. Print Summary Information about the Count Matrix and Metadata

# ===================================================================

cat("\n\n# Summary Information of the Count Matrix and Metadata (Post-GEO Download)\n")

cat("=========================================================\n")

# Count matrix summary

cat("\nCount Matrix Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Genes (Rows): ", nrow(counts_matrix), "\n")

cat("Number of Samples (Columns): ", ncol(counts_matrix), "\n\n")

# Metadata summary

cat("\nMetadata Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Samples (Rows): ", nrow(metadata), "\n")

cat("Number of Features (Columns): ", ncol(metadata), "\n\n")

cat("\n=========================================================\n")

Code Block 2.2.1: Identifying and Removing Outlier Samples, and Visualizing the Impact

# ========================================================

# Load necessary libraries

# ========================================================

library(ggplot2)

library(gridExtra) # For arranging multiple plots in one figure

# ========================================================

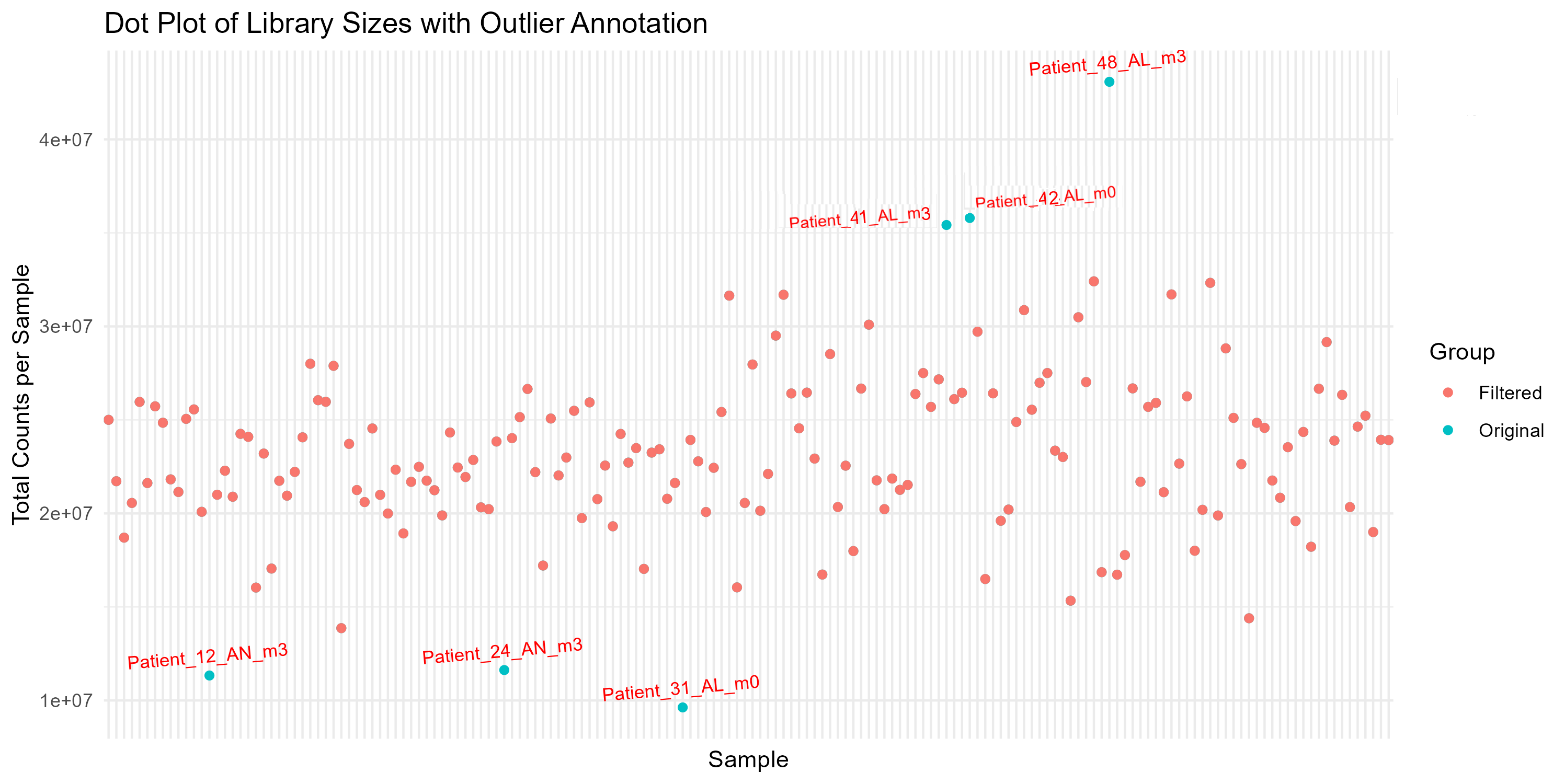

# Step 1: Calculate Library Size and Identify Outliers

# ========================================================

# Calculate library sizes

library_sizes <- colSums(counts_matrix)

# Optionally, print summary to check for anomalies

summary(library_sizes)

# Identify potential outlier samples based on library size

# Step 1.1: Set thresholds for outlier identification

# Set the lower threshold to identify samples with low counts

lower_threshold <- quantile(library_sizes, 0.25) - 1.5 * IQR(library_sizes)

# Set the upper threshold to identify samples with very high counts

upper_threshold <- quantile(library_sizes, 0.75) + 1.5 * IQR(library_sizes)

# Step 1.2: Find outlier samples

# Uncomment the corresponding line depending on whether you want to use:

# - Only the lower threshold

# - Only the upper threshold

# - Both lower and upper thresholds

# Use only the lower threshold:

# outlier_samples <- colnames(counts_matrix)[library_sizes < lower_threshold]

# Use both lower and upper thresholds:

outlier_samples <- colnames(counts_matrix)[library_sizes < lower_threshold | library_sizes > upper_threshold]

# ========================================================

# Step 2: Store Original Dimensions Before Filtering (First part for Plotting Dimensions of Count Matrix and Metadata)

# ========================================================

# Store original dimensions before filtering

counts_matrix_before <- dim(counts_matrix) # [number of genes, number of samples]

metadata_before <- dim(metadata) # [number of samples, number of features]

# ========================================================

# Step 3: Remove Outlier Samples from Data

# ========================================================

# Step 3.1: Remove outlier samples from counts matrix

counts_matrix_filtered <- counts_matrix[, !(colnames(counts_matrix) %in% outlier_samples)]

# Step 3.2: Remove outlier samples from metadata

metadata_filtered <- metadata[colnames(counts_matrix_filtered), ]

# Step 3.3: Store dimensions after filtering (Second part for Plotting Dimensions of Count Matrix and Metadata)

counts_matrix_after <- dim(counts_matrix_filtered)

metadata_after <- dim(metadata_filtered)

# Step 3.4: Calculate filtered library sizes

filtered_library_sizes <- colSums(counts_matrix_filtered)

# ========================================================

# Step 4: Plot the Filtered Library Sizes (Box, Violin, Dot, Bar, Density plots)

# ========================================================

# Step 4.1: Prepare Data for Plotting:

# Combine original and filtered library sizes into a data frame for comparison

comparison_df <- data.frame(

Sample = c(names(library_sizes), names(filtered_library_sizes)),

Library_Size = c(library_sizes, filtered_library_sizes),

Group = c(rep("Original", length(library_sizes)), rep("Filtered", length(filtered_library_sizes)))

)

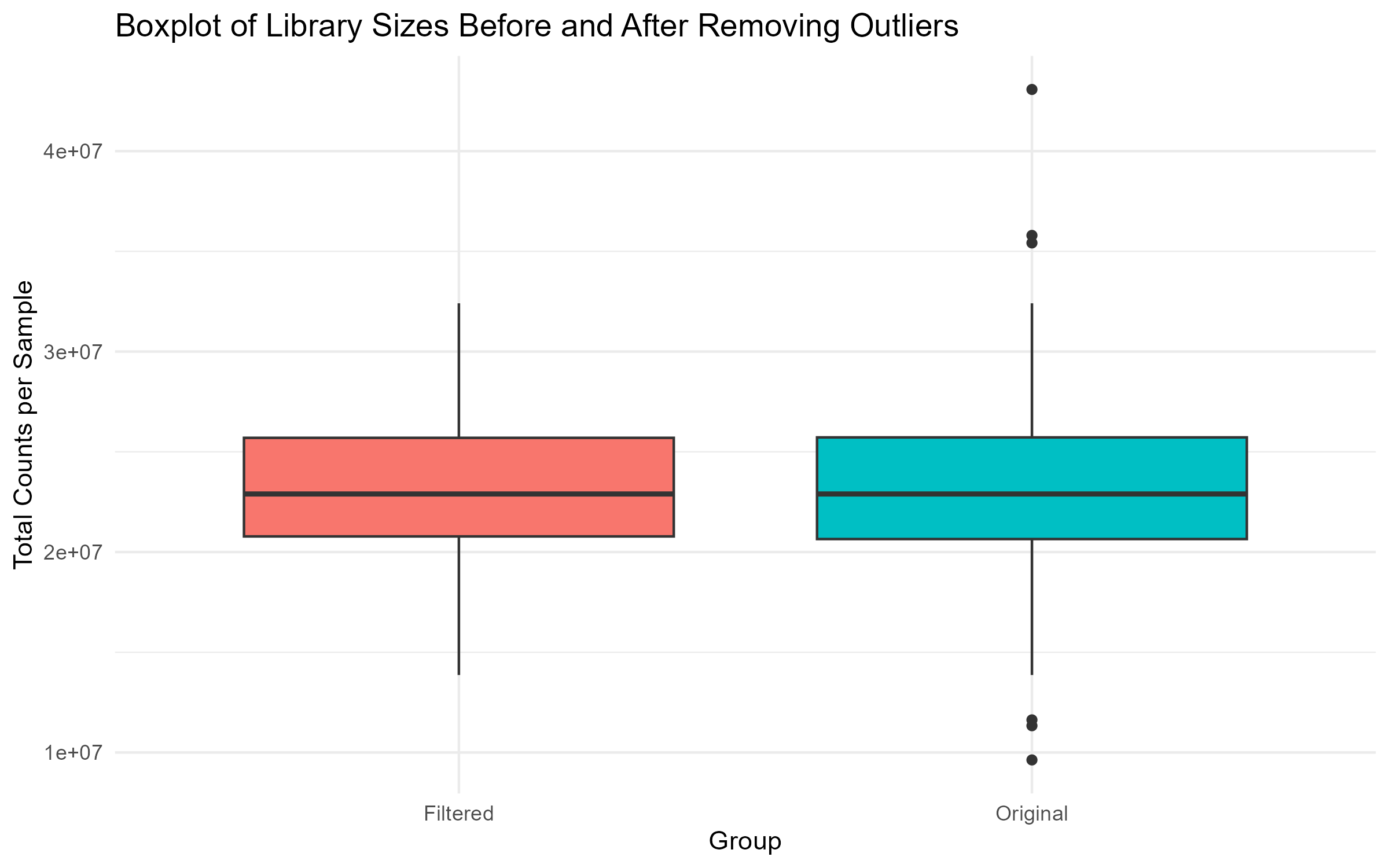

# Step 4.2: Boxplot of Library Sizes Before and After Filtering

box_plot <- ggplot(comparison_df, aes(x = Group, y = Library_Size, fill = Group)) +

geom_boxplot() +

theme_minimal() +

labs(title = "Boxplot of Library Sizes Before and After Removing Outliers",

x = "Group", y = "Total Counts per Sample") +

theme(legend.position = "none")

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.3.Box_Plot_Library_Sizes.png", plot = box_plot, width = 8, height = 5, bg = "white")

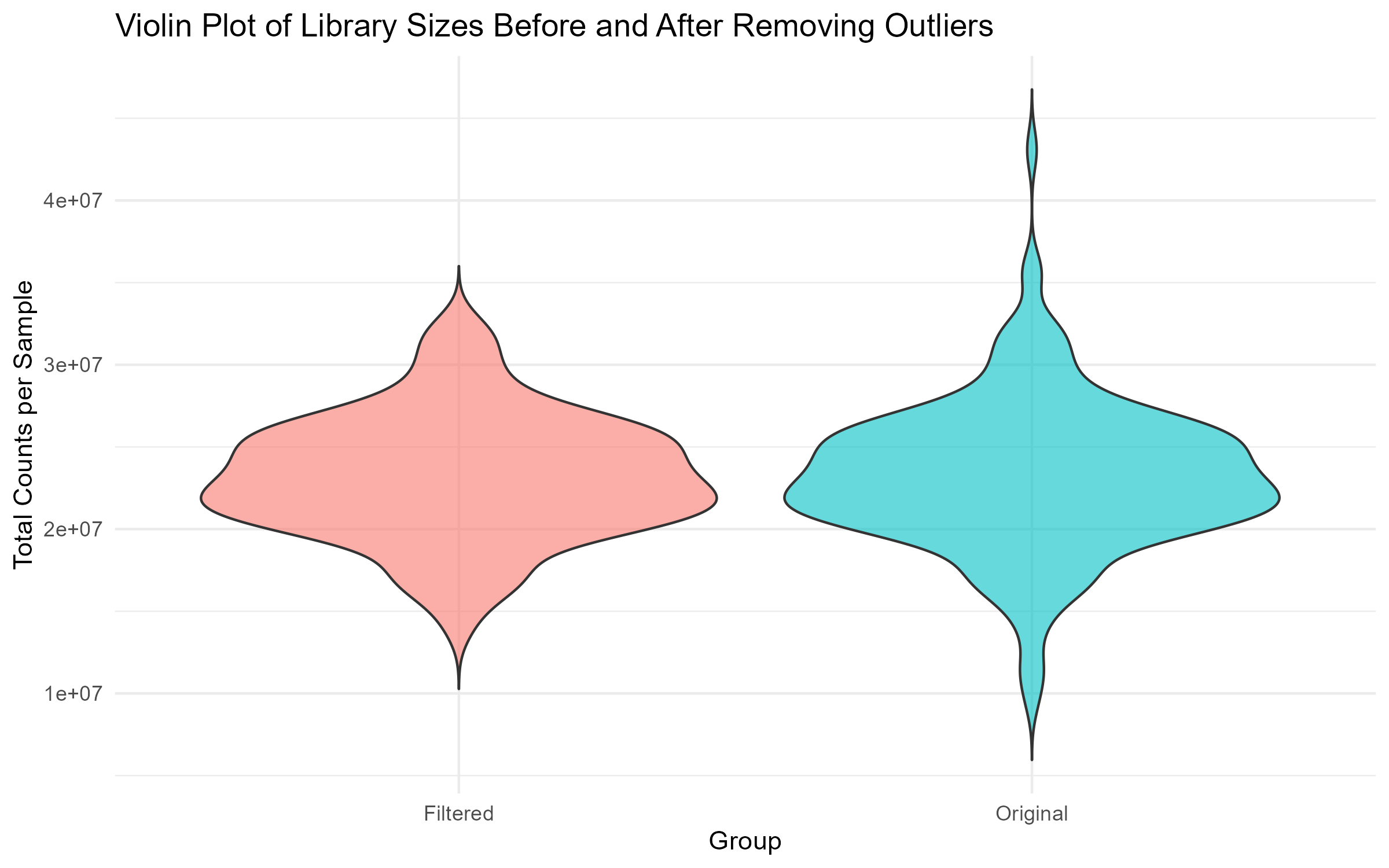

# Step 4.3: Violin Plot of Library Sizes Before and After Filtering

violin_plot <- ggplot(comparison_df, aes(x = Group, y = Library_Size, fill = Group)) +

geom_violin(trim = FALSE, alpha = 0.6) +

theme_minimal() +

labs(title = "Violin Plot of Library Sizes Before and After Removing Outliers",

x = "Group", y = "Total Counts per Sample") +

theme(legend.position = "none")

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.5.Violin_Plot_Library_Sizes.png", plot = violin_plot, width = 8, height = 5, bg = "white")

# Step 4.4: Dot Plot with Annotation of Outliers

dot_plot <- ggplot(comparison_df, aes(x = Sample, y = Library_Size, color = Group)) +

geom_point() +

theme_minimal() +

labs(title = "Dot Plot of Library Sizes with Outlier Annotation",

x = "Sample", y = "Total Counts per Sample") +

theme(axis.text.x = element_blank(), axis.ticks.x = element_blank()) +

geom_text(data = subset(comparison_df, Group == "Original" & Sample %in% outlier_samples),

aes(label = Sample), vjust = -1, size = 3, color = "red", angle = 5)

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.1.Dot_Plot_Library_Sizes.png", plot = dot_plot, width = 10, height = 5, bg = "white")

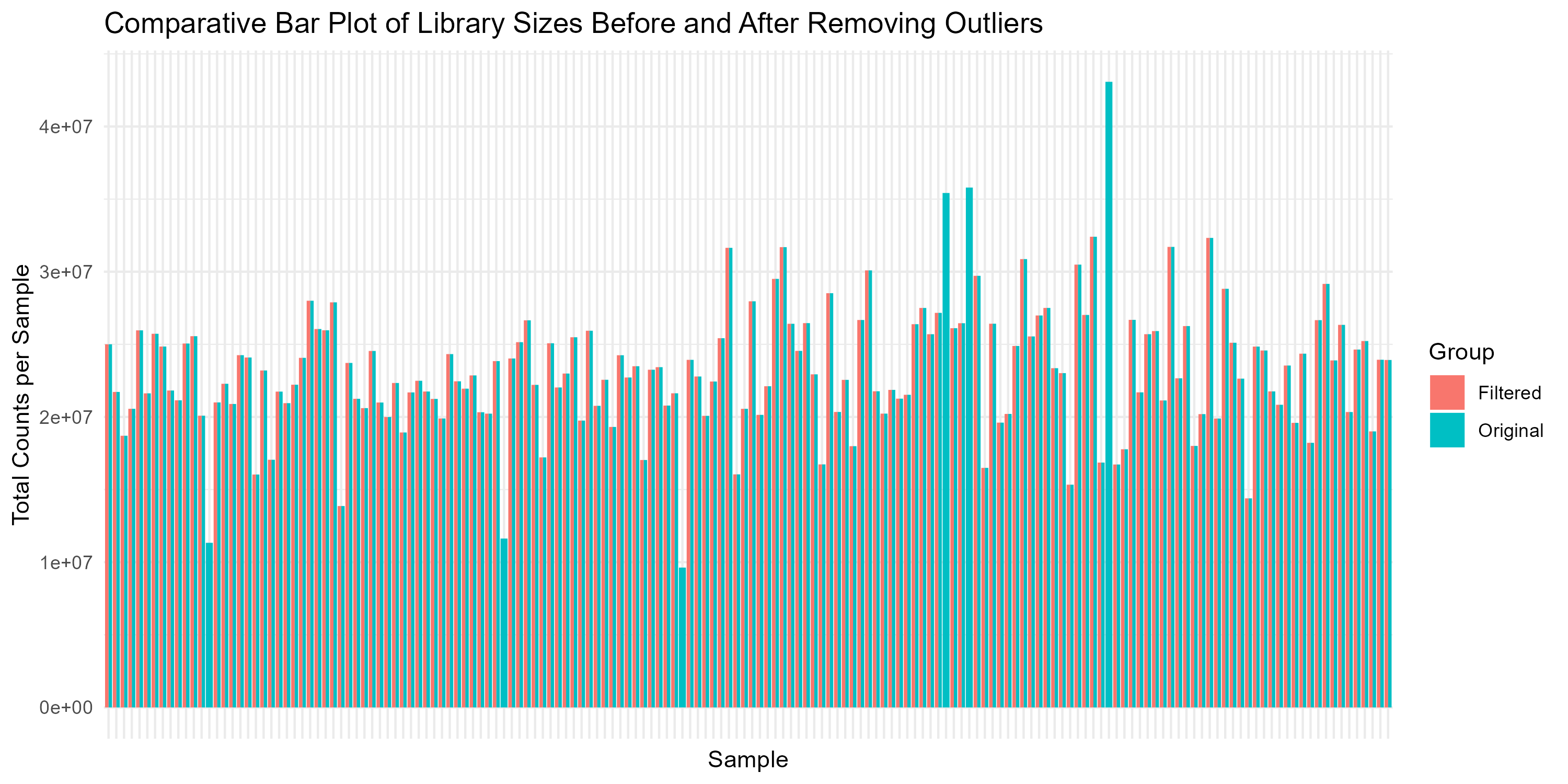

# Step 4.5: Comparative Bar Plot Before and After Filtering

bar_plot <- ggplot(comparison_df, aes(x = Sample, y = Library_Size, fill = Group)) +

geom_bar(stat = "identity", position = "dodge") +

theme_minimal() +

labs(title = "Comparative Bar Plot of Library Sizes Before and After Removing Outliers",

x = "Sample", y = "Total Counts per Sample") +

theme(axis.text.x = element_blank(), axis.ticks.x = element_blank())

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.2.Bar_Plot_Library_Sizes.png", plot = bar_plot, width = 10, height = 5, bg = "white")

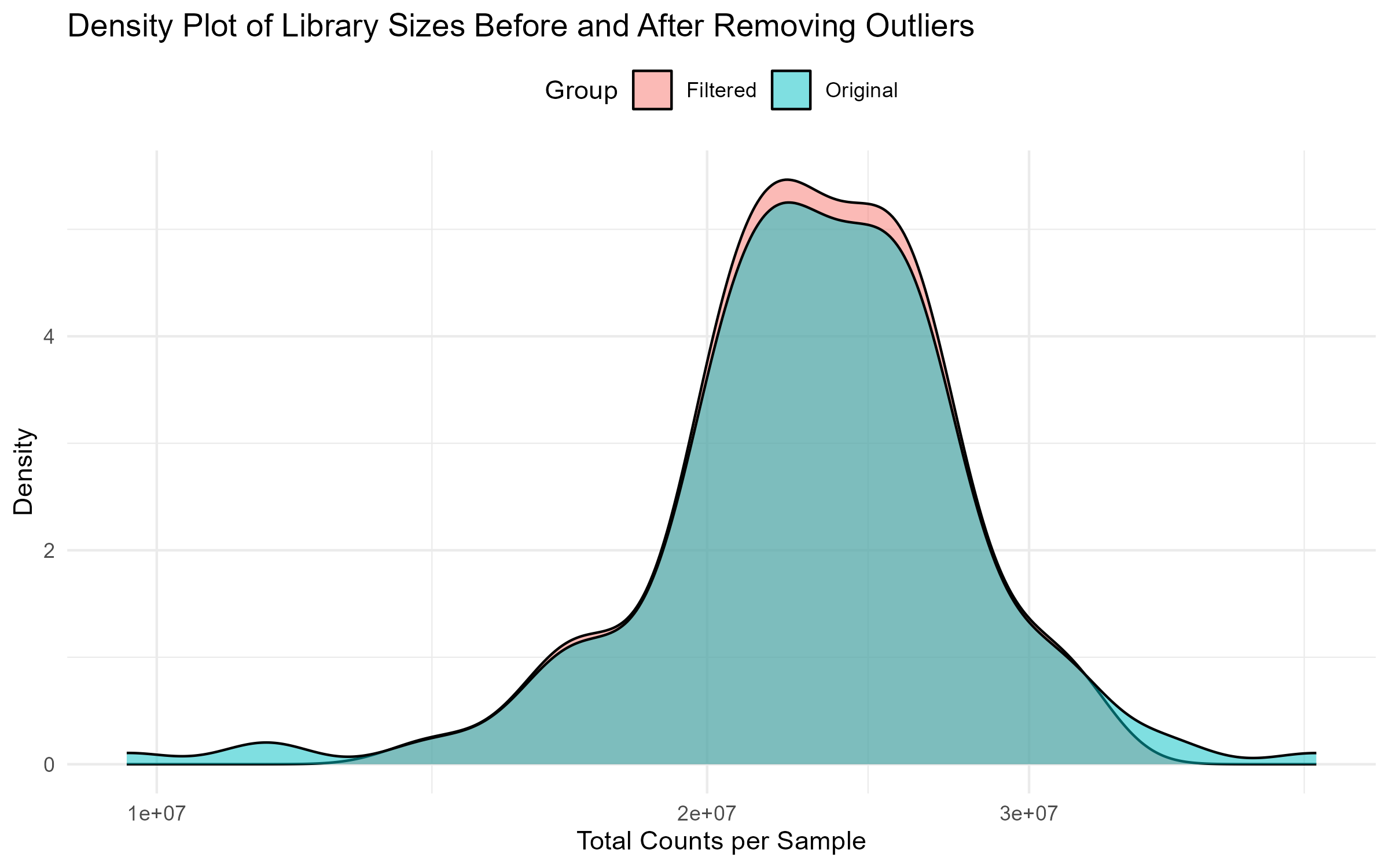

# Step 4.6: Side-by-Side Density Plot Before and After Filtering

density_plot <- ggplot(comparison_df, aes(x = Library_Size, fill = Group)) +

geom_density(alpha = 0.5) +

theme_minimal() +

labs(title = "Density Plot of Library Sizes Before and After Removing Outliers",

x = "Total Counts per Sample", y = "Density") +

scale_x_log10() +

theme(legend.position = "top")

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.4.Density_Plot_Library_Sizes.png", plot = density_plot, width = 8, height = 5, bg = "white")

# ========================================================

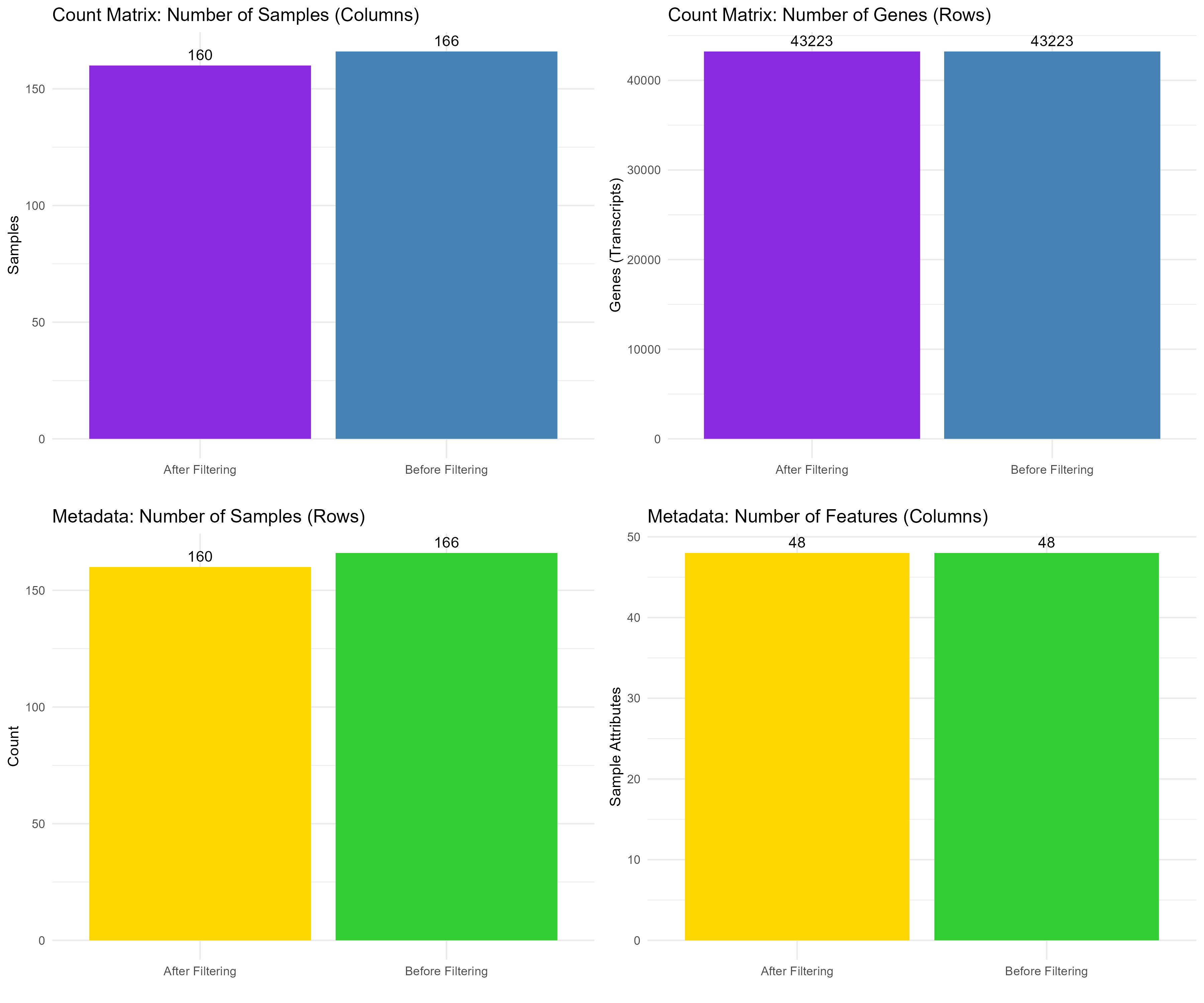

# Step 5: Plot Dimensions of Count Matrix and Metadata (Third part for Plotting Dimensions of Count Matrix and Metadata)

# ========================================================

# Step 5.1: Create Data Frames for Count Matrix Dimensions

counts_matrix_dims <- data.frame(

Measure = rep(c("Samples", "Genes"), each = 2),

Stage = rep(c("Before Filtering", "After Filtering"), 2),

Count = c(counts_matrix_before[2], counts_matrix_after[2],

counts_matrix_before[1], counts_matrix_after[1])

)

# Step 5.2: Create Data Frames for Count Matrix and Metadata Dimensions

metadata_dims <- data.frame(

Measure = rep(c("Samples", "Features"), each = 2),

Stage = rep(c("Before Filtering", "After Filtering"), 2),

Count = c(metadata_before[1], metadata_after[1],

metadata_before[2], metadata_after[2])

)

# Step 5.3: Generate and Combine Plots for Dimensions

# Plot for count matrix samples before and after filtering

count_samples_plot <- ggplot(subset(counts_matrix_dims, Measure == "Samples"), aes(x = Stage, y = Count, fill = Stage)) +

geom_bar(stat = "identity") +

geom_text(aes(label = Count), vjust = -0.5) + # Add count labels on top of bars

theme_minimal() +

labs(title = "Count Matrix: Number of Samples (Columns)", y = "Samples", x = "") +

theme(legend.position = "none") +

scale_fill_manual(values = c("Before Filtering" = "#4682B4", "After Filtering" = "#8A2BE2")) # Change colors

# Plot for count matrix genes (transcripts) before and after filtering

count_genes_plot <- ggplot(subset(counts_matrix_dims, Measure == "Genes"), aes(x = Stage, y = Count, fill = Stage)) +

geom_bar(stat = "identity") +

geom_text(aes(label = Count), vjust = -0.5) + # Add count labels on top of bars

theme_minimal() +

labs(title = "Count Matrix: Number of Genes (Rows)", y = "Genes (Transcripts)", x = "") +

theme(legend.position = "none") +

scale_fill_manual(values = c("Before Filtering" = "#4682B4", "After Filtering" = "#8A2BE2")) # Change colors

# Plot for metadata samples before and after filtering

metadata_samples_plot <- ggplot(subset(metadata_dims, Measure == "Samples"), aes(x = Stage, y = Count, fill = Stage)) +

geom_bar(stat = "identity") +

geom_text(aes(label = Count), vjust = -0.5) + # Add count labels on top of bars

theme_minimal() +

labs(title = "Metadata: Number of Samples (Rows)", y = "Count", x = "") +

theme(legend.position = "none") +

scale_fill_manual(values = c("Before Filtering" = "#32CD32", "After Filtering" = "#FFD700")) # Change colors

# Plot for metadata features before and after filtering

metadata_features_plot <- ggplot(subset(metadata_dims, Measure == "Features"), aes(x = Stage, y = Count, fill = Stage)) +

geom_bar(stat = "identity") +

geom_text(aes(label = Count), vjust = -0.5) + # Add count labels on top of bars

theme_minimal() +

labs(title = "Metadata: Number of Features (Columns)", y = "Sample Attributes", x = "") +

theme(legend.position = "none") +

scale_fill_manual(values = c("Before Filtering" = "#32CD32", "After Filtering" = "#FFD700")) # Change colors

# Arrange the four plots in a grid

combined_plot <- grid.arrange(count_samples_plot, count_genes_plot,

metadata_samples_plot, metadata_features_plot,

ncol = 2)

# Save the combined plot

ggsave("Figures/2.2.1.Outlier_Samples/Figure2.2.1.6.Dimensions_Comparison.png", plot = combined_plot, width = 12, height = 10, bg = "white")

# ========================================================

# Step 6: Reassign Filtered Data

# ========================================================

# Reassign filtered versions back to counts_matrix and metadata

counts_matrix <- counts_matrix_filtered

metadata <- metadata_filtered

# ======================================

# Step 7. Print Summary Information about the Count Matrix and Metadata

# ======================================

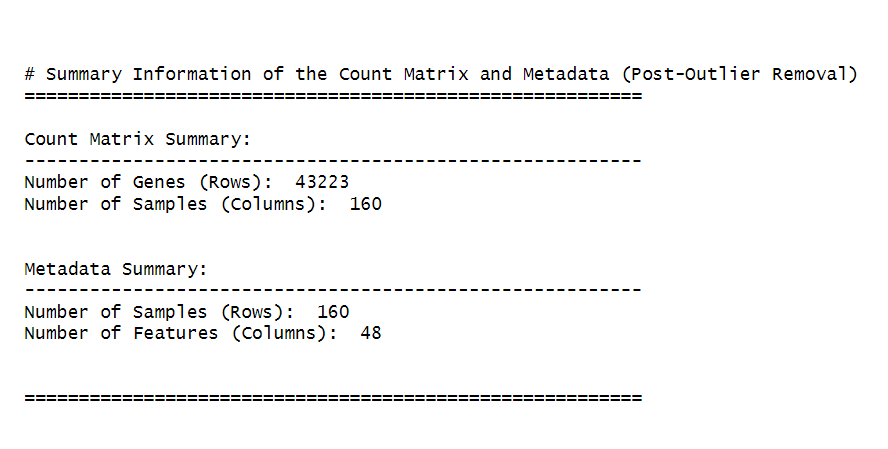

cat("\n\n# Summary Information of the Count Matrix and Metadata (Post-Outlier Removal)\n")

cat("=========================================================\n")

# Count matrix summary

cat("\nCount Matrix Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Genes (Rows): ", nrow(counts_matrix), "\n")

cat("Number of Samples (Columns): ", ncol(counts_matrix), "\n\n")

# Metadata summary

cat("\nMetadata Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Samples (Rows): ", nrow(metadata), "\n")

cat("Number of Features (Columns): ", ncol(metadata), "\n\n")

cat("\n=========================================================\n")

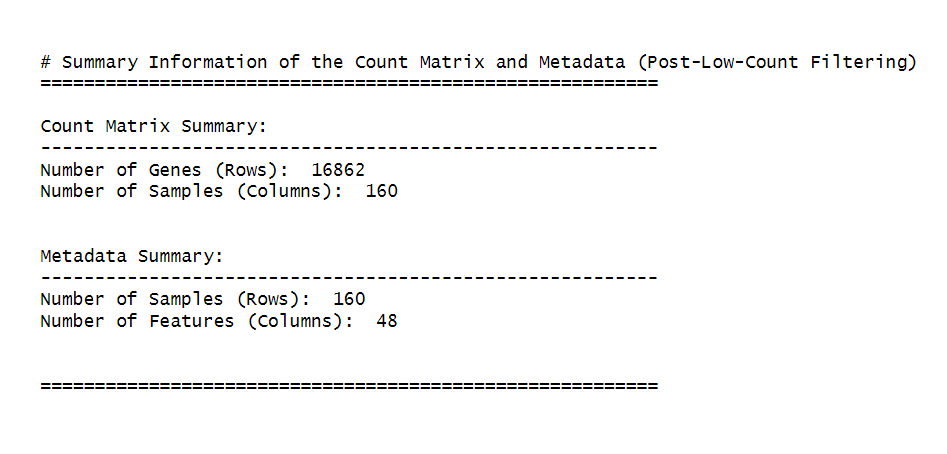

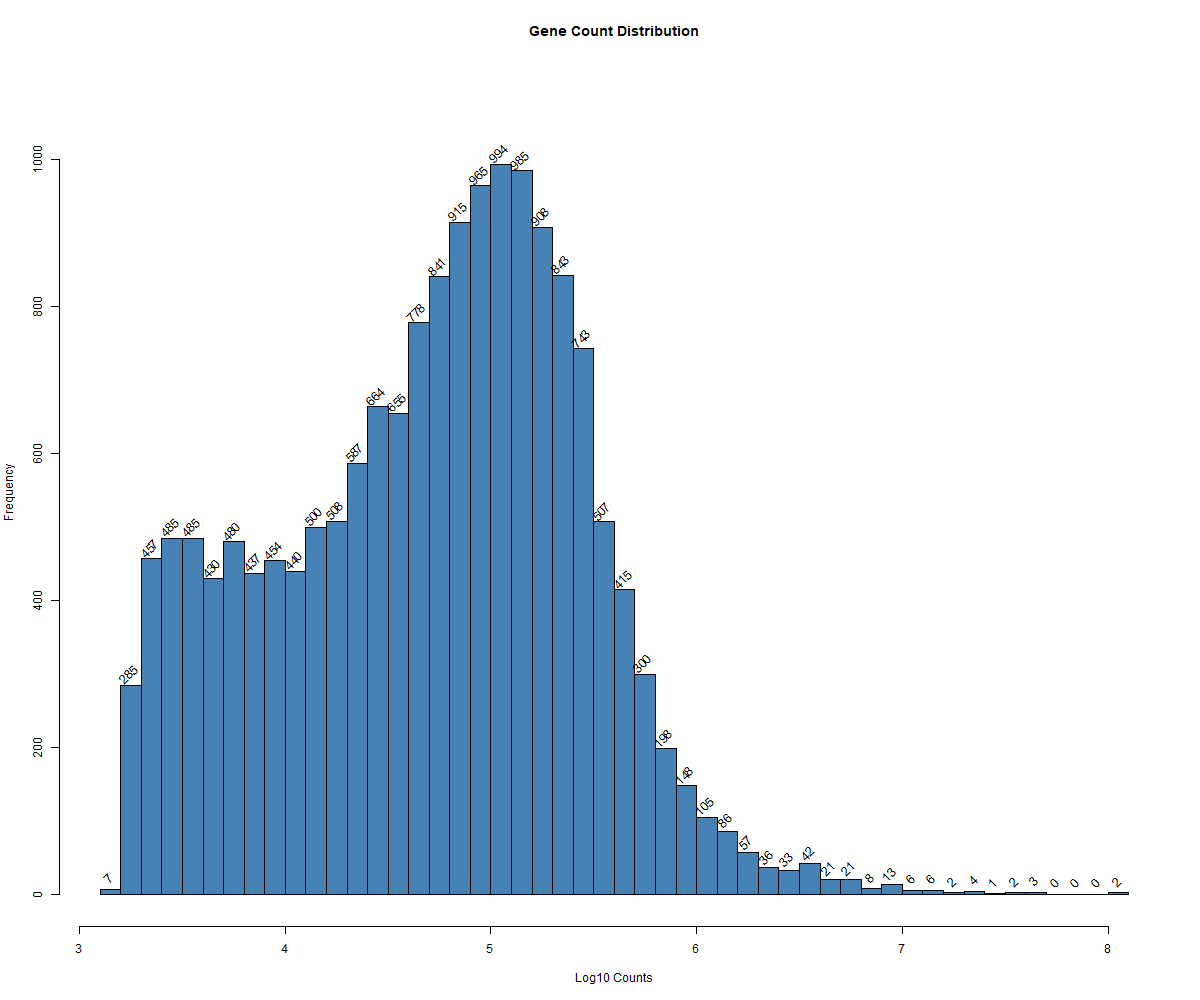

ncol(counts_matrix) / 2). This step ensures that the filtered dataset retains genes with meaningful levels of expression across a significant number of samples. After identifying the genes to keep, we filter the counts_matrix to retain only those genes, reassign the filtered matrix back to counts_matrix (Step 2), and then check the dimensions of the filtered dataset (Step 3) to confirm the number of genes and samples that remain (Figure 2.2.2).Code Block 2.2.2: Pre-filtering Low-Count Genes to Improve Data Quality

# ========================================================

# Step 1: Pre-filter low-count genes

# ========================================================

# Step 1: Create a logical matrix to check if each value in counts_matrix is >= 10 (sufficient expression).

# Step 2: Retain genes that have counts >= 10 in at least half of the samples (ncol(counts_matrix) / 2).

keep_genes <- rowSums(counts_matrix >= 10) >= (ncol(counts_matrix) / 2)

# Filter the counts matrix to retain only the genes that meet the filtering criteria

filtered_counts_matrix <- counts_matrix[keep_genes, ]

# ========================================================

# Step 2: Reassign filtered matrix to counts_matrix

# ========================================================

# Assign the filtered counts matrix back to counts_matrix for consistency in later steps

counts_matrix <- filtered_counts_matrix

# ======================================

# Step 3. Check dimensions of the filtered matrix (Summary Information after Low-Count Filtering)

# ======================================

cat("\n\n# Summary Information of the Count Matrix and Metadata (Post-Low-Count Filtering)\n")

cat("=========================================================\n")

# Count matrix summary

cat("\nCount Matrix Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Genes (Rows): ", nrow(counts_matrix), "\n")

cat("Number of Samples (Columns): ", ncol(counts_matrix), "\n\n")

# Metadata summary

cat("\nMetadata Summary:\n")

cat("---------------------------------------------------------\n")

cat("Number of Samples (Rows): ", nrow(metadata), "\n")

cat("Number of Features (Columns): ", ncol(metadata), "\n\n")

cat("\n=========================================================\n")

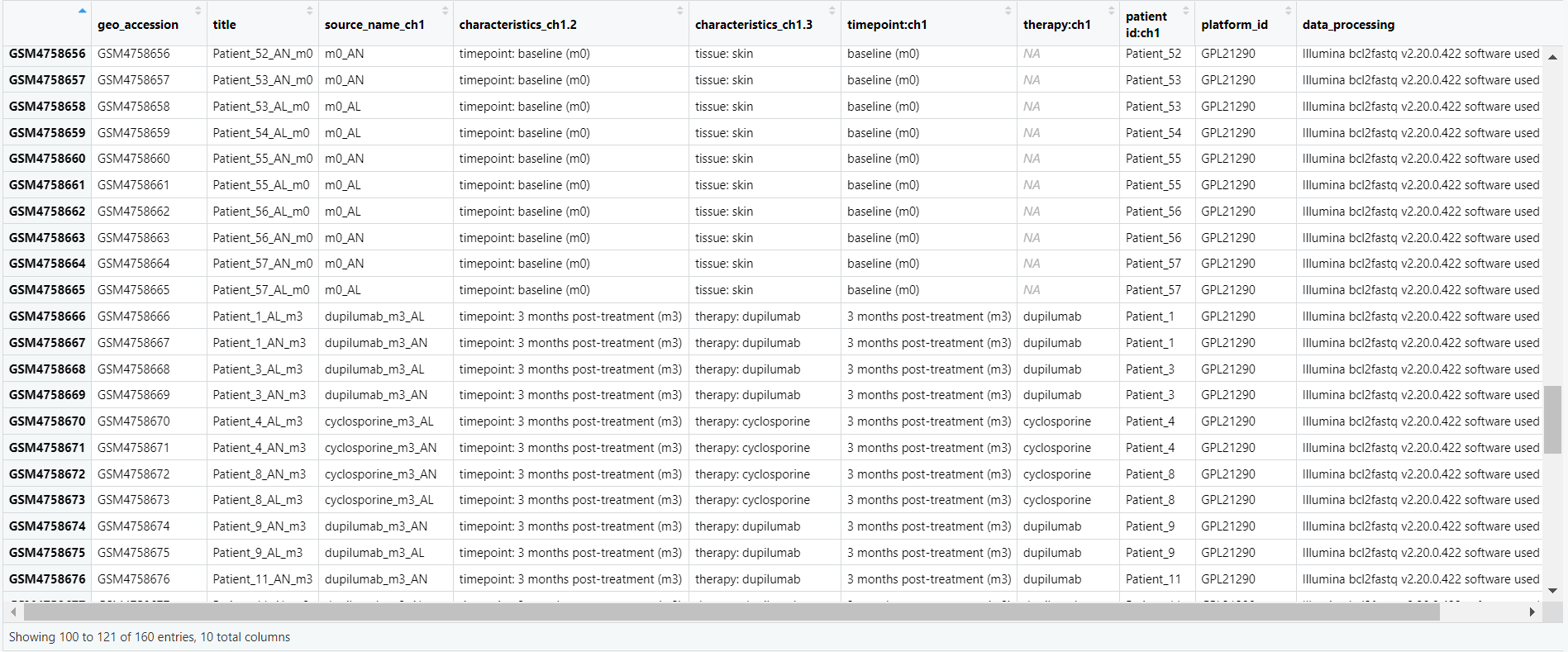

Code Block 3.1.1. Metadata Exploration

# View all column names in the metadata to select relevant columns for analysis

# This step helps us to explore the structure of the metadata and understand which columns are available.

colnames(metadata)

# Select relevant columns for analysis based on the experiment

selected_metadata <- metadata[, c("geo_accession", "title", "source_name_ch1", "characteristics_ch1.2", "characteristics_ch1.3", "timepoint:ch1", "therapy:ch1", "patient id:ch1", "platform_id", "data_processing")]

# Open the selected metadata in a viewer for further exploration

View(selected_metadata)

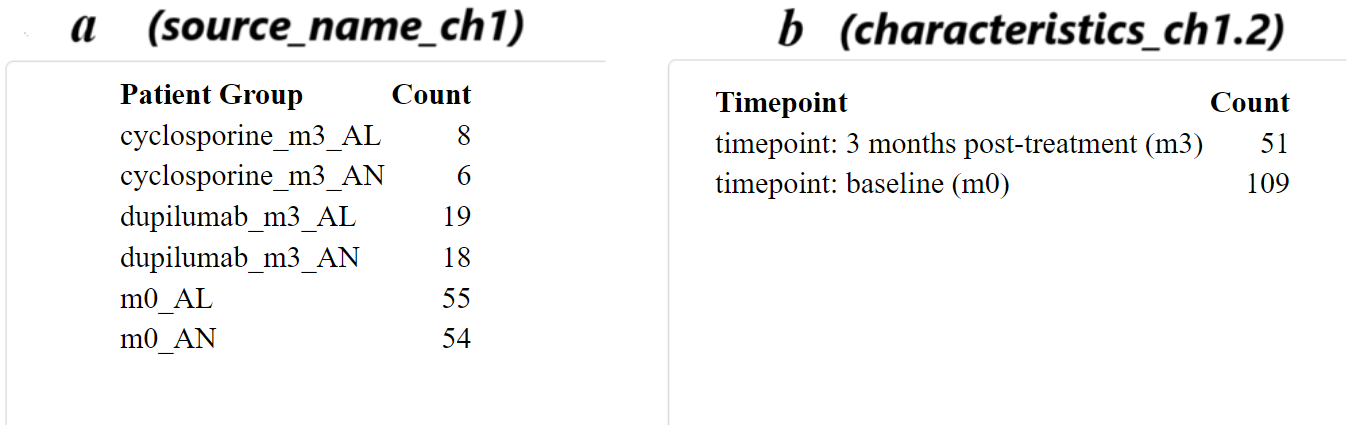

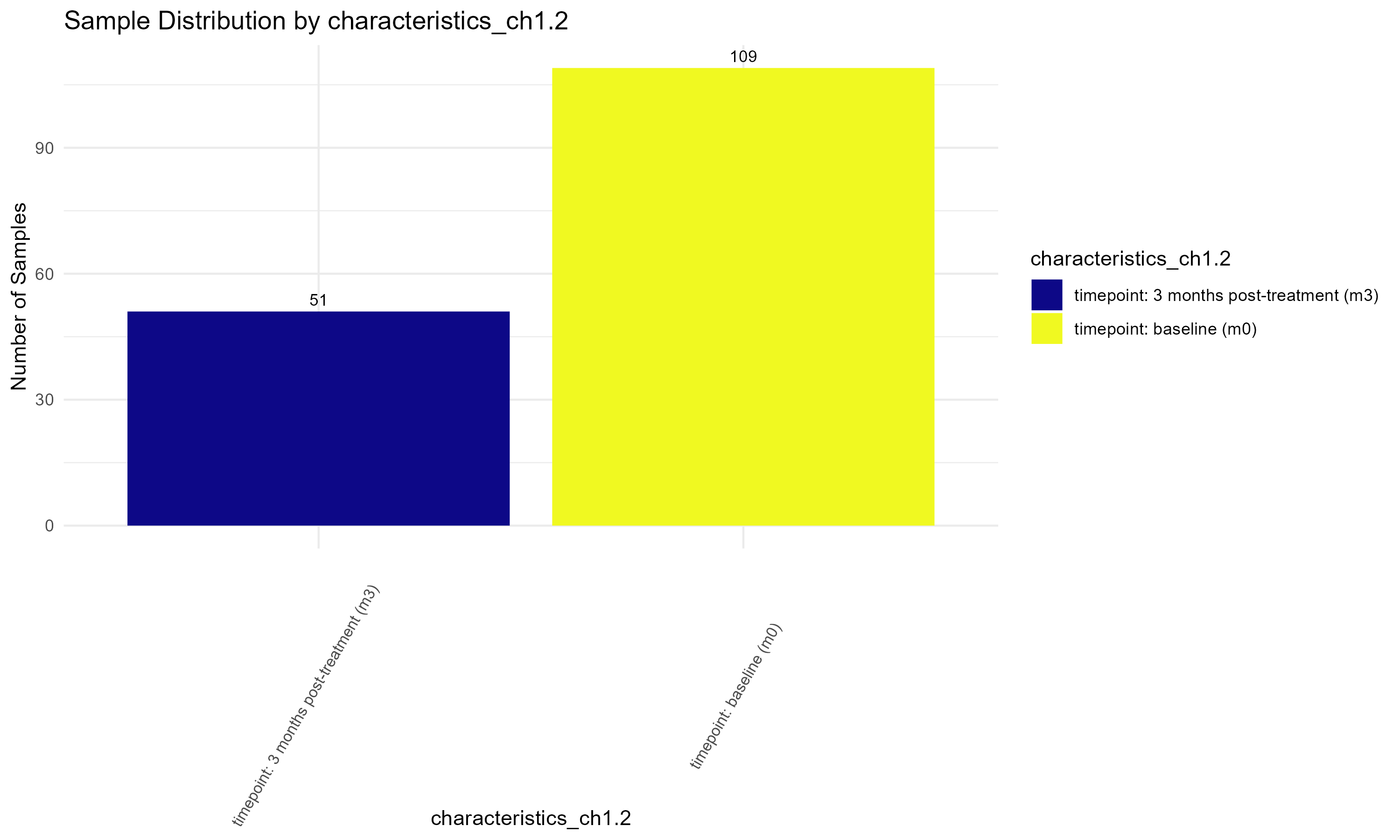

Code Block 3.1.2.1: Sample Distribution Table Generation

# Table of samples by patient group (e.g., lesional vs non-lesional)

group_table <- table(metadata$source_name_ch1)

kable(group_table, format = "html", col.names = c("Patient Group", "Count"))

# Table of samples by timepoints (e.g., baseline vs post-treatment)

timepoint_table <- table(metadata$characteristics_ch1.2)

kable(timepoint_table, format = "html", col.names = c("Timepoint", "Count"))

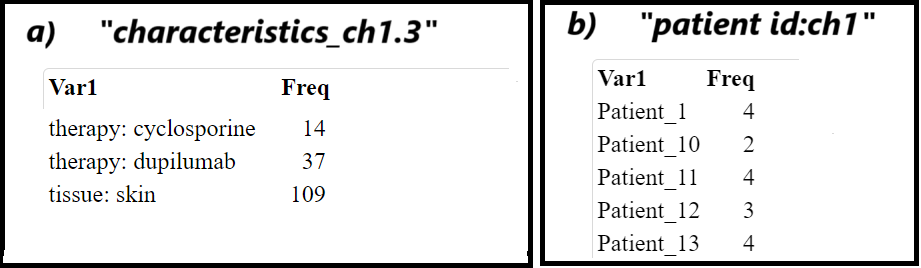

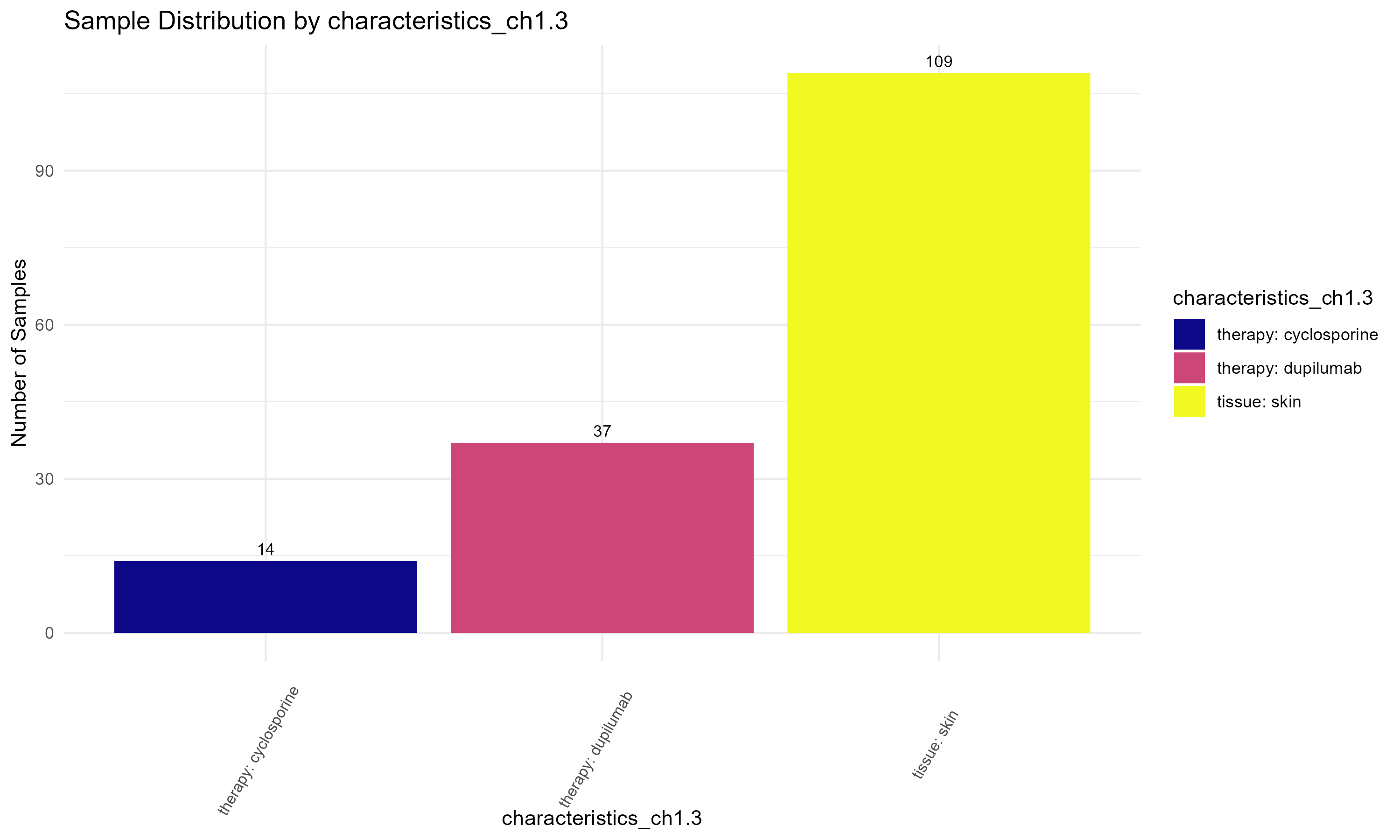

Code Block 3.1.2.2. Assessing Sample Characteristics for Experimental Design

# ======================

# List of all relevant columns

# These columns are selected based on the characteristics of the experiment,

# including metadata features like source names, patient IDs, and data processing details.

columns <- c("source_name_ch1", # Source of the sample

"characteristics_ch1.2", # Experimental characteristic 1

"characteristics_ch1.3", # Experimental characteristic 2

"therapy:ch1", # Therapy information

"patient id:ch1", # Patient ID

"platform_id", # Platform used for data generation

"extract_protocol_ch1", # Sample extraction protocol 1

"extract_protocol_ch1.1", # Sample extraction protocol 2

"data_processing", # Data processing detail 1

"data_processing.1", # Data processing detail 2

"data_processing.2", # Data processing detail 3

"data_processing.3", # Data processing detail 4

"data_processing.4", # Data processing detail 5

"data_processing.5", # Data processing detail 6

"data_processing.6" # Data processing detail 7

)

# ==========

# Loop through each column and generate a summary table for each

# The loop checks if each column exists in the 'metadata' dataframe and prints a table of its unique values and frequencies.

for (col in columns) {

if (col %in% colnames(metadata)) { # Ensure the column exists in the metadata dataframe

group_table <- table(metadata[[col]]) # Access and count the occurrences of each unique value in the column

print(kable(group_table)) # Display the table using kable for a nicely formatted output

} else {

print(paste("Column", col, "does not exist in the metadata")) # Handle cases where the column doesn't exist

}

}

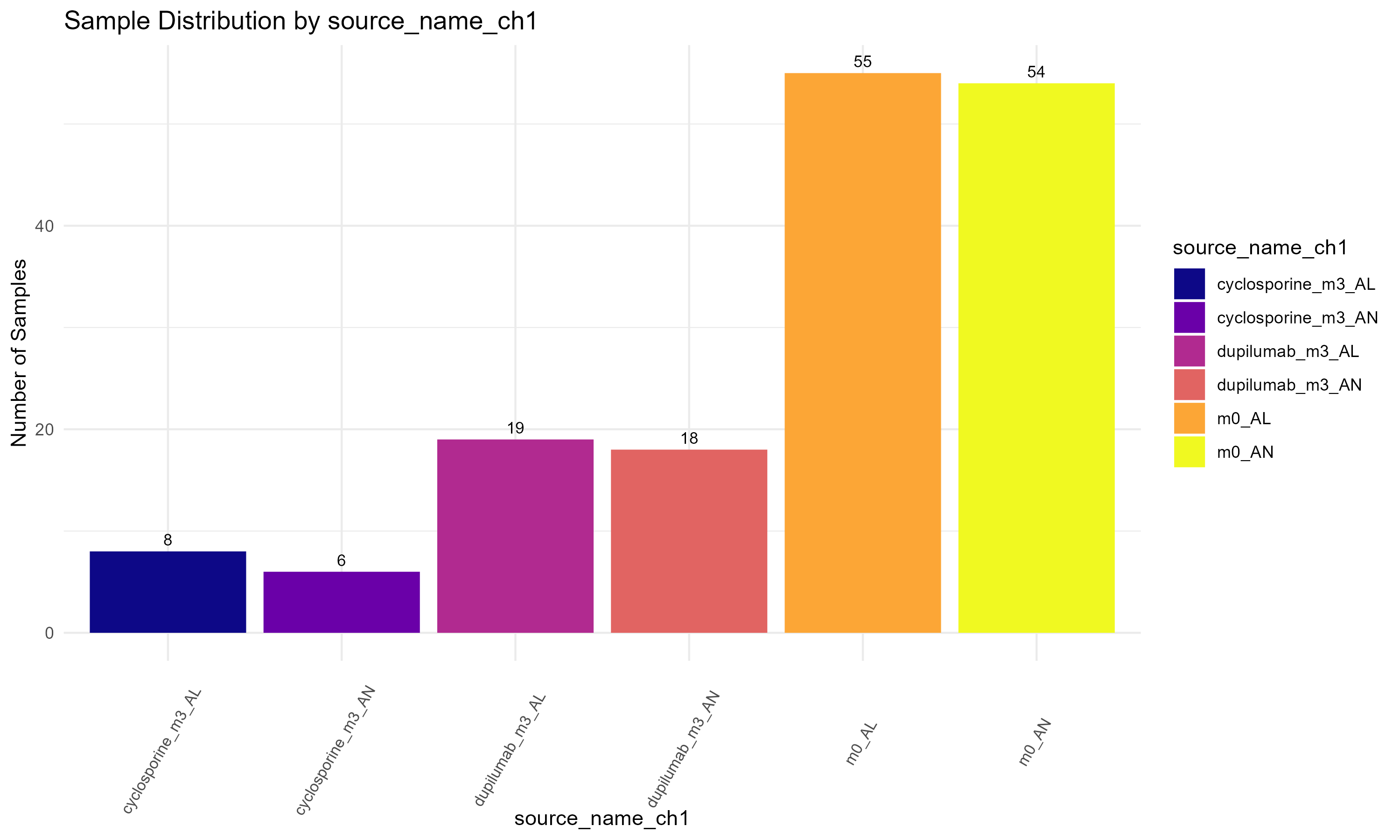

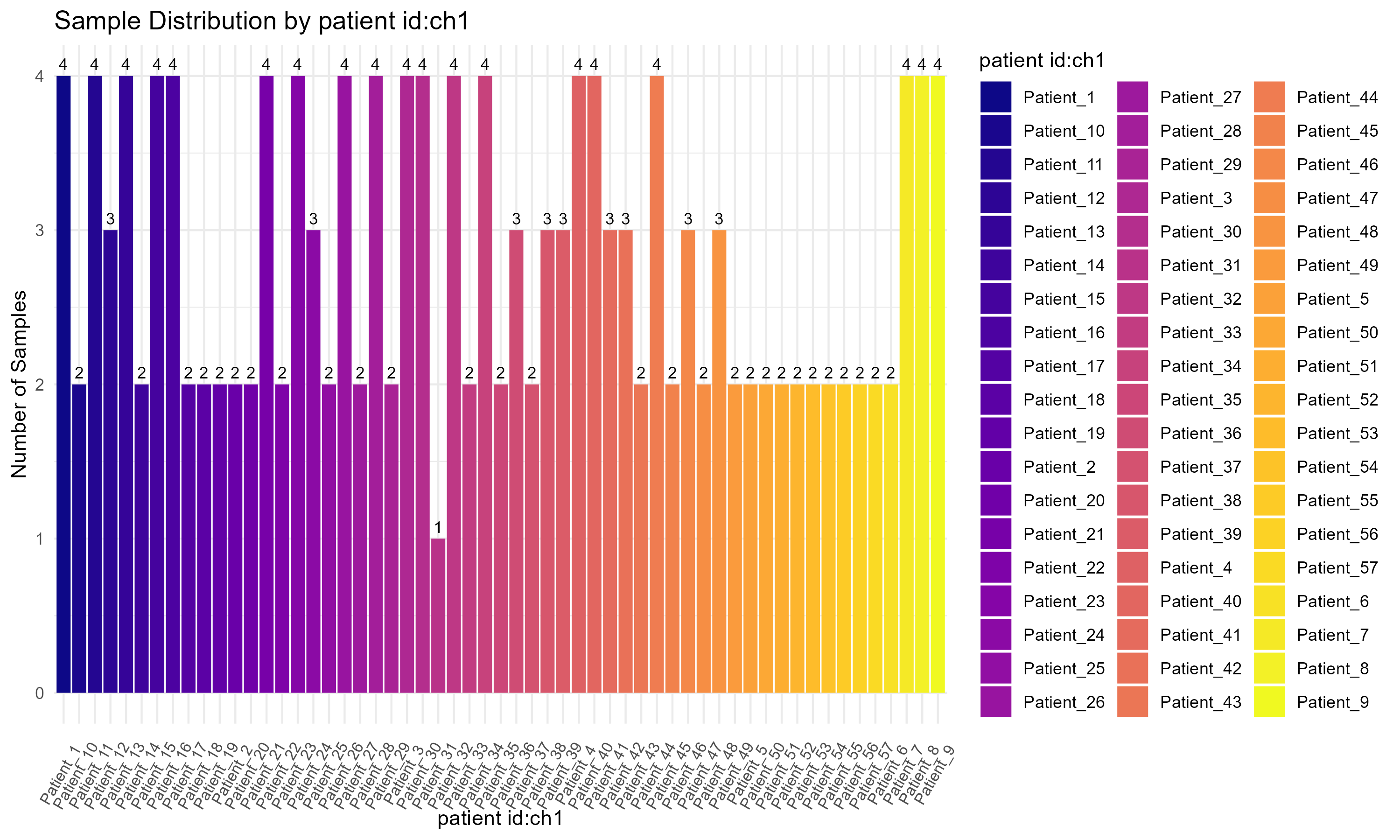

source_name_ch1, to display the sample distribution by experimental condition (Figure 3.1.3.1). However, the variable can be easily substituted with others if needed. (Step 1): The ggplot2 library is loaded for creating the plot. (Step 2): A bar plot is constructed using geom_bar() to create the bars and geom_text() to add count labels, where ..count.. refers to the computed count statistic. The plot is styled using theme_minimal() and color is applied with scale_fill_brewer(). (Step 3): Finally, the ggsave() function saves the plot to a specified location.

Code Block 3.1.3.1. Visualizing Sample Distribution by Experimental Condition

# ====================================

# Step 1: Load the necessary library

# ====================================

library(ggplot2)

# ============================================

# Step 2: Create a bar plot for sample counts

# ============================================

p <- ggplot(metadata, aes(x = `source_name_ch1`, fill = `source_name_ch1`)) +

geom_bar() + # Add bars representing sample counts

geom_text(stat = "count", aes(label = ..count..), vjust = -0.5, size = 3) + # Add count labels above bars

theme_minimal() + # Apply a minimal theme for better readability

labs(title = "Sample Distribution by Experimental Condition",

x = "Source Name",

y = "Number of Samples") +

theme(axis.text.x = element_text(angle = 60, vjust = 0.5, hjust = 0.6, size = 8)) + # Adjust axis label size and angle

scale_fill_brewer(palette = "Set3") # Apply a color palette for the bars

# The variable "source_name_ch1" in this code can be manually replaced by other variables

# such as "characteristics_ch1.2", "characteristics_ch1.3", or "patient id:ch1" to visualize their distributions.

# ==================================

# Step 3: Save the plot to a file

# ==================================

ggsave("Figures/3.1.3.1.Bar_Chart_Metadata_Variables/3.1.3.1.Sample_Distribution_by_Condition.png",

plot = p, width = 10, height = 6, dpi = 300, bg = "white")

Code Block 3.1.3.2. Visualizing Sample Distribution for Multiple Metadata Variables Using Automated Bar Charts

# =========================== Step 1: Install and Load Necessary Packages =========================== #

# Load the required packages for plotting

library(ggplot2) # For creating the bar plots

library(viridis) # For using a color palette that supports many unique colors

# =========================== Step 2: Define Columns to Visualize =========================== #

# List of columns to visualize (these correspond to the metadata columns you want to plot)

columns_to_plot <- c("source_name_ch1", "characteristics_ch1.2", "characteristics_ch1.3", "patient id:ch1")

# =========================== Step 3: Loop Through Each Column and Create Plots =========================== #

# Loop through each column and create a bar plot for sample distribution

for (col in columns_to_plot) {

# --------------------- Convert Column Name for Tidy Evaluation --------------------- #

# Convert the column name (a string) to a symbol that ggplot can interpret within aes()

col_sym <- sym(col)

# --------------------- Sanitize Column Name for Saving Files --------------------- #

# Replace ":" with "_" to create a valid filename (since ":" is not allowed in filenames)

sanitized_col <- gsub("[:]", "_", col)

# =========================== Step 4: Create Bar Plot =========================== #

# Create the bar plot for the current column

p <- ggplot(metadata, aes(x = !!col_sym, fill = !!col_sym)) + # Fill colors based on the column

geom_bar() + # Create the bar chart

geom_text(stat = "count", aes(label = ..count..), vjust = -0.5, size = 3) + # Add text labels above bars to show the count

theme_minimal() + # Use a minimal theme for a clean appearance

labs(title = paste("Sample Distribution by", col), x = col, y = "Number of Samples") + # Add title and axis labels

theme(axis.text.x = element_text(angle = 60, vjust = 0.5, hjust = 0.6, size = 8)) + # Rotate x-axis labels and adjust their size

scale_fill_viridis_d(option = "C") # Use the "viridis" color palette to ensure enough distinct colors for each category

# --------------------- Display the Plot --------------------- #

# Print the plot so it displays in the R console or notebook

print(p)

# =========================== Step 5: Save the Plot as PNG =========================== #

# Save the plot to a file in the specified directory with a relevant name

ggsave(filename = paste0("Figures/3.1.3.2.Bar_Chart_Metadata_Variables/Figure3.1.3.2_", sanitized_col, "_Sample_Distribution.png"),

plot = p, width = 10, height = 6, dpi = 300, bg = "white")

}

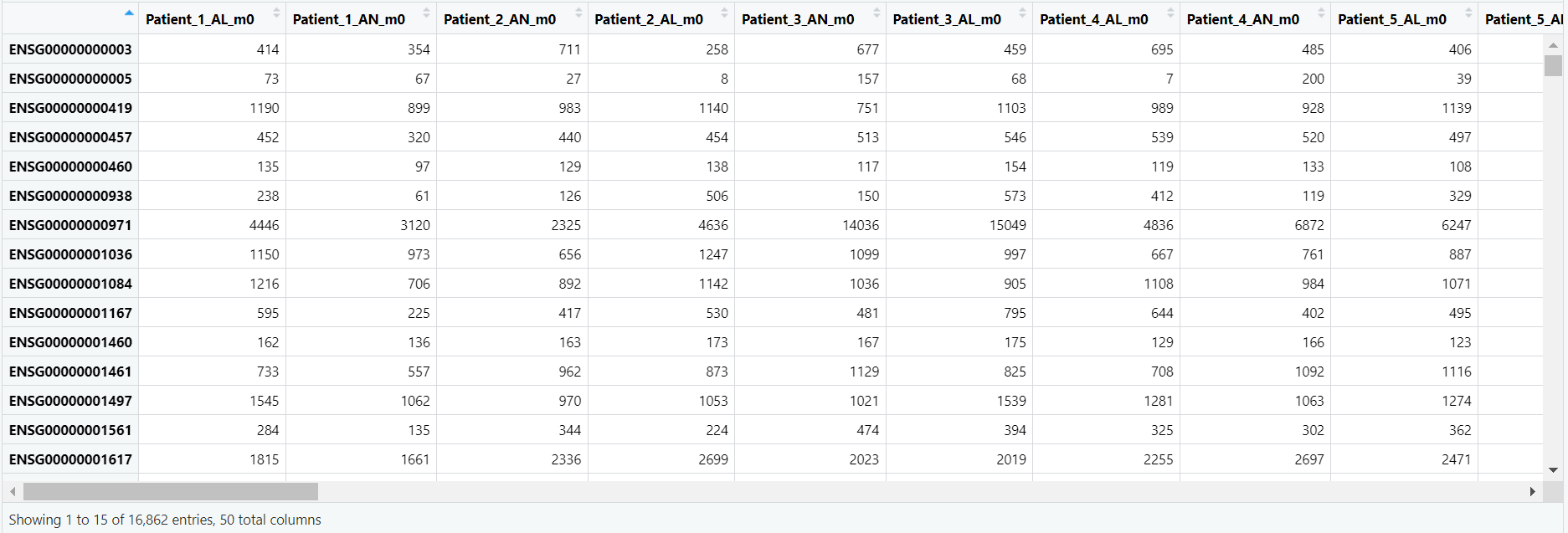

Code Block 3.2.1. Viewing the count matrix to inspect the gene expression data for each sample.

# View the first few rows of the count matrix

# This allows us to inspect the raw gene expression data for each sample.

View(counts_matrix)

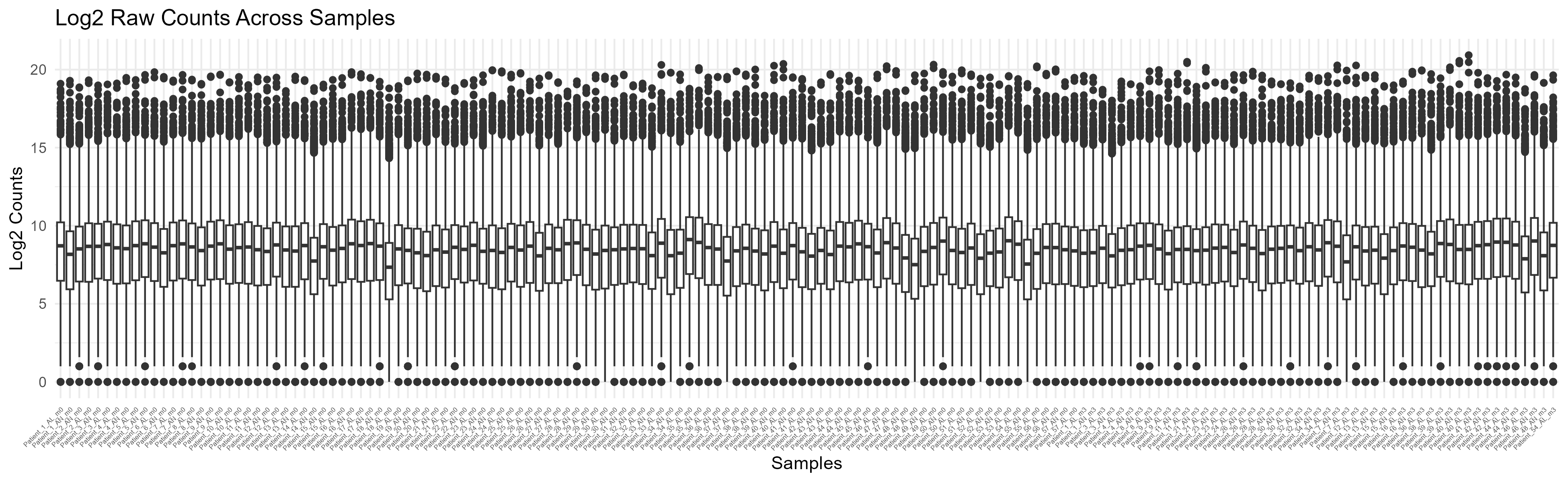

Code Block 3.2.2.1. Boxplot of Raw Counts (Uncolored)

# ============================

# Step 1: Load necessary library

# ============================

library(ggplot2)

# ============================

# Step 2: Prepare Data for Plotting

# ============================

# Convert counts_matrix to a data frame and log-transform it

df <- as.data.frame(log2(counts_matrix + 1)) # Log2 transform the counts matrix

# Add a sample column (rownames will become a new column for plotting)

df$Sample <- rownames(df)

# Reshape the data for ggplot2 (long format)

df_long <- reshape2::melt(df, id.vars = "Sample")

# ============================

# Step 3: Create Boxplot

# ============================

p <- ggplot(df_long, aes(x = variable, y = value)) +

geom_boxplot() + # Create boxplot

theme_minimal() + # Apply a minimal theme for better readability

labs(title = "Log2 Raw Counts Across Samples", x = "Samples", y = "Log2 Counts") +

theme(axis.text.x = element_text(angle = 45, hjust = 1, size = 4),

plot.background = element_rect(fill = "white", colour = NA), # Set white background

panel.background = element_rect(fill = "white", colour = NA)) # Set white background for the panel

# ============================

# Step 4: Save the Plot 3.2.2.1.Uncolored_Boxplot_Raw_Counts

# ============================

ggsave("Figures/3.2.2.Boxplot_Raw_Counts/3.2.2.1.Uncolored_Boxplot_Raw_Counts.png"",

plot = p, width = 13, height = 4)

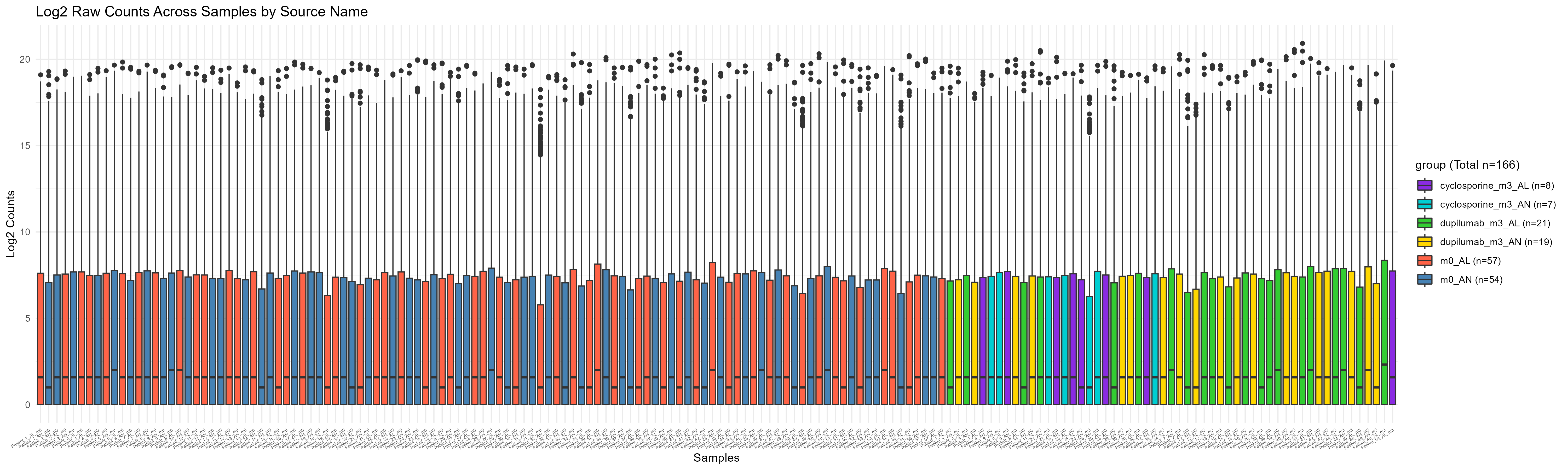

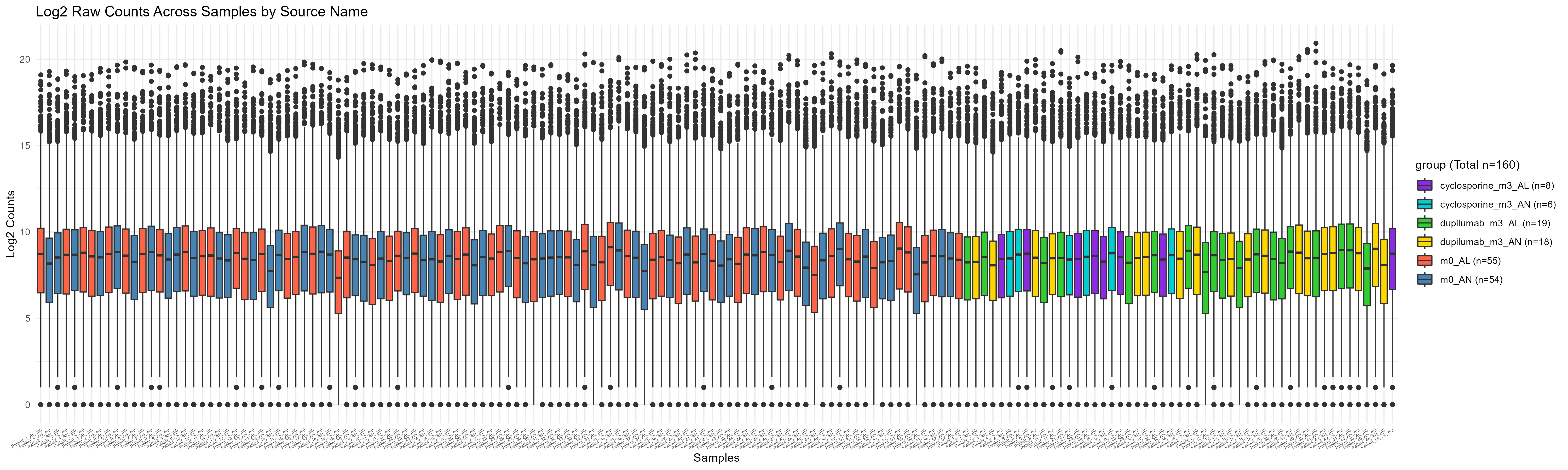

Code Block 3.2.2.2. Boxplot of Raw Counts Colored by Experimental Condition

# ============================

# Load necessary libraries

# ============================

library(ggplot2)

library(reshape2)

# ============================

# Step 1: Align Sample IDs and Metadata

# ============================

# Set the row names of metadata to the sample names (stored in metadata$title)

rownames(metadata) <- metadata$title

# Extract the sample IDs from counts_matrix (columns represent samples)

sample_ids <- colnames(counts_matrix)

# Filter metadata to contain only rows that match the sample IDs in the count matrix

metadata_filtered <- metadata[metadata$title %in% sample_ids, ]

# Reorder metadata to match the order of sample IDs in the count matrix

metadata_filtered <- metadata_filtered[match(sample_ids, rownames(metadata_filtered)), ]

# ============================

# Step 2: Prepare Data for Plotting

# ============================

# Transpose the counts matrix to make samples the rows

counts_matrix_t <- t(counts_matrix)

# Convert the transposed counts matrix to a data frame and log-transform the counts

# Add 1 to avoid log(0), which is undefined

df <- as.data.frame(log2(counts_matrix_t + 1))

# Add a sample column (rownames will become a new column for plotting)

df$Sample <- rownames(df)

# Add the group information from metadata (e.g., source_name_ch1)

df$group <- metadata_filtered$source_name_ch1[match(df$Sample, rownames(metadata_filtered))]

# Ensure `group` is a factor for proper grouping in the plot

df$group <- as.factor(df$group)

# ============================

# Step 3: Reshape the Data for ggplot2 (Long Format)

# ============================

# Melt the data frame to create a long format for ggplot2

df_long <- melt(df, id.vars = c("Sample", "group"))

# ============================

# Step 4: Define Colors for Each Unique Group and Add Sample Counts to the Labels

# ============================

# Calculate the number of samples for each group

group_counts <- table(df$group)

# Calculate the total number of samples

total_samples <- sum(group_counts)

# Create the color palette for the unique groups

unique_groups <- unique(df$group)

color_palette <- c("#FF6347", "#4682B4", "#32CD32", "#FFD700", "#8A2BE2", "#00CED1")

# Ensure the color palette has enough colors for all groups

group_colors <- setNames(color_palette[1:length(unique_groups)], unique_groups)

# ============================

# Step 5: Create the Plot with Updated Group Labels

# ============================

p <- ggplot(df_long, aes(x = factor(Sample, levels = sample_ids), y = value, fill = group)) +

geom_boxplot() +

theme_minimal() +

labs(title = "Log2 Raw Counts Across Samples by Source Name", x = "Samples", y = "Log2 Counts",

fill = paste0("group (Total n=", total_samples, ")")) + # Add total number to the legend title

theme(axis.text.x = element_text(angle = 30, hjust = 1, size = 4), # Rotate and adjust x-axis labels

plot.background = element_rect(fill = "white", colour = NA), # Set white background

panel.background = element_rect(fill = "white", colour = NA)) + # Set white background for the panel

scale_fill_manual(values = group_colors, labels = paste0(levels(df$group), " (n=", group_counts[levels(df$group)], ")")) # Use the custom color vector and add counts in the legend

# ============================

# Step 6: Save the Plot

# ============================

ggsave("Figures/3.2.2.Boxplot_Raw_Counts/3.2.2.2.Colored_Boxplot_Raw_Counts.png",

plot = p, width = 20, height = 6)

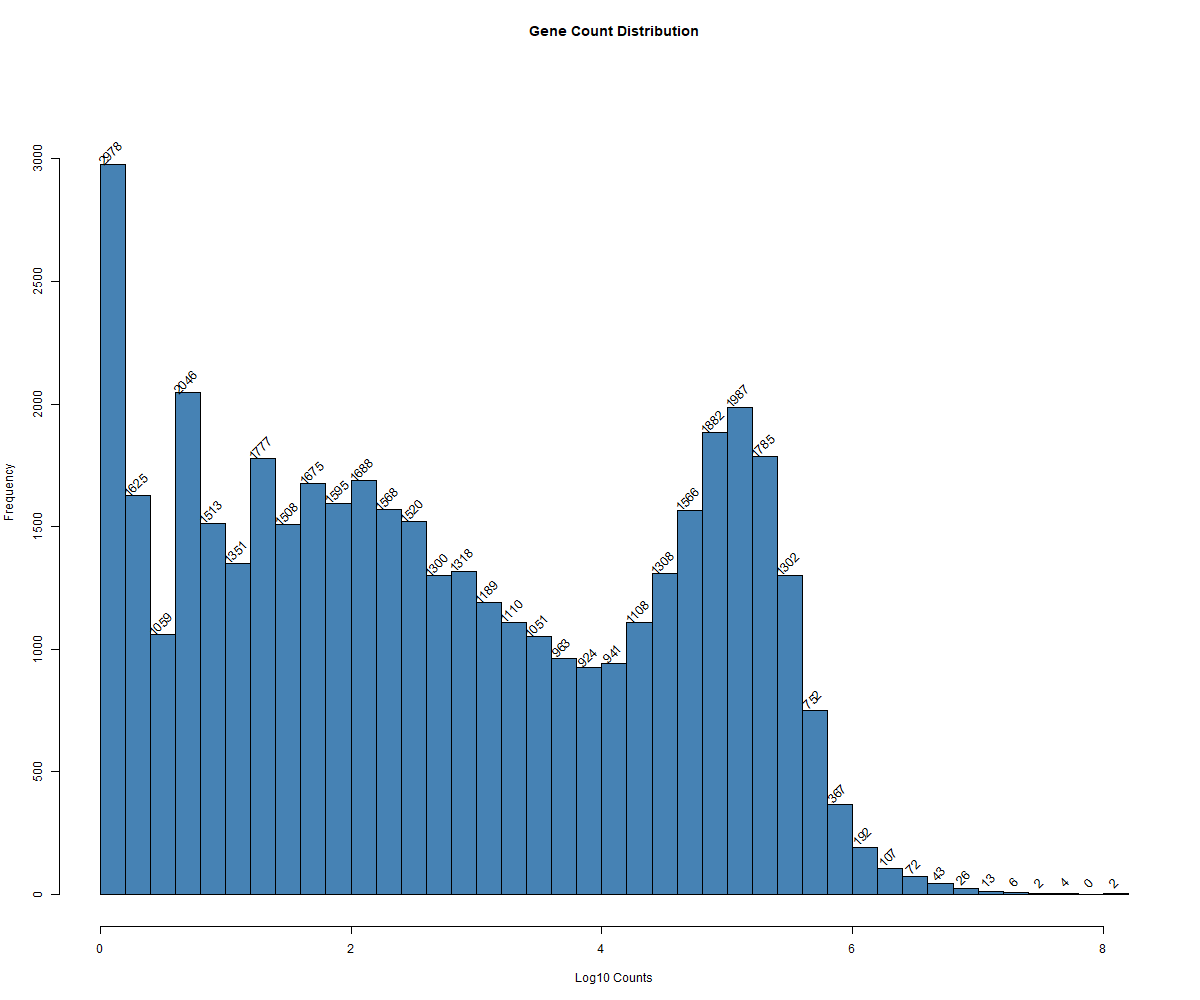

hist() function. (Step 4): To enhance the visualization, the gene count values are added as labels above each bar. Finally, (Step 5): The PNG device is closed, and the plot is saved.

Code Block 3.2.3. Histogram of Total Gene Counts Per Sample

# ============================

# Step 1: Define Output File Path

# ============================

# Define the file path where you want to save the image

output_file <- "C:/Users/Desktop/RNA_Seq_Task1/3/3_Count_Matrix_Exploration/3.2.3.a.histogram_Of_total_GeneCounts_Per_Sample_Before_Removing_LowCountGenes_AND_Outlier_Samples.png"

# ============================

# Step 2: Open PNG Device

# ============================

# Open the PNG device to save the plot

png(filename = output_file, width = 1200, height = 1000)

# ============================

# Step 3: Create Histogram of Log-Transformed Gene Counts

# ============================

# Calculate log10 of total counts per gene

log_gene_counts <- log10(rowSums(counts_matrix))

# Create the histogram and get the counts for each bin

hist_data <- hist(log_gene_counts,

breaks = 50, # Specify number of bins in the histogram

main = "Gene Count Distribution", # Title of the plot

xlab = "Log10 Counts", # Label for the x-axis

col = "steelblue") # Add color to the bars

# Update the y-axis limit to make room for labels

ylim <- c(0, max(hist_data$counts) * 1.1)

plot(hist_data, col = "steelblue", main = "Gene Count Distribution", xlab = "Log10 Counts", ylim = ylim) # cex: to change the font size of the labels on the bars

# ============================

# Step 4: Add Numbers Above Bars

# ============================

# Add the text labels above the histogram bars

text(hist_data$mids, hist_data$counts, labels = hist_data$counts, pos = 3, cex = 1, srt = 45)

# ============================

# Step 5: Close the PNG Device

# ============================

# Close the PNG device

dev.off()

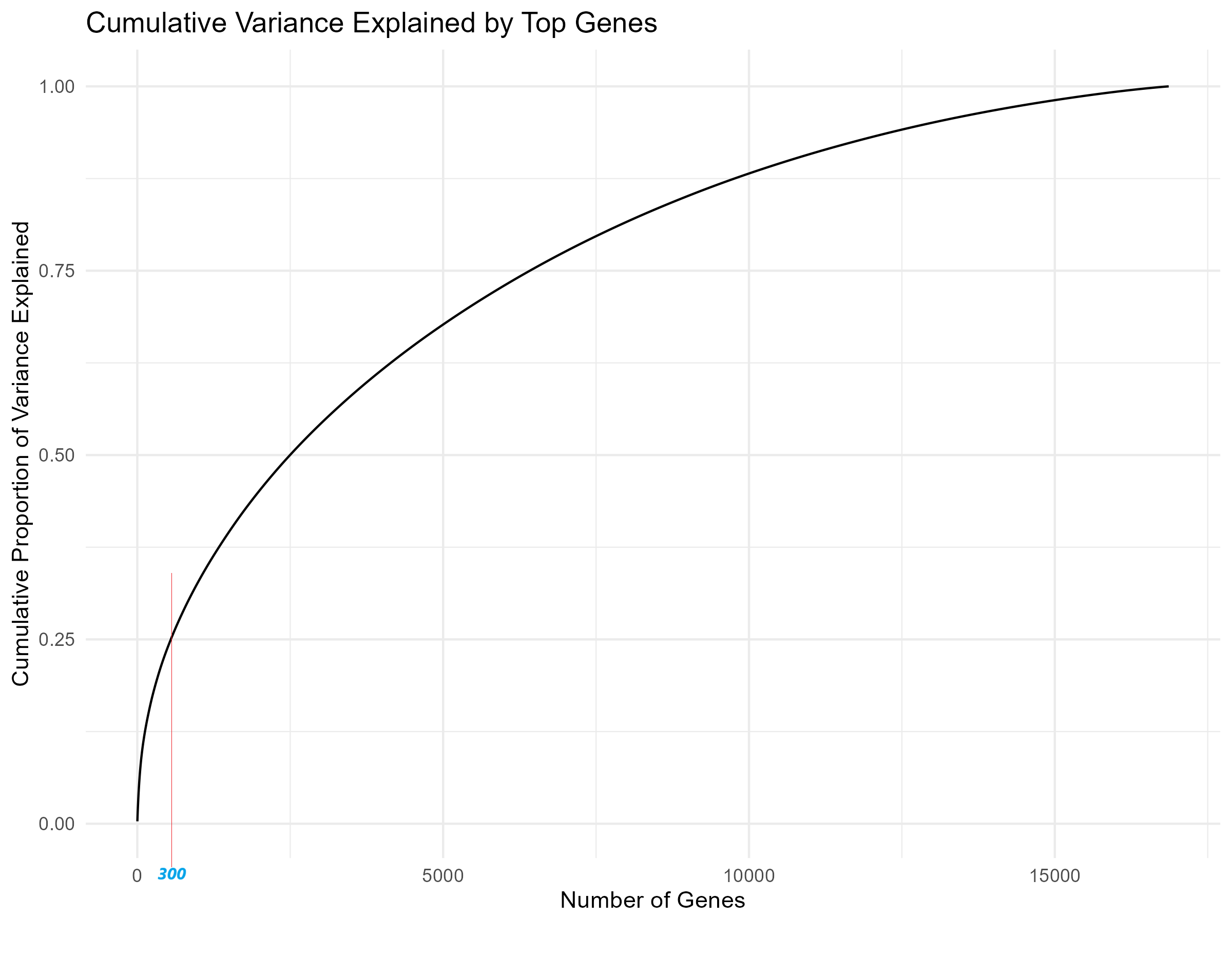

matrixStats and ggplot2 are loaded for variance calculations and plotting. (Step 2): The gene expression data in counts_matrix is log2-transformed to reduce its dynamic range, making it easier to interpret. (Step 3): Using the rowVars() function, the variance of each gene across all samples is calculated, identifying highly variable genes for further analysis. (Step 4): The cumulative variance is computed by sorting these variances and calculating their cumulative sum, which helps assess how much variability is captured by the top genes. (Step 5): Finally, a line plot is generated (Figure 3.2.4) using ggplot2 to visualize the cumulative variance explained by an increasing number of genes, aiding in the selection of the optimal number of genes for further analysis.

Code Block 3.2.4. Cumulative Variance Plot to Determine Optimal Gene Count for Heatmap

# =========== Step 1: Load Required Libraries =============

library(matrixStats) # Or DelayedMatrixStats for variance calculations

library(ggplot2) # For enhanced plotting capabilities

# =========== Step 2: Log2 Transformation =============

# Convert counts_matrix to a matrix and apply log2 transformation

# Log transformation reduces the dynamic range of the data, making it easier to interpret

counts_matrix_log <- log2(as.matrix(counts_matrix) + 1)

# =========== Step 3: Calculate Gene Variances =============

# Use the rowVars() function to calculate the variance for each gene across samples

# Gene variance is used to identify the most variable genes for further analysis

gene_variances <- rowVars(counts_matrix_log)

# =========== Step 4: Calculate Cumulative Variance =============

# Sort the variances in descending order and calculate the cumulative sum

cum_variance <- cumsum(sort(gene_variances, decreasing = TRUE)) / sum(gene_variances)

# =========== Step 5: Plot Cumulative Variance using ggplot2 for enhanced plotting =============

# Plot the cumulative proportion of variance explained as the number of genes increases

# This plot will help visualize how many top genes are needed to explain a high proportion of variance

ggplot(data.frame(Genes = 1:length(cum_variance), CumulativeVariance = cum_variance), aes(x = Genes, y = CumulativeVariance)) +

geom_line() +

labs(title = "Cumulative Variance Explained by Top Genes",

x = "Number of Genes",

y = "Cumulative Proportion of Variance Explained") +

theme_minimal()

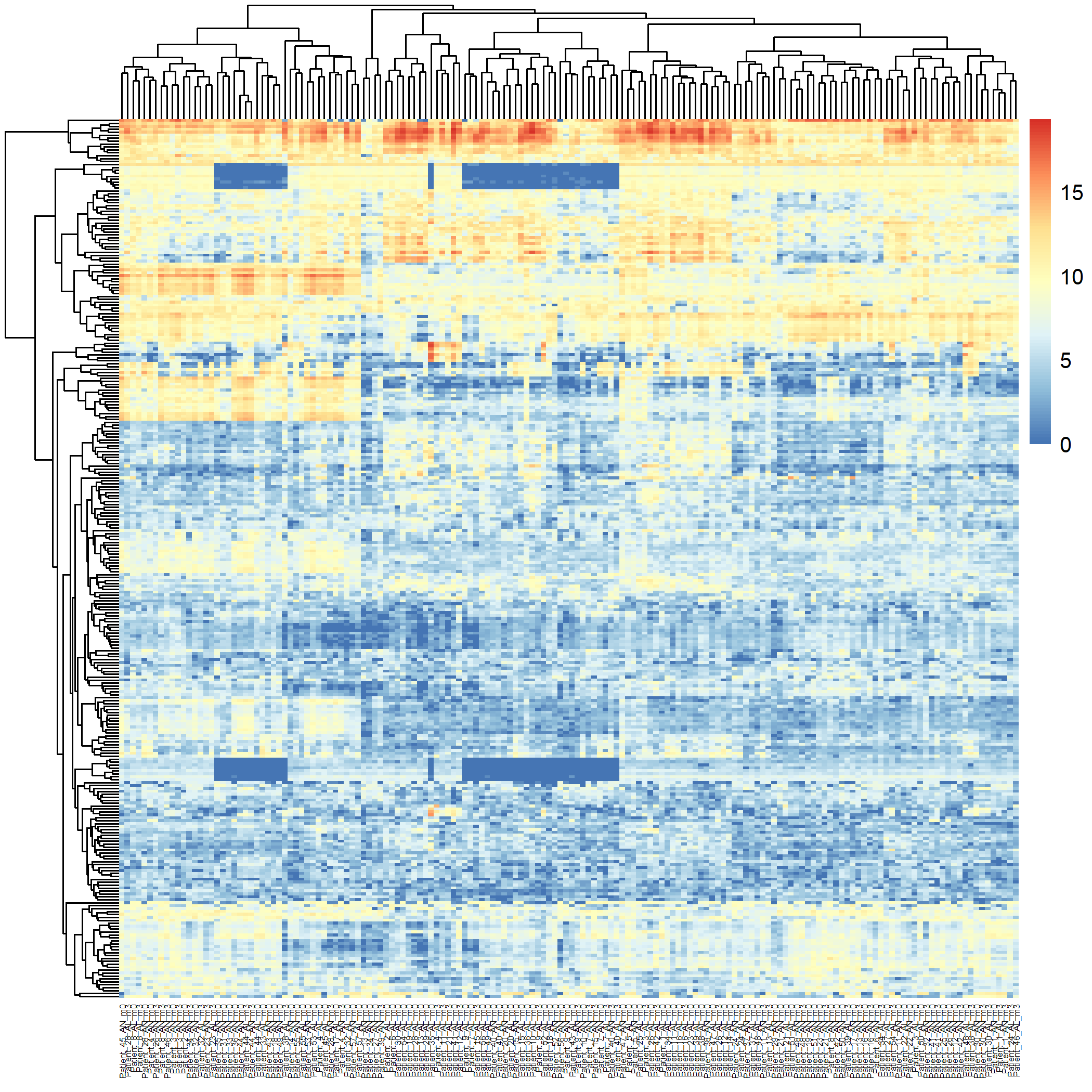

Code Block 3.2.5.1. Unsupervised Heatmap for All Samples (160 samples)

# ============ Load Required Libraries ============

# Load the required libraries for matrix operations and heatmap generation

library(matrixStats) # Ensure matrixStats is loaded for row variance calculation

library(pheatmap) # For generating heatmaps

# ============ Step 1: Convert counts_matrix to Matrix ============

# Convert counts_matrix to a matrix if it is currently a data frame

counts_matrix <- as.matrix(counts_matrix) # Ensures compatibility with downstream functions

# ============ Step 2: Log2 Transformation of Counts ============

# Apply a log2 transformation to reduce the dynamic range of the count data

counts_matrix_log <- log2(counts_matrix + 1) # Adding 1 to avoid log(0) issues

# ============ Step 3: Identify Top Variable Genes ============

# Recalculate the most variable genes based on the log-transformed counts

# We select the top 300 most variable genes using row variance

top_var_genes_log <- head(order(rowVars(counts_matrix_log), decreasing = TRUE), 300)

# ============ Step 4: Generate Heatmap and Save ============

# Create the heatmap for all 166 samples based on the top 300 most variable genes

# Adjust clustering and visual parameters such as label angles and font sizes

pheatmap(counts_matrix_log[top_var_genes_log, ],

cluster_rows = TRUE, cluster_cols = TRUE,

show_rownames = FALSE, show_colnames = TRUE,

angle_col = 90, # Rotate x-axis labels for better readability

fontsize_col = 4, # Reduce font size for the column labels

filename = "Figures/3.2.5.Exploratory_Heatmaps/3.2.5.1.Unsupervised_Heatmap_160_Samples.png") # Save the heatmap as an image

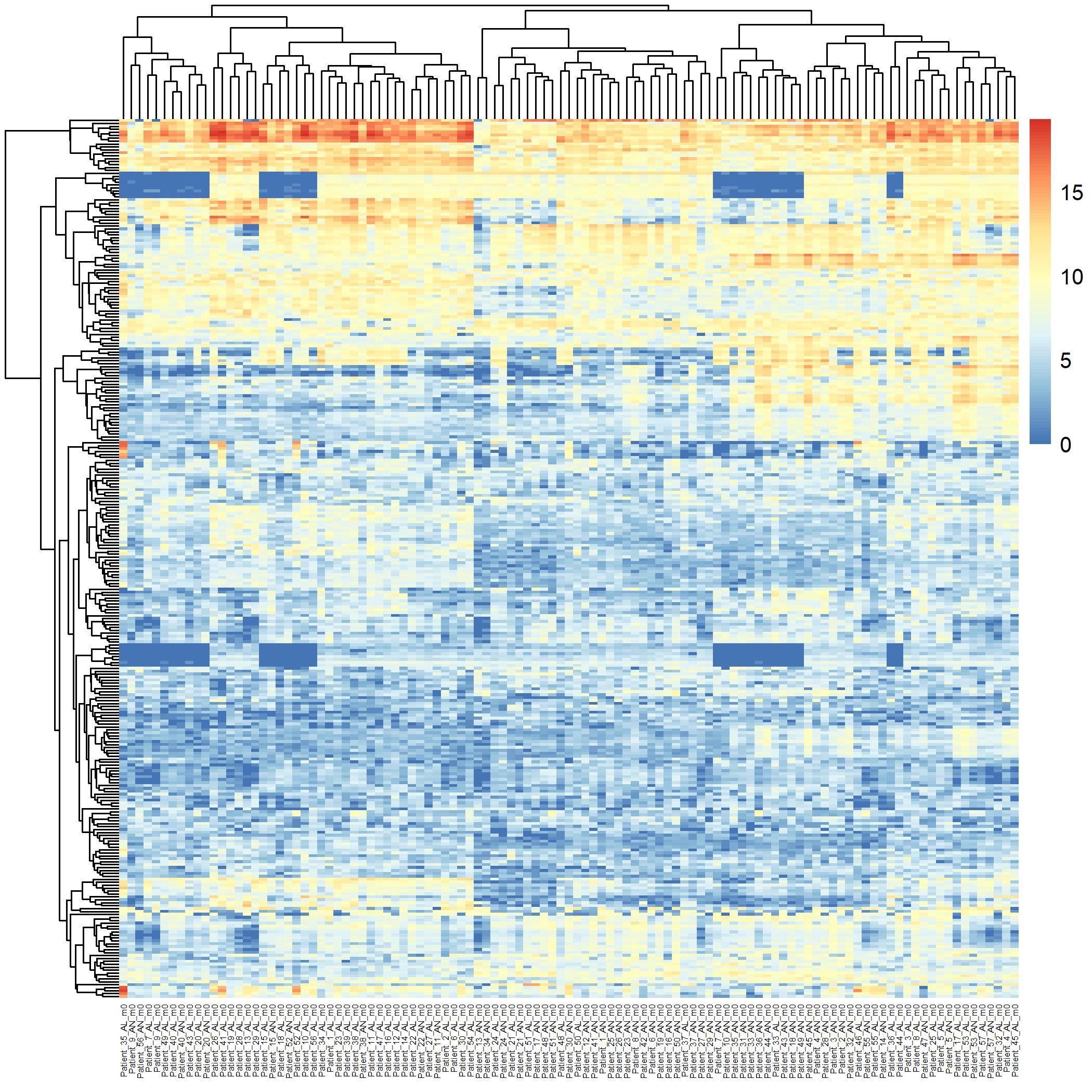

matrixStats and pheatmap libraries are loaded for matrix operations and heatmap generation. (Step 1): The metadata is subset to include only the baseline lesional (AL_m0) and non-lesional (AN_m0) samples by filtering based on metadata$title. These subsets are combined into one dataset for comparison. (Step 2): The counts_matrix is filtered to include only the lesional and non-lesional samples based on the sample names in metadata$title. (Step 3): A log2 transformation is applied to the filtered count matrix using log2() to reduce the dynamic range. (Step 4): The top 300 most variable genes are identified based on variance using rowVars(). (Step 5): A heatmap is generated (Figure 3.2.5.2) using pheatmap, with rows and columns clustered to visualize the relationship between samples and gene expression. Adjustments are made for label angles and font size, and the heatmap is saved as an image file.

Code Block 3.2.5.2. Unsupervised Heatmap for Baseline Lesional and Non-lesional Samples (109 samples)

# ============ Load Required Libraries ============

# Load the required libraries for matrix operations and heatmap generation

library(matrixStats) # Ensure matrixStats is loaded for row variance calculation

library(pheatmap) # For generating heatmaps

# ============ Step 1: Subset Metadata for Lesional and Non-lesional Samples ============

# Subset the metadata to include only the baseline lesional (AL_m0) and non-lesional (AN_m0) samples

lesional_samples <- metadata[grepl("AL_m0", metadata$title), ] # Filter metadata for lesional samples

non_lesional_samples <- metadata[grepl("AN_m0", metadata$title), ] # Filter metadata for non-lesional samples

# Combine lesional and non-lesional samples into one dataset for comparison

selected_samples <- rbind(lesional_samples, non_lesional_samples)

# ============ Step 2: Subset the Count Matrix ============

# Subset the count matrix to include only the lesional and non-lesional samples

# Assuming the sample names (columns) in counts_matrix match those in metadata$title

selected_sample_names <- selected_samples$title

counts_matrix_filtered <- counts_matrix[, selected_sample_names] # Filter the count matrix for the selected samples

# ============ Step 3: Log2 Transformation of Filtered Counts ============

# Apply a log2 transformation to the filtered count matrix to reduce the dynamic range

counts_matrix_log <- log2(as.matrix(counts_matrix_filtered) + 1) # Adding 1 to avoid log(0) issues

# ============ Step 4: Identify Top Variable Genes ============

# Recalculate the most variable genes based on the log-transformed counts

# Select the top 300 most variable genes

top_var_genes_log <- head(order(rowVars(counts_matrix_log), decreasing = TRUE), 300)

# ============ Step 5: Generate Heatmap and Save ============

# Create a heatmap for the lesional and non-lesional samples based on the top 300 most variable genes

# Adjust clustering and visual parameters such as label angles and font sizes

pheatmap(counts_matrix_log[top_var_genes_log, ],

cluster_rows = TRUE, cluster_cols = TRUE,

show_rownames = FALSE, show_colnames = TRUE,

angle_col = 90, # Rotate x-axis labels for better readability

fontsize_col = 4, # Reduce font size for the column labels

filename = "Figures/3.2.5.Exploratory_Heatmaps/3.2.5.2.Unsupervised_Heatmap_Baseline_Samples.png") # Save the heatmap as an image

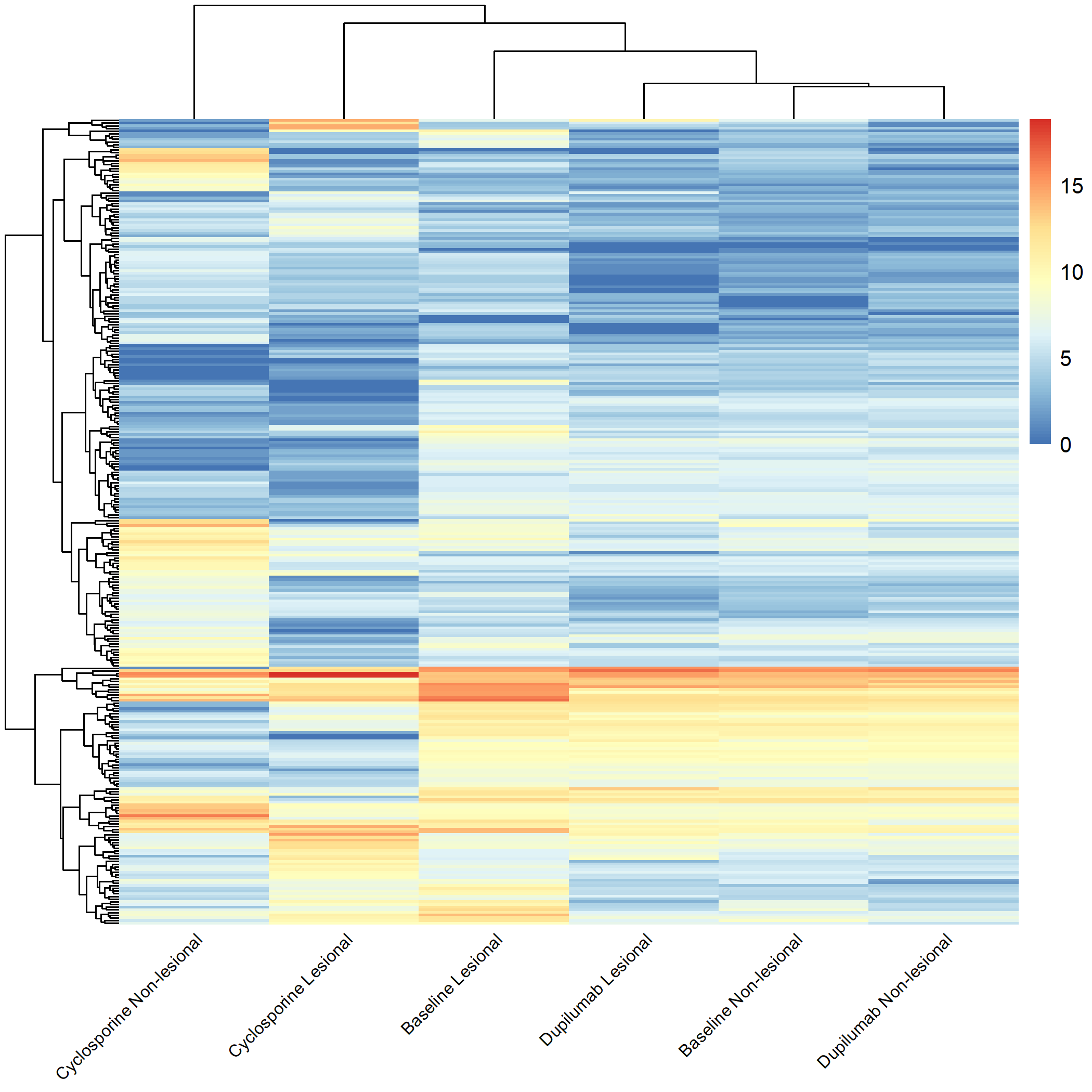

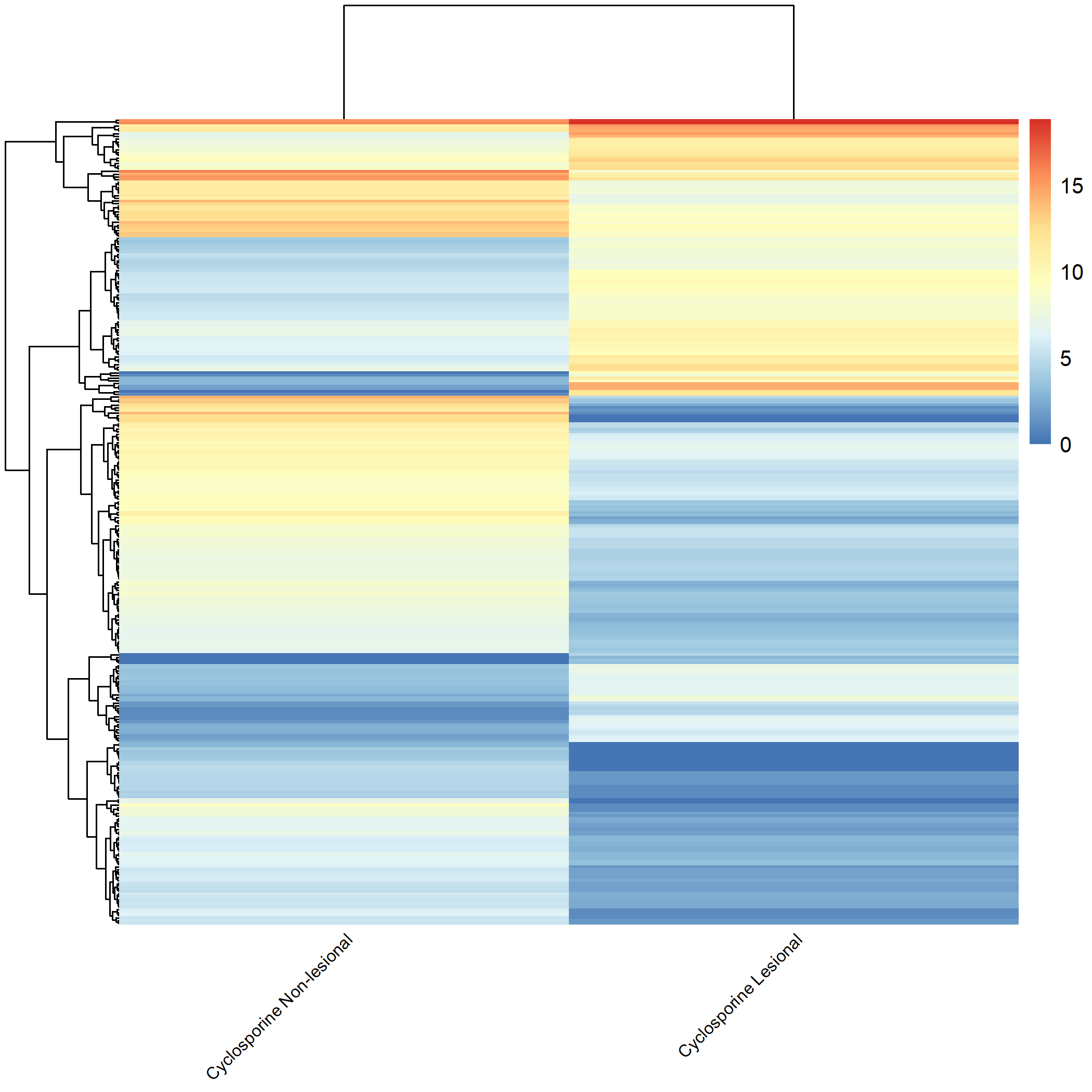

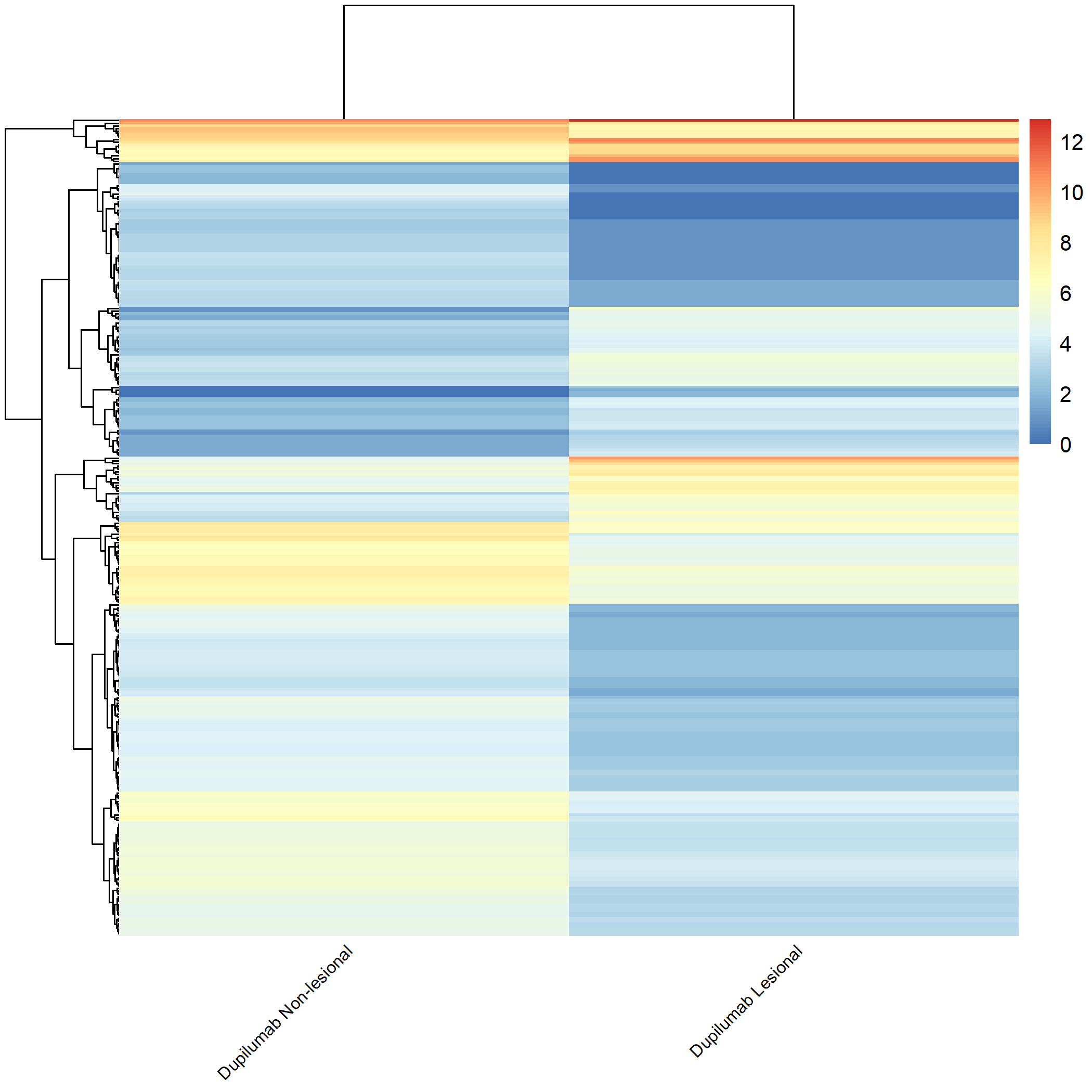

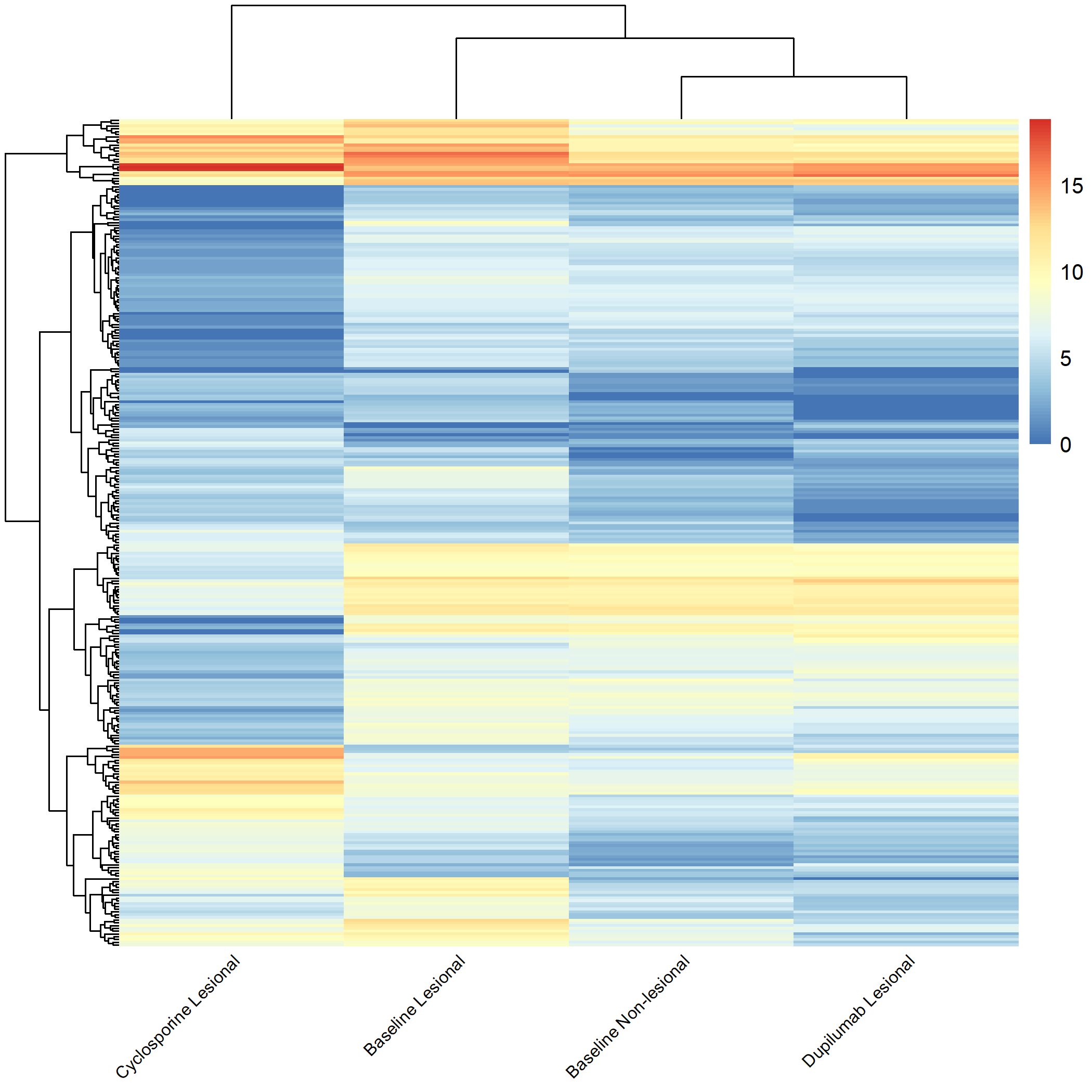

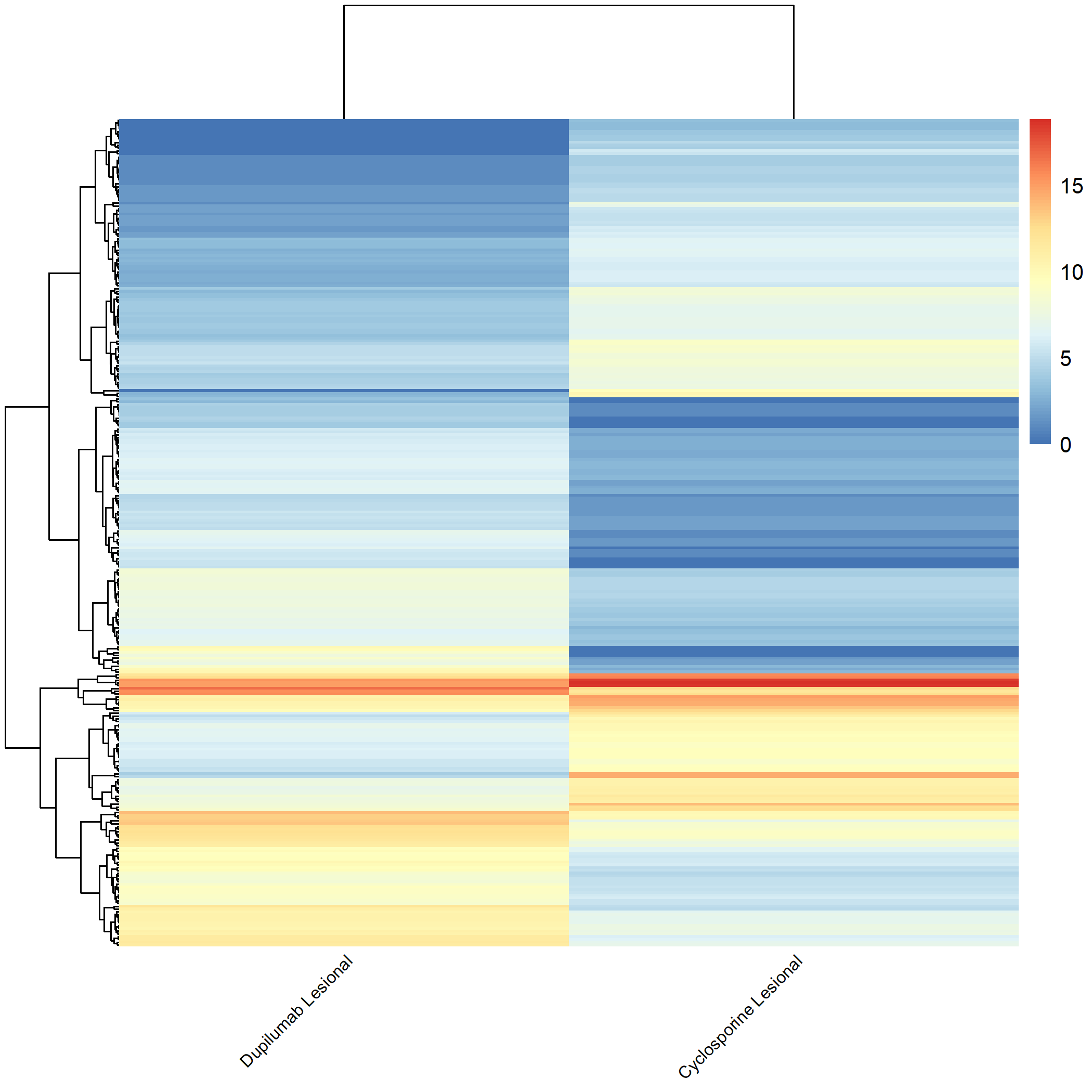

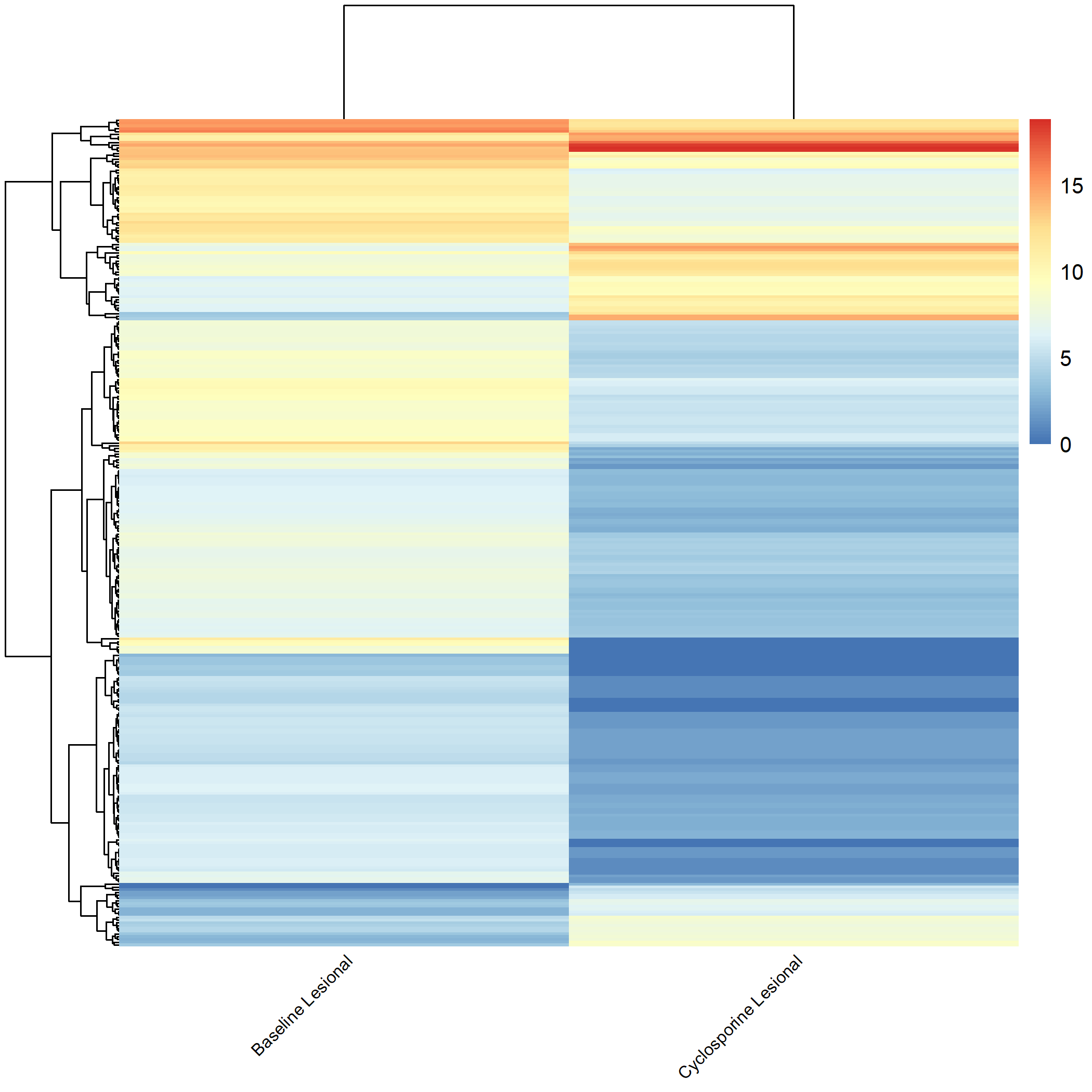

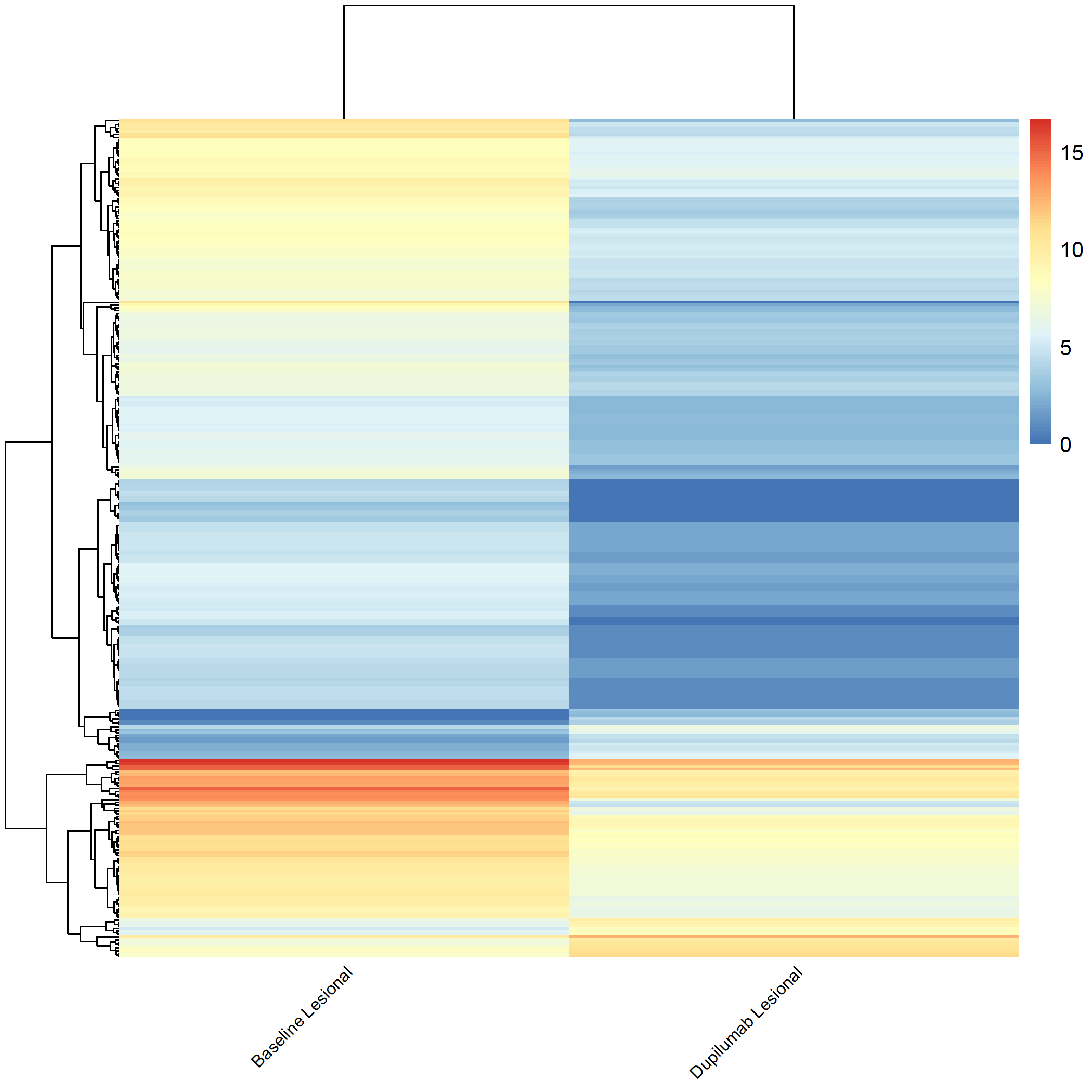

Code Block 3.2.5.3. Grouped Heatmap for All Groups

# =========== Load Required Libraries ===========

library(matrixStats)

library(pheatmap)

# =========== Step 1: Apply Log2 Transformation ===========

counts_matrix_log <- log2(as.matrix(counts_matrix) + 1)

# =========== Step 2: Extract Sample Grouping Information ===========

sample_groups <- metadata$source_name_ch1

# =========== Step 3: Define Descriptive Labels ===========

new_labels <- c(

"m0_AN" = "Baseline Non-lesional",

"cyclosporine_m3_AN" = "Cyclosporine Non-lesional",

"dupilumab_m3_AN" = "Dupilumab Non-lesional",

"m0_AL" = "Baseline Lesional",

"cyclosporine_m3_AL" = "Cyclosporine Lesional",

"dupilumab_m3_AL" = "Dupilumab Lesional"

)

# =================================================================================

# =========== Step 3.1: Select Groups for Analysis ===========

selected_groups <- c("m0_AN", "cyclosporine_m3_AN", "dupilumab_m3_AN", "m0_AL", "cyclosporine_m3_AL", "dupilumab_m3_AL")

# ===== we can replace the above selected_groups with one of the bellow =====

# 1. Heatmap for all groups:

# c("m0_AN", "cyclosporine_m3_AN", "dupilumab_m3_AN", "m0_AL", "cyclosporine_m3_AL", "dupilumab_m3_AL")

# 2. (Lesional vs. Non-lesional) Compare "Baseline Lesional" with "Baseline Non-lesional":

# c("m0_AN", "m0_AL")

# 3. (Lesional vs. Non-lesional) Compare Cyclosporine Lesional with Cyclosporine Non-lesional:

# c("cyclosporine_m3_AN", "cyclosporine_m3_AL")

# 4. (Lesional vs. Non-lesional) Compare Dupilumab Lesional with Dupilumab Non-lesional:

# c("dupilumab_m3_AN", "dupilumab_m3_AL")

# 5. (Therapy Comparisons) Compare Baseline Lesional, Cyclosporine Lesional, and Dupilumab Lesional (compared to Baseline Non-Lesional).

# c("m0_AN", "m0_AL", "cyclosporine_m3_AL", "dupilumab_m3_AL")

# 6. (Therapy Comparisons) Compare Dupilumab Lesional and Cyclosporine Lesional.

# c("dupilumab_m3_AL", "cyclosporine_m3_AL")

# 7. (Pre-treatment vs. Post-treatment) Compare Baseline Lesional & Cyclosporine Lesional.

# c("m0_AL", "cyclosporine_m3_AL")

# 8. (Pre-treatment vs. Post-treatment) Compare Baseline Lesional & Dupilumab Lesional.

# c("m0_AL", "dupilumab_m3_AL")

# =================================================================================

# =========== Step 4: Reorder Columns Based on Group Selection ===========

desired_order <- selected_groups

reordered_columns <- match(desired_order, sample_groups)

counts_matrix_log <- counts_matrix_log[, reordered_columns]

colnames(counts_matrix_log) <- new_labels[sample_groups[reordered_columns]]

# =========== Step 5: Calculate Top Variable Genes ===========

top_var_genes_log <- head(order(rowVars(counts_matrix_log), decreasing = TRUE), 300)

# =========== Step 6: Generate the Heatmap ===========

pheatmap(counts_matrix_log[top_var_genes_log, ],

cluster_rows = TRUE, cluster_cols = TRUE,

show_rownames = FALSE, show_colnames = TRUE,

angle = 45, vjust = 0.5, hjust = 0.6,

fontsize_col = 8,

filename = "Figures/3.2.5.Exploratory_Heatmaps/3.2.5.3.Grouped_Heatmap_All_Groups.png")

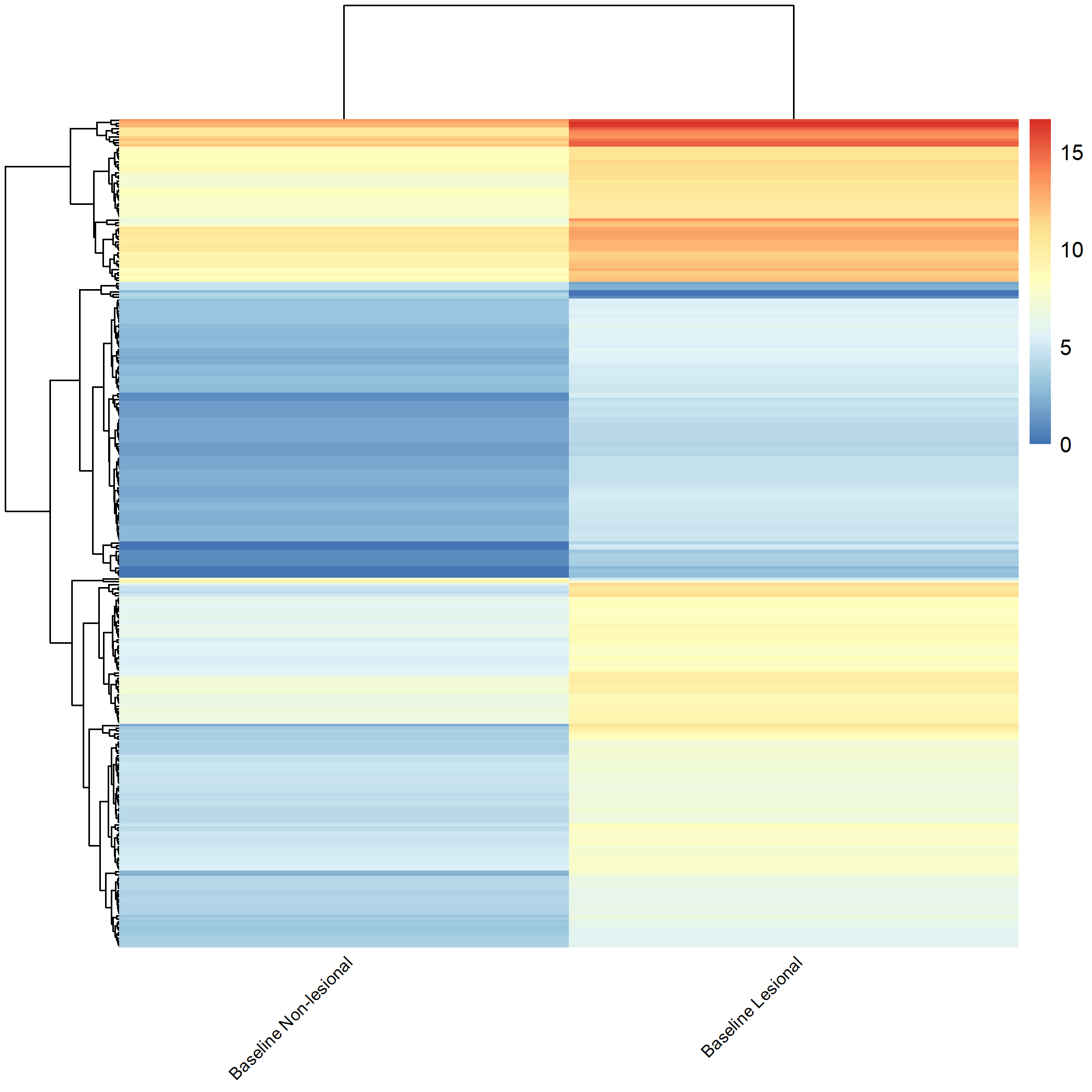

Code Block 3.2.5.4. Grouped Heatmap: Compare Baseline Lesional with Baseline Non-lesional

# ============================

selected_groups <- c("m0_AN", "m0_AL")

# ============================

Code Block 3.2.5.5. Grouped Heatmap: Compare Cyclosporine Lesional vs. Non-lesional

# ============================

selected_groups <- c("cyclosporine_m3_AN", "cyclosporine_m3_AL")

# ============================

Code Block 3.2.5.6. Grouped Heatmap: Compare Dupilumab Lesional vs. Dupilumab Non-lesional

# ============================

selected_groups <- c("dupilumab_m3_AN", "dupilumab_m3_AL")

# ============================

Code Block 3.2.5.7. Grouped Heatmap: Compare Baseline Non-Lesional vs. Baseline Lesional, Cyclosporine Lesional, and Dupilumab Lesional

# ============================

selected_groups <- c("m0_AN", "m0_AL", "cyclosporine_m3_AL", "dupilumab_m3_AL")

# ============================

Code Block 3.2.5.8. Grouped Heatmap: Compare Dupilumab Lesional vs. Cyclosporine Lesional

# ============================

selected_groups <- c("dupilumab_m3_AL", "cyclosporine_m3_AL")

# ============================

Code Block 3.2.5.9. Grouped Heatmap: Compare Pre-treatment vs. Post-treatment (Baseline Lesional & Cyclosporine Lesional)

# ============================

selected_groups <- c("m0_AL", "cyclosporine_m3_AL")

# ============================

Code Block 3.2.5.10. Grouped Heatmap: Compare Pre-treatment vs. Post-treatment (Baseline Lesional & Dupilumab Lesional)

# ============================

selected_groups <- c("m0_AL", "dupilumab_m3_AL")

# ============================

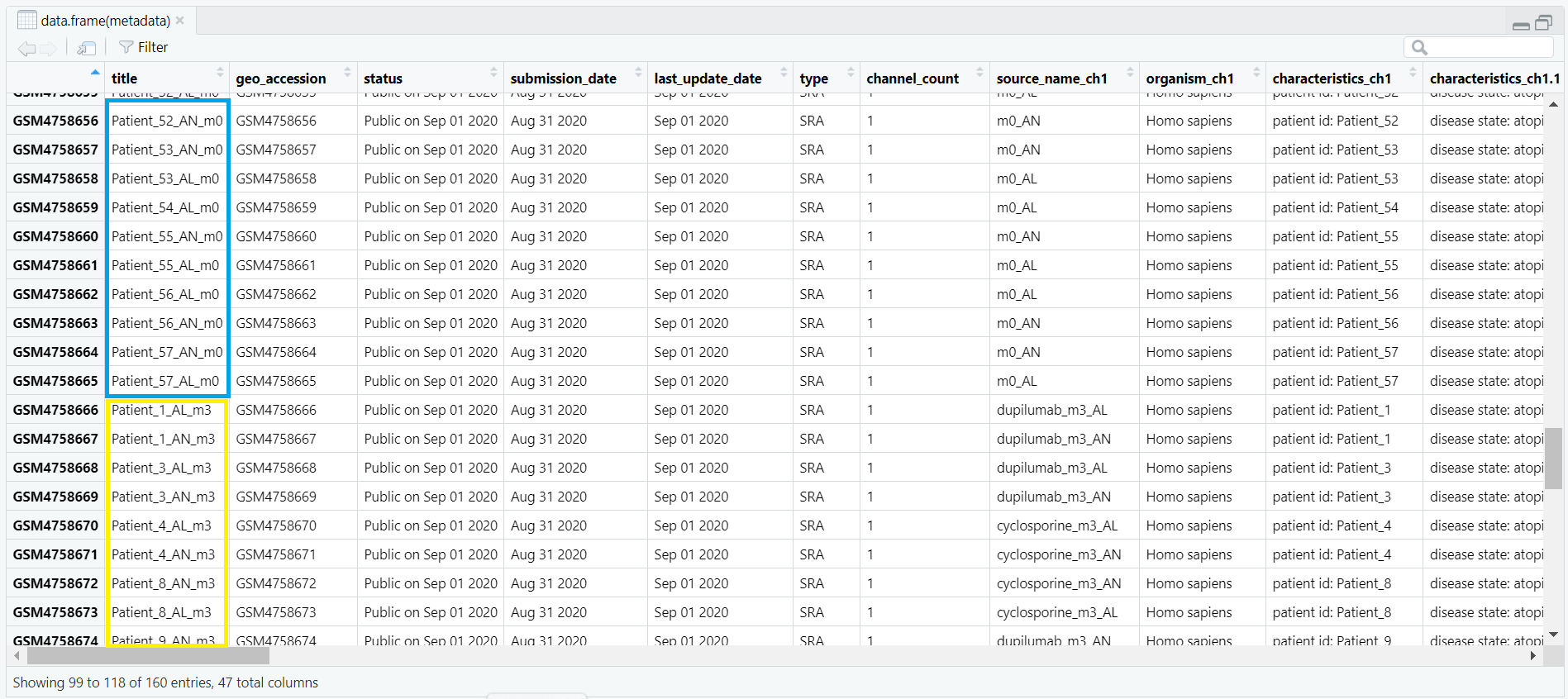

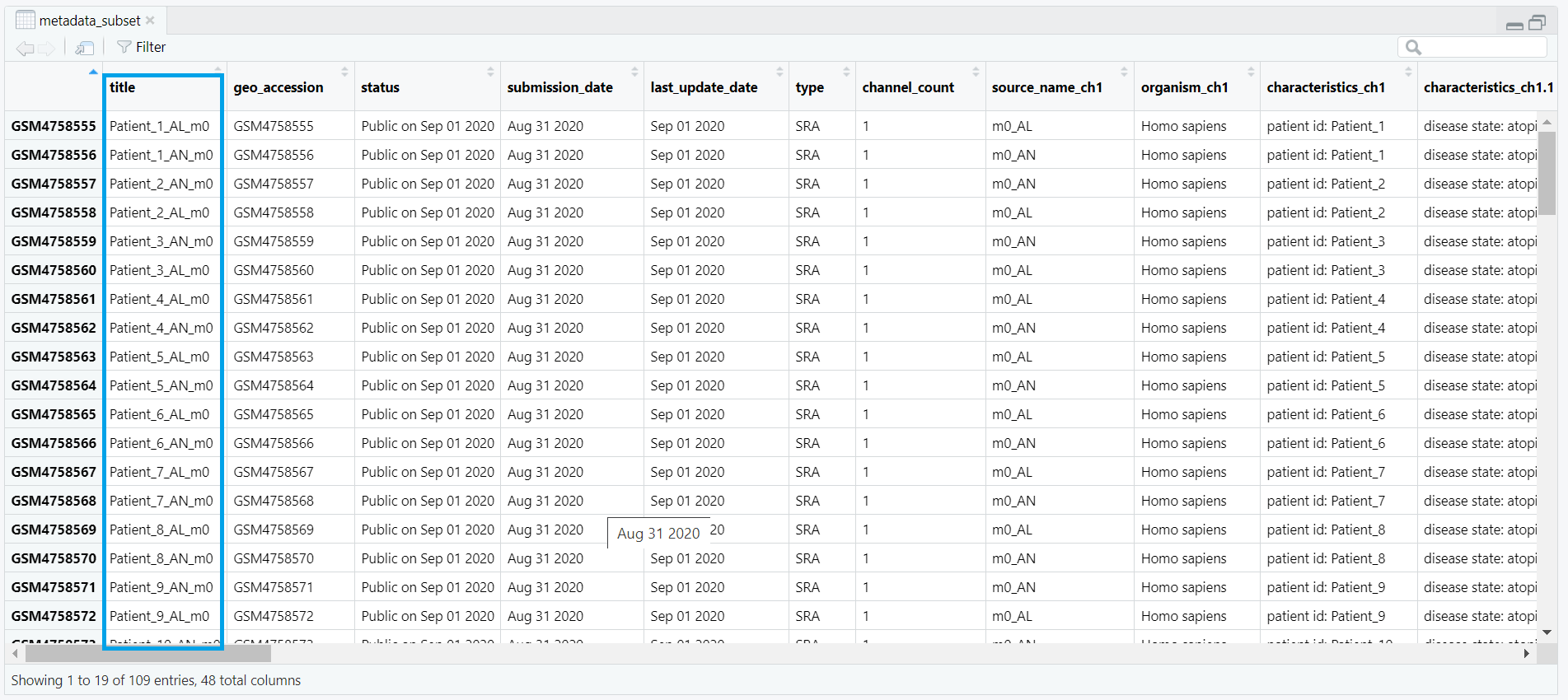

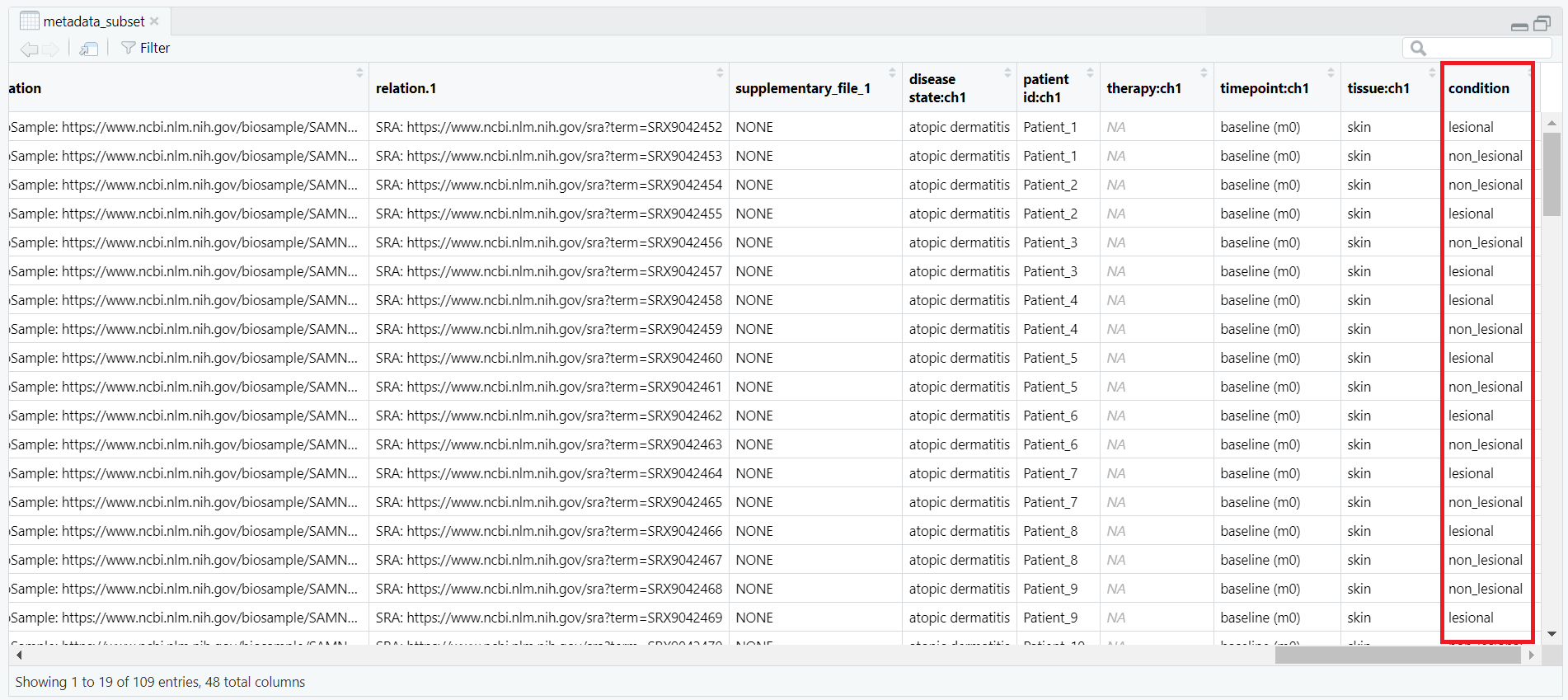

metadata data frame, keeping only rows where the value in the source_name_ch1 column is either "m0_AL" (lesional) or "m0_AN" (non-lesional). This ensures that only baseline samples are retained for analysis. metadata_subset <- metadata[metadata$source_name_ch1 %in% c("m0_AL", "m0_AN"), ]

counts_matrix) is filtered to keep only those columns whose names match the title values from metadata_subset. This ensures that the count data corresponds exactly to the subsetted metadata. keep <- intersect(colnames(counts_matrix), metadata_subset$title) counts_subset <- counts_matrix[, keep, drop = FALSE] metadata_subset so that its rows match the exact order of the columns in counts_subset. This step prevents potential mismatches during DESeq2 object creation. metadata_subset <- metadata_subset[match(colnames(counts_subset), metadata_subset$title), ] condition is created based on the source_name_ch1 values. Samples labeled m0_AL are assigned as "lesional", while m0_AN samples are labeled as "non_lesional". The condition is then converted into a factor with "non_lesional" set as the reference level for downstream DESeq2 differential expression analysis. metadata_subset$condition <- ifelse(metadata_subset$source_name_ch1 == "m0_AL", "lesional", "non_lesional") metadata_subset$condition <- factor(metadata_subset$condition, levels = c("non_lesional", "lesional")) condition, which classifies each sample as either lesional or non-lesional, as shown in Figure 4.3.

condition column, showing classification of each sample as lesional or non-lesional.

all(colnames(counts_subset) == metadata_subset$title) TRUE, the data are properly aligned and ready for DESeq2 object creation. Code Block 4. Filtering and Subsetting Samples for DESeq2 Analysis

# Step 4: Subset baseline lesional/non‑lesional (m0) and align with counts

# 4.1 keep only m0_AL / m0_AN

metadata_subset <- metadata[metadata$source_name_ch1 %in% c("m0_AL", "m0_AN"), ]

# 4.2 subset counts by titles (your counts colnames are titles)

keep <- intersect(colnames(counts_matrix), metadata_subset$title)

counts_subset <- counts_matrix[, keep, drop = FALSE]

# 4.3 reorder metadata to match counts columns

metadata_subset <- metadata_subset[match(colnames(counts_subset), metadata_subset$title), ]

# 4.4 condition factor

metadata_subset$condition <- ifelse(metadata_subset$source_name_ch1 == "m0_AL", "lesional", "non_lesional")

metadata_subset$condition <- factor(metadata_subset$condition, levels = c("non_lesional", "lesional"))

DESeqDataSet (dds). The following steps create this dataset object (dds) and verify that all required inputs are properly formatted.

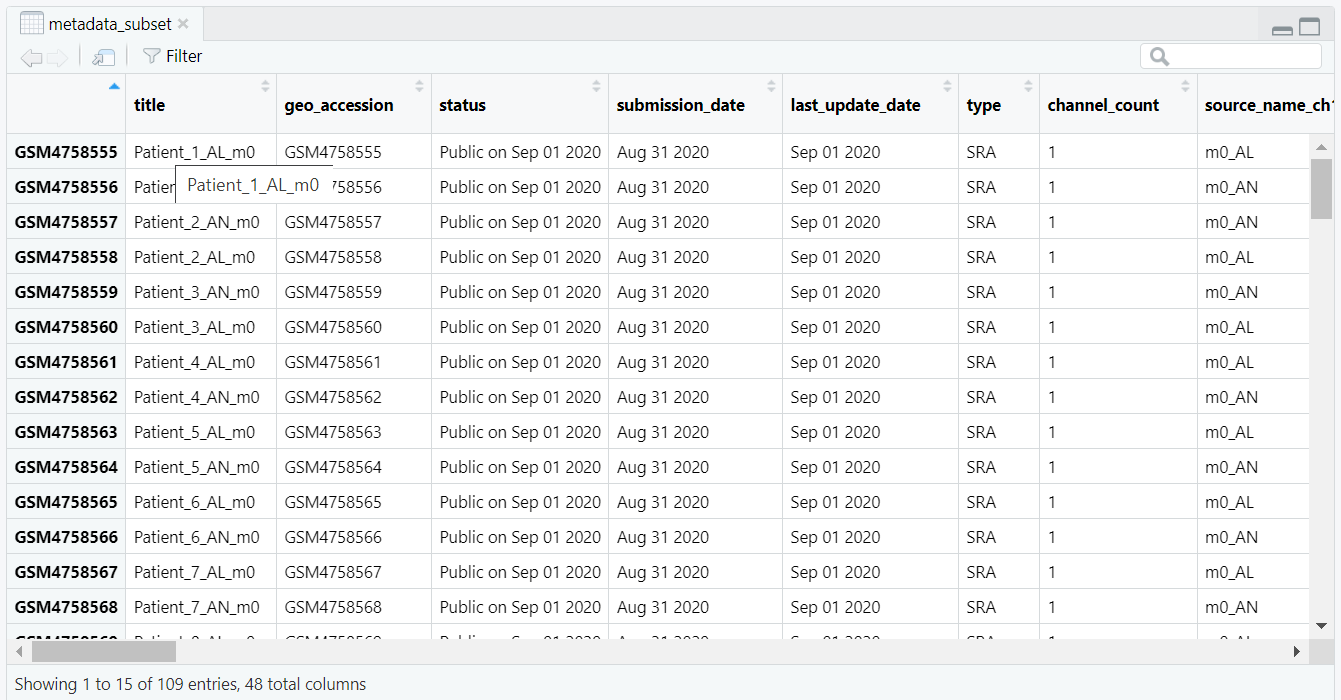

rownames(metadata_subset) <- metadata_subset$title then sets the row names of the metadata to the corresponding sample titles.

DESeq2 requires that each row of the metadata has a unique identifier matching the column names of the count matrix.

After this step, the metadata is correctly indexed by sample titles, as illustrated in Figure 5.2.

rownames(metadata_subset) <- metadata_subset$title

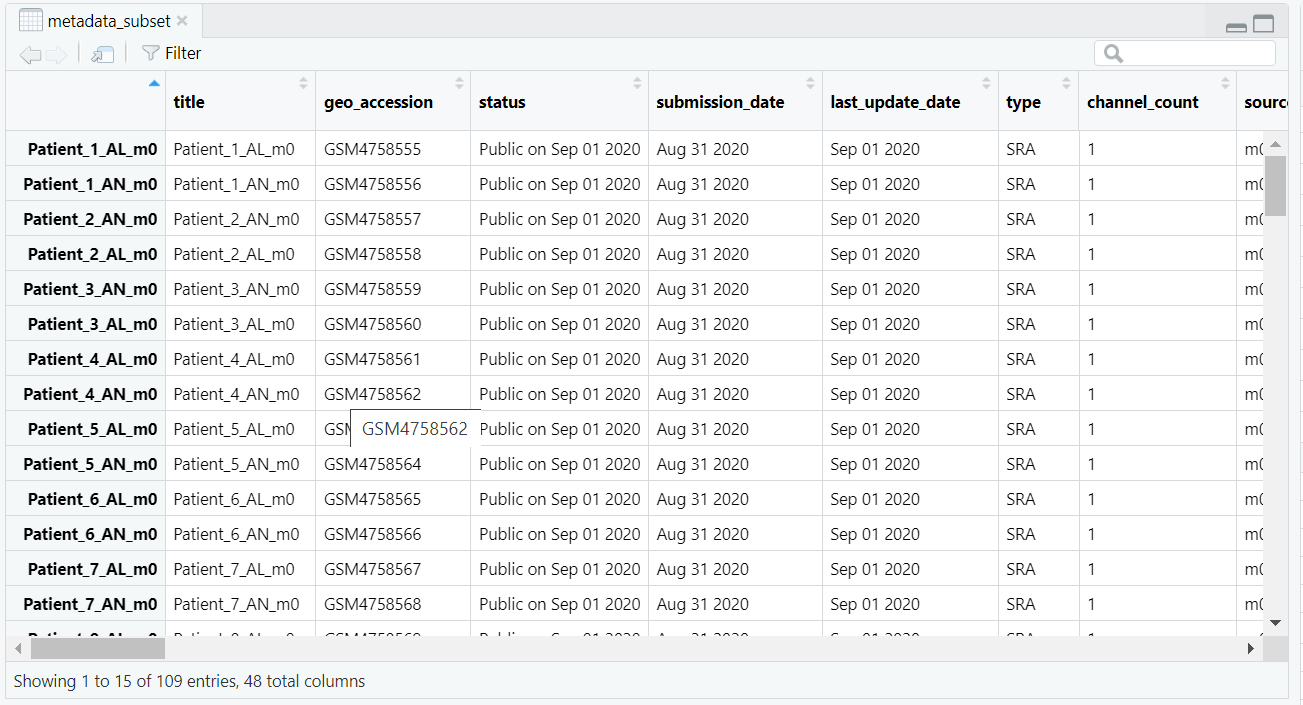

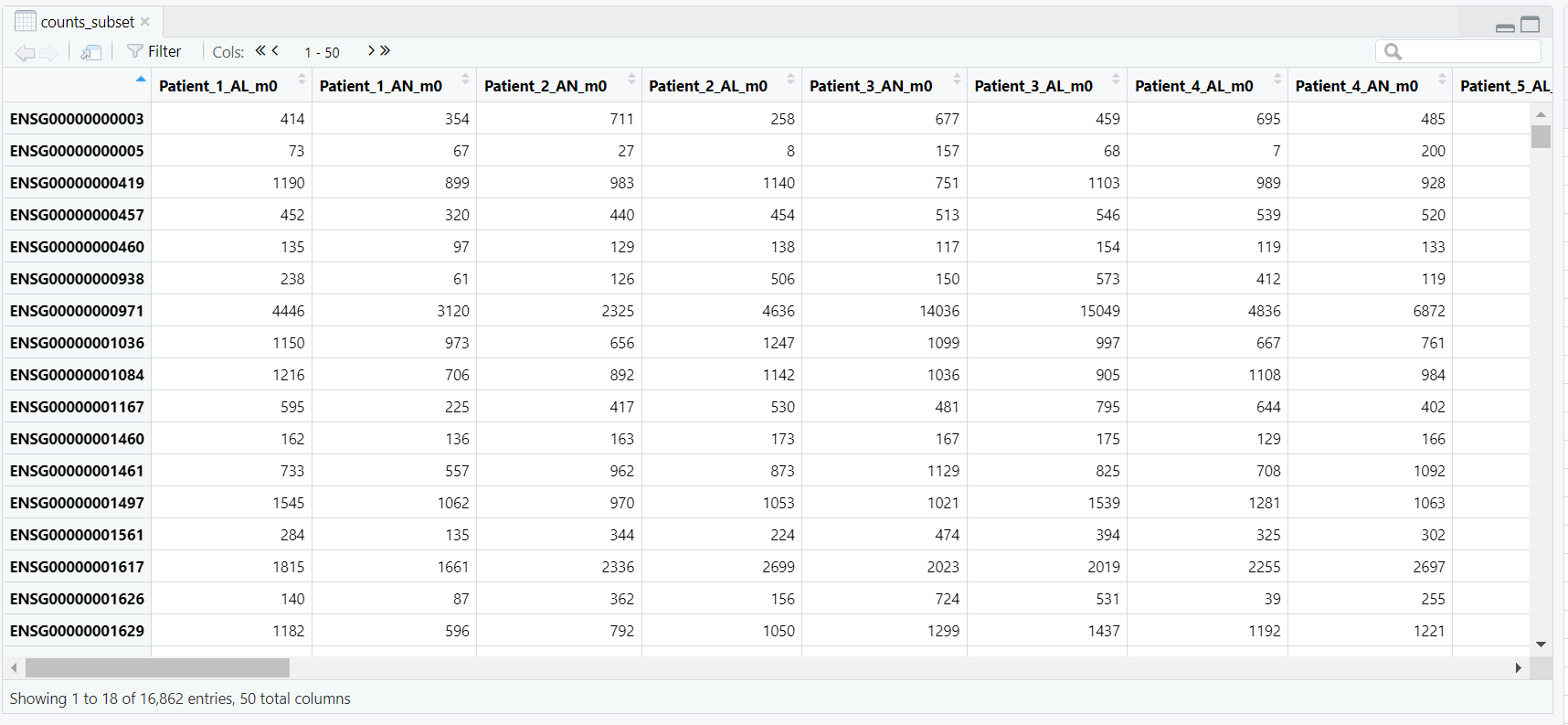

as.matrix() function converts the data frame to a base matrix, and storage.mode() explicitly sets the data type to integer. The resulting count matrix, now ready for DESeq2 input, is illustrated in Figure 5.2.

counts_subset <- as.matrix(counts_subset)

storage.mode(counts_subset) <- "integer"

DESeqDataSetFromMatrix() constructs the DESeq2 dataset. It takes three arguments:

countData — the integer matrix of gene counts (rows = genes, columns = samples) (Figure 5.3);colData — the metadata table containing sample information, including our condition variable; anddesign — a formula describing the experimental design, here ~ condition, which tells DESeq2 to model expression changes based on the lesional vs. non-lesional grouping.dds. The resulting DESeq2 dataset object contains multiple components such as the design formula, row ranges, assays, and sample metadata (colData), as illustrated in Figure 5.4.

dds <- DESeqDataSetFromMatrix(

countData = counts_subset,

colData = metadata_subset,

design = ~ condition

)

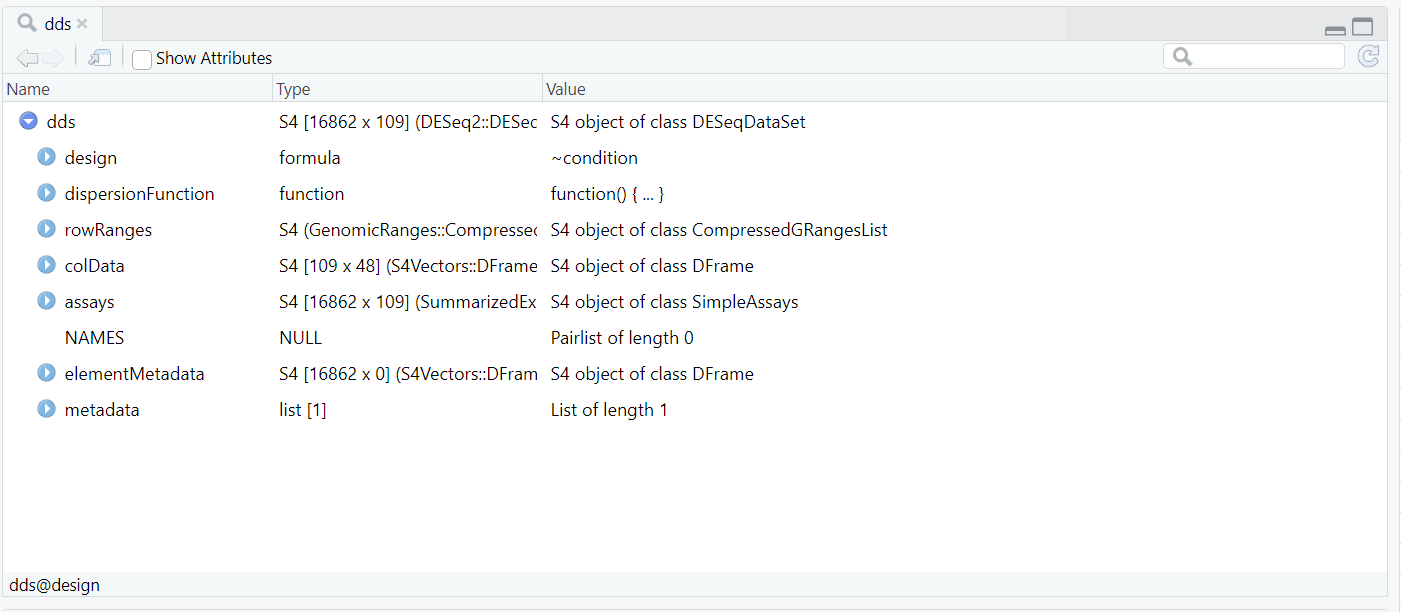

dds object created using DESeqDataSetFromMatrix(), showing key components such as the design formula, dispersion function, row ranges, assay data, and sample metadata (colData).

dds is an S4 object of class DESeqDataSet. It integrates gene-level counts and sample-level metadata into a single container. The main internal components include:

assays(dds) — the raw (and later normalized) count matrices;colData(dds) — sample annotations such as condition;rowRanges(dds) — genomic feature information for each gene; anddesign(dds) — the model formula ~ condition used in downstream analysis.Code Block 5.1.1. Creating the DESeq2 Dataset Object (dds)

# Step 5 – Create DESeq2 Dataset Object

# Step 5.1 — Assign row names to metadata

rownames(metadata_subset) <- metadata_subset$title

# Step 5.2 — Convert count matrix to integer mode

counts_subset <- as.matrix(counts_subset)

storage.mode(counts_subset) <- "integer"

# Step 5.3 — Create the DESeq2 dataset object

dds <- DESeqDataSetFromMatrix(

countData = counts_subset,

colData = metadata_subset,

design = ~ condition

)

DESeqDataSet object (dds). Earlier, in Section 3 (Exploratory Data Analysis — Before dds), we explored all samples to understand metadata structure, sample distributions, and the raw count matrix prior to modelling. Here, we shift focus to the selected analysis subset (baseline lesional and non-lesional samples) and perform post-dds quality checks to ensure the data are biologically consistent and free from technical artefacts or outliers before running DESeq().Code Block 6.1. Variance Stabilizing Transformation (VST) Applied to DESeq2 Dataset

# Apply variance stabilizing transformation (VST) to the DESeq2 dataset for downstream visualization

vsd <- vst(dds, blind=TRUE)

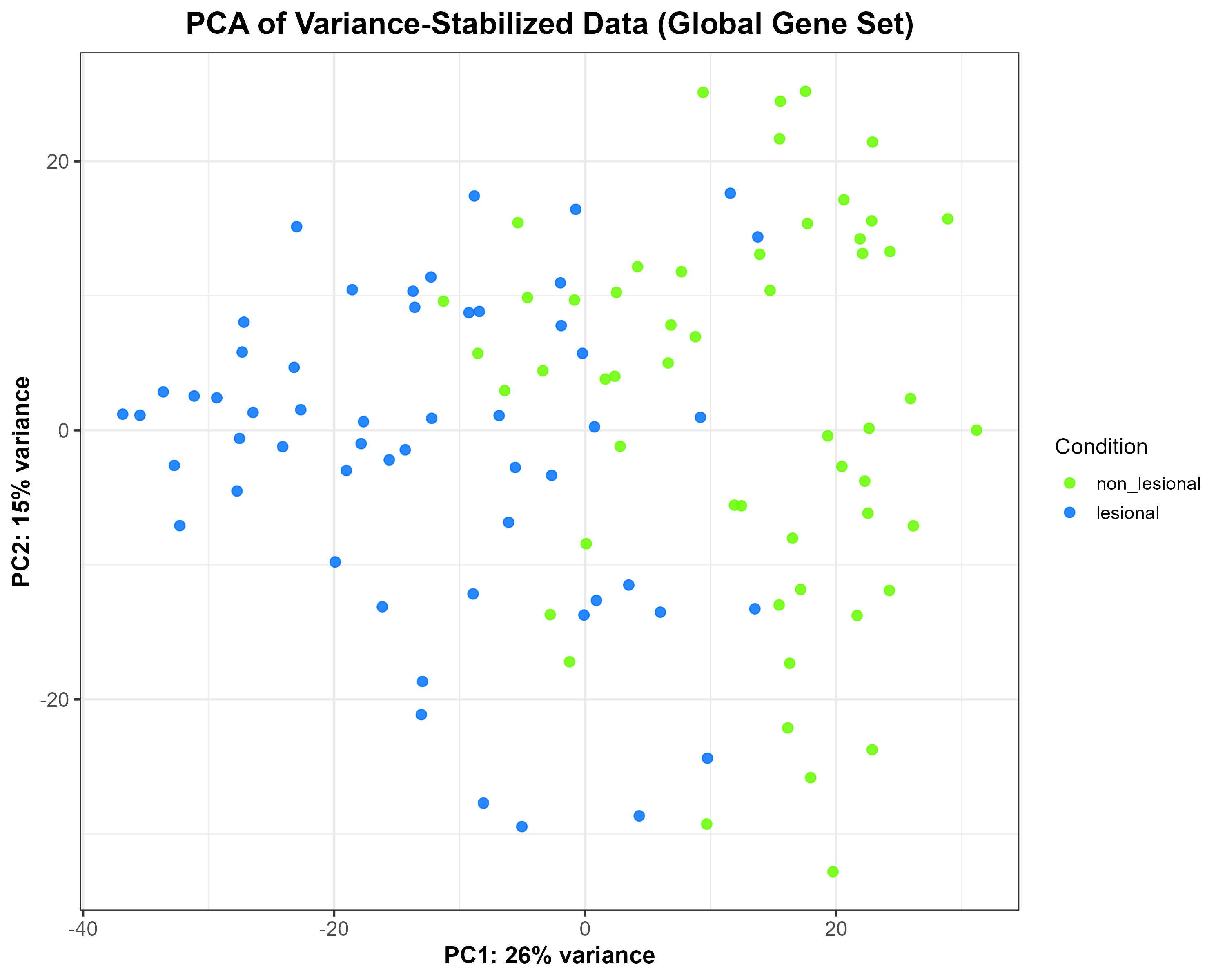

vsd), showing moderate separation between lesional and non-lesional samples along PC1 (26% variance) and PC2 (15% variance). Each point represents one sample, coloured according to condition.

Code Block 6.2. PCA Plot of Variance-Stabilized Data Grouped by Condition

# ==============================================================

# 6.2. PCA of Variance-Stabilized Data (Global Overview)

# ==============================================================

library(ggplot2)

library(DESeq2)

# Extract PCA data from the variance-stabilized matrix (vsd)

pca_df <- plotPCA(vsd, intgroup = "condition", returnData = TRUE)

percentVar <- round(100 * attr(pca_df, "percentVar"))

# Build PCA plot manually for full control over aesthetics

p_pca_global <- ggplot(pca_df, aes(x = PC1, y = PC2, colour = condition)) +

geom_point(size = 2.5, alpha = 0.85) +

scale_colour_manual(values = c("lesional" = "#0072FF",

"non_lesional" = "#66FF00")) +

xlab(paste0("PC1: ", percentVar[1], "% variance")) +

ylab(paste0("PC2: ", percentVar[2], "% variance")) +

labs(title = "PCA of Variance-Stabilized Data (Global Gene Set)",

colour = "Condition") +

theme_bw(base_size = 14) +

theme(

plot.title = element_text(size = 18, face = "bold", hjust = 0.5),

axis.title = element_text(size = 14, face = "bold"),

axis.text = element_text(size = 12),

legend.title = element_text(size = 13),

legend.text = element_text(size = 11)

)

# Save as high-resolution PNG

ggsave("C:/Users/YOUR/PATH/Figure_6.2.png",

plot = p_pca_global, width = 10, height = 8, dpi = 300)

~ patient + condition) to control for within-patient variability.

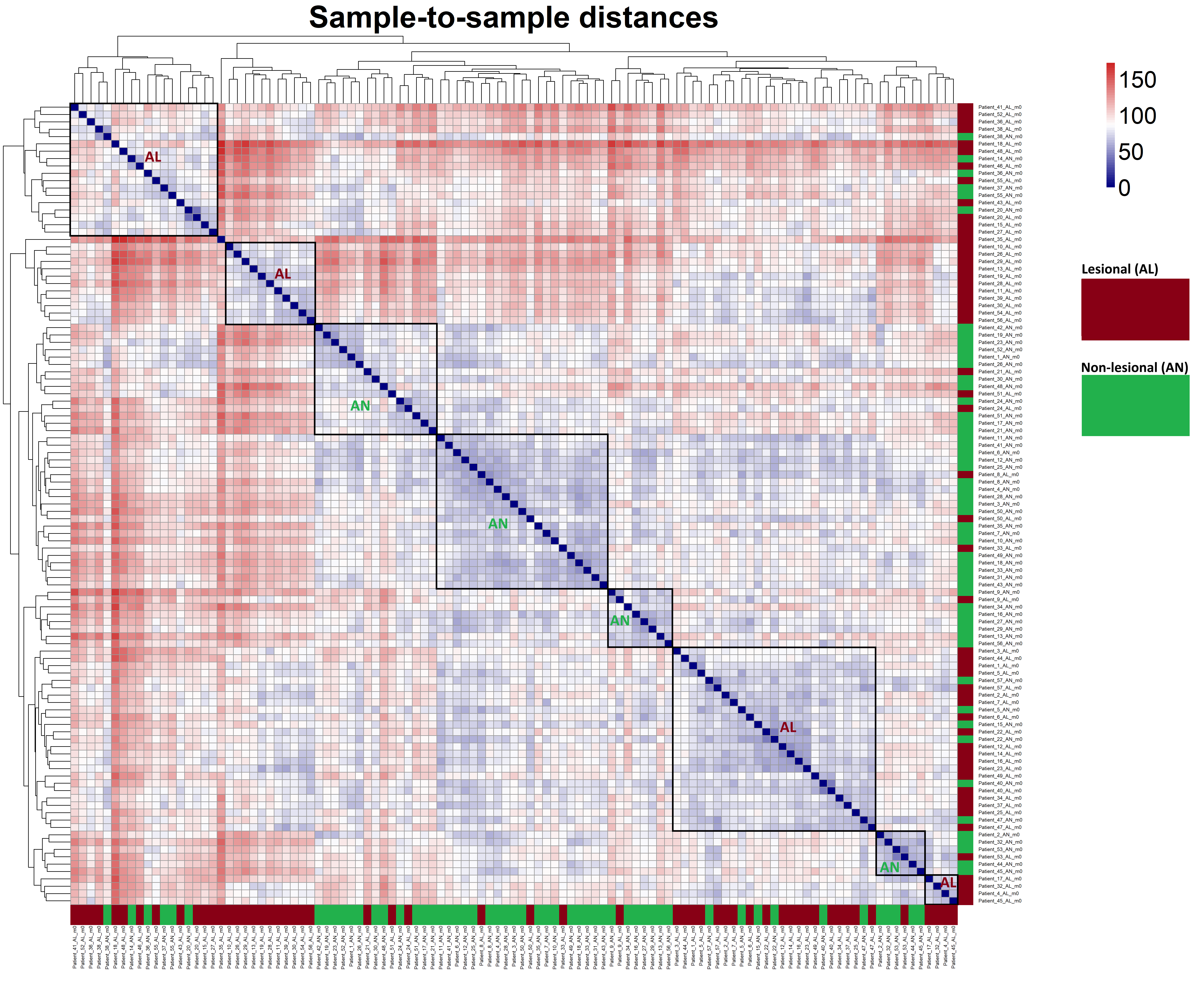

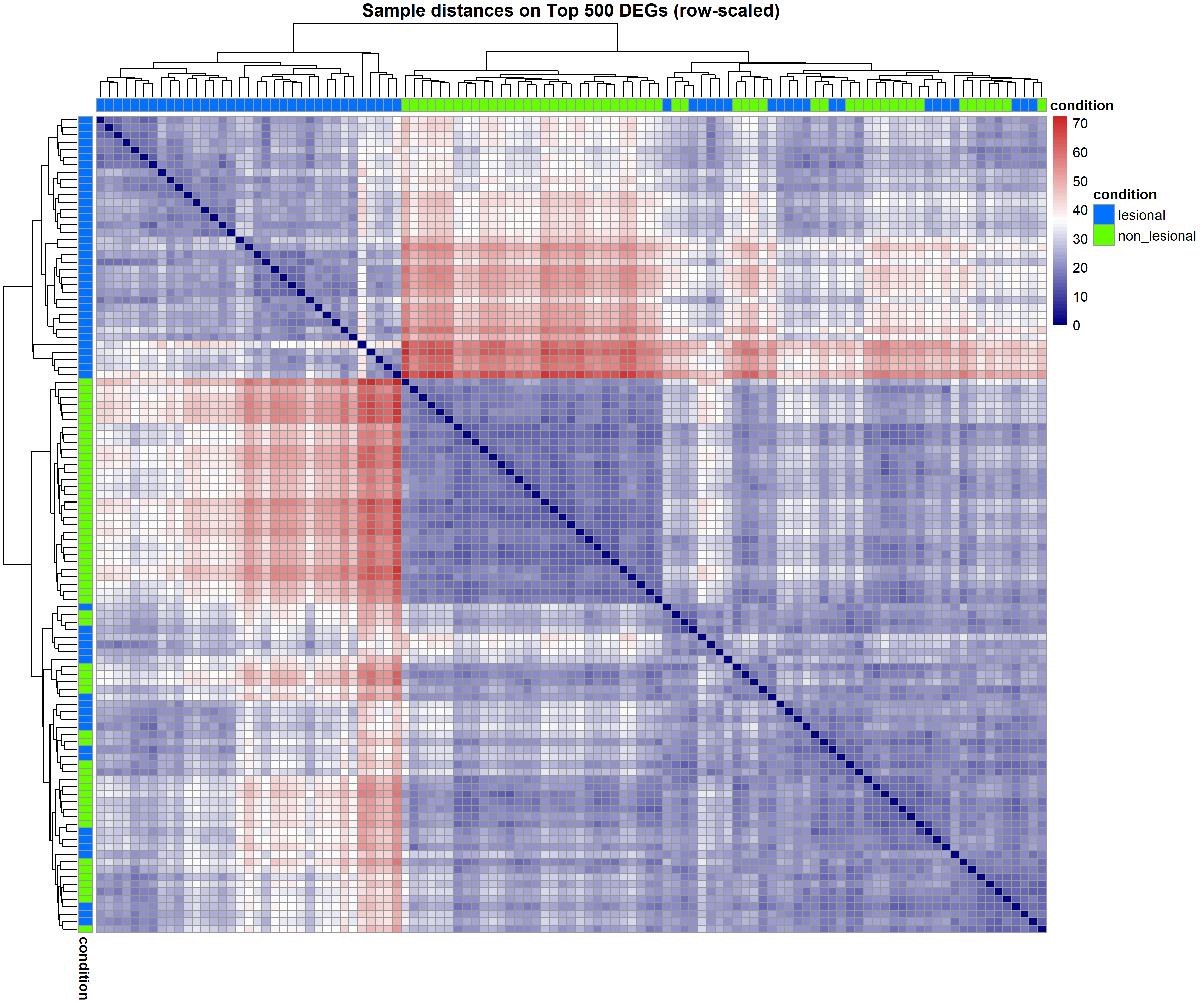

Code Block 6.4. Generate and Visualize Sample-to-Sample Distance Heatmap Using VST-Transformed Counts

# ==============================================================

# 6.4. Sample-to-Sample Distance Heatmap

# ==============================================================

# ========= Load Required Package =========

library(pheatmap)

# ========= Compute Pairwise Sample Distances =========

# Calculate distances between samples using VST-transformed counts.

# Transpose the assay matrix so that distance is computed across samples.

dists <- dist(t(assay(vsd)))

# Convert distance object to a full matrix for visualization

mat <- as.matrix(dists)

# ========= Generate and Save Heatmap =========

# Create a sample-to-sample distance heatmap and save as a high-resolution PNG.

# This heatmap helps visualize overall similarity patterns between samples.

pheatmap(

mat,

clustering_distance_rows = dists, # hierarchical clustering for rows

clustering_distance_cols = dists, # hierarchical clustering for columns

main = "Sample-to-sample distances", # heatmap title

color = colorRampPalette(c("navy", "white", "firebrick3"))(50), # blue-white-red color scale

# ========= Font and Label Settings =========

fontsize = 28, # controls general font size (including title)

fontsize_row = 6, # smaller font for row names

fontsize_col = 6, # smaller font for column names

angle_col = 90, # rotate column labels for better visibility

# ========= Dendrogram and Legend Settings =========

treeheight_row = 80, # row dendrogram height

treeheight_col = 80, # column dendrogram height

legend = TRUE, # display color legend

# ========= Display and Output Settings =========

show_rownames = TRUE, # show sample names on rows

show_colnames = TRUE, # show sample names on columns

filename = "C:/Users/omidm/OneDrive/Desktop/RNA_Seq_Task1/6.EDA/Figure_6.4.png", # output path

width = 18, height = 16 # figure size in inches for crisp rendering

)

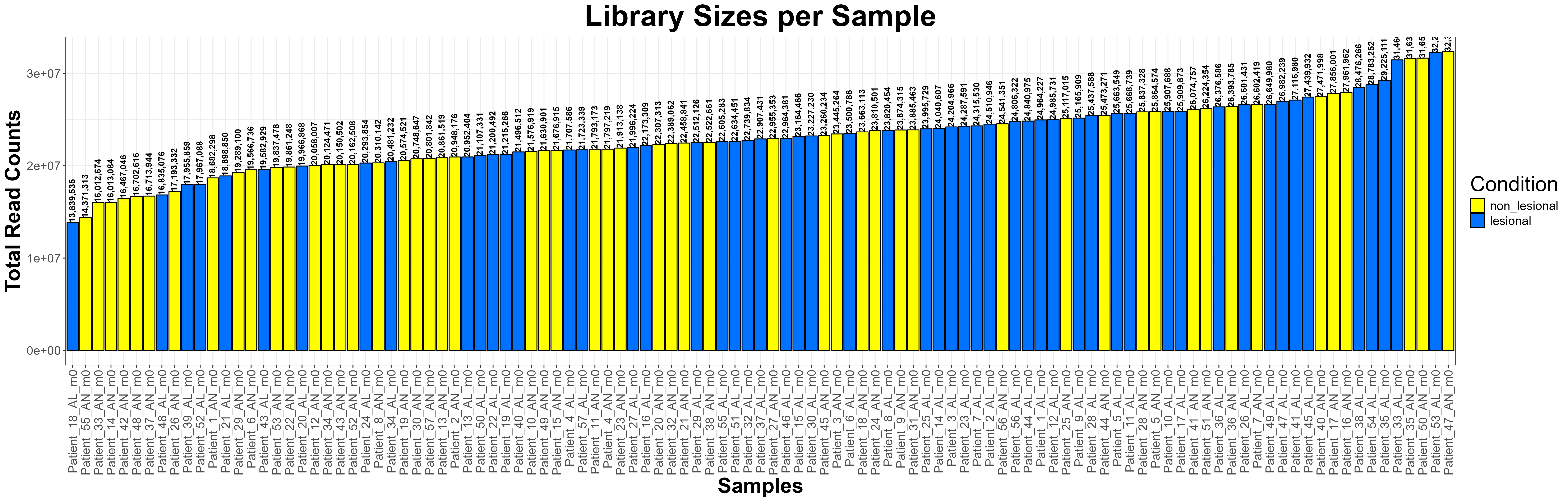

Code Block 6.5. Visualizing Library Sizes Across Samples Using ggplot2

# ==============================================================

# 6.5. Library Size Check (Enhanced Visualization)

# ==============================================================

library(ggplot2)

# Extract library sizes (total read counts per sample)

library_sizes <- colSums(counts(dds))

# Create a data frame for plotting

library_df <- data.frame(

Sample = names(library_sizes),

LibrarySize = library_sizes,

Condition = colData(dds)$condition # assumes condition column exists

)

# Order samples by library size for visual clarity

library_df$Sample <- factor(library_df$Sample, levels = library_df$Sample[order(library_df$LibrarySize)])

# Generate the bar plot

ggplot(library_df, aes(x = Sample, y = LibrarySize, fill = Condition)) +

geom_bar(stat = "identity", color = "black", width = 0.9) +

geom_text(aes(label = scales::comma(LibrarySize)),

vjust = 0.4, hjust= 0,size = 3.5, angle = 90, fontface = "bold") + # add labels

scale_fill_manual(values = c("lesional" = "#0072FF", "non_lesional" = "#FFFF00")) +

theme_bw() +

theme(

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1, size = 14),

axis.text.y = element_text(size = 14),

axis.title = element_text(size = 24, face = "bold"),

plot.title = element_text(size = 34, face = "bold", hjust = 0.5),

legend.title = element_text(size = 24),

legend.text = element_text(size = 14)

) +

labs(

title = "Library Sizes per Sample",

x = "Samples",

y = "Total Read Counts",

fill = "Condition"

)

# Optional: Save as a high-resolution PNG

ggsave("C:/Users/omidm/OneDrive/Desktop/RNA_Seq_Task1/6.EDA/Figure_6.5.png",

width = 25, height = 8, dpi = 300)

ggplot2 for building publication-quality figures and reshape2 for converting matrices/data frames into a long format that ggplot understands. This keeps the plotting pipeline tidy and consistent across both approaches.

library(ggplot2)

library(reshape2)

DESeqDataSet. At this stage nothing is normalised; the values reflect the number of reads assigned to each gene per sample.

raw_counts <- counts(dds)

log_counts <- log2(raw_counts + 1)

log_counts_df <- as.data.frame(log_counts)

log_counts_df$Gene <- rownames(log_counts_df)

log_counts_long <- melt(

log_counts_df,

id.vars = "Gene",

variable.name = "Sample",

value.name = "Log2Counts"

)

log_counts_long$Condition <- colData(dds)$condition[

match(log_counts_long$Sample, rownames(colData(dds)))

]

log₂(counts + 1): We plot the log₂ counts per sample with boxes coloured by condition. Outliers are shown lightly to reduce clutter on wide plots. Labels and theme choices aim for legibility with many samples.

p1 <- ggplot(log_counts_long, aes(x = Sample, y = Log2Counts, fill = Condition)) +

geom_boxplot(outlier.alpha = 0.1, lwd = 0.3) +

scale_fill_manual(values = c("lesional" = "#0072FF", "non_lesional" = "#FFFF00")) +

labs(

title = "Pre-normalization Count Distributions (log2(counts + 1))",

x = "Samples",

y = "Log2 Expression (Raw Counts)",

fill = "Condition"

) +

theme_bw() +

theme(

plot.title = element_text(size = 16, face = "bold", hjust = 0.5),

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1, size = 6),

axis.title = element_text(size = 12, face = "bold"),

legend.title= element_text(size = 10),

legend.text = element_text(size = 9)

)

ggsave("C:/Users/YOUR/PATH/Figure_6.6.1.png",

plot = p1, width = 18, height = 8, dpi = 300)

vsd with vst(dds, blind = TRUE), we pull the variance-stabilised matrix. VST removes library-size effects and stabilises variance across the mean, making samples comparable.

vst_mat <- assay(vsd)

vst_df <- as.data.frame(vst_mat)

vst_df$Gene <- rownames(vst_df)

vst_long <- melt(

vst_df,

id.vars = "Gene",

variable.name = "Sample",

value.name = "VST"

)

vst_long$Condition <- colData(dds)$condition[

match(vst_long$Sample, rownames(colData(dds)))

]

sample_order <- aggregate(VST ~ Sample, data = vst_long, median)

vst_long$Sample <- factor(vst_long$Sample,

levels = sample_order$Sample[order(sample_order$VST)])

global_median <- median(vst_long$VST, na.rm = TRUE)

p2 <- ggplot(vst_long, aes(x = Sample, y = VST, fill = Condition)) +

geom_boxplot(outlier.alpha = 0.1, lwd = 0.3) +

geom_hline(yintercept = global_median, linetype = "dashed", linewidth = 0.4, alpha = 0.6) +

scale_fill_manual(values = c("lesional" = "#0072FF", "non_lesional" = "#FFFF00")) +

labs(

title = "Post-normalization Count Distributions (VST)",

x = "Samples (ordered by median VST)",

y = "VST Expression (log2-like Units)",

fill = "Condition"

) +

theme_bw() +

theme(

plot.title = element_text(size = 16, face = "bold", hjust = 0.5),

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1, size = 6),

axis.title = element_text(size = 12, face = "bold"),

legend.title= element_text(size = 10),

legend.text = element_text(size = 9)

)

ggsave("C:/Users/YOUR/PATH/Figure_6.6.2.png",

plot = p2, width = 18, height = 8, dpi = 300)

Code Block 6.6.Inspecting Count Distributions — Comparing Raw and VST-Transformed Data

# ==============================================================

# 6.6. Count Distribution — Pre- and Post-Normalization Comparison

# ==============================================================

library(ggplot2)

library(reshape2)

# -------------------------

# Step 1: Raw log2(counts + 1)

# -------------------------

# Extract raw counts from DESeqDataSet

raw_counts <- counts(dds)

# Log2 transform with +1 to avoid log(0)

log_counts <- log2(raw_counts + 1)

# Convert to data frame (samples as columns)

log_counts_df <- as.data.frame(log_counts)

log_counts_df$Gene <- rownames(log_counts_df)

# Melt to long format for ggplot2

log_counts_long <- melt(log_counts_df, id.vars = "Gene",

variable.name = "Sample", value.name = "Log2Counts")

# Add condition information

log_counts_long$Condition <- colData(dds)$condition[match(log_counts_long$Sample, rownames(colData(dds)))]

# Create pre-normalization boxplot

p1 <- ggplot(log_counts_long, aes(x = Sample, y = Log2Counts, fill = Condition)) +

geom_boxplot(outlier.alpha = 0.1, lwd = 0.3) +

scale_fill_manual(values = c("lesional" = "#0072FF", "non_lesional" = "#FFFF00")) +

labs(

title = "Pre-normalization Count Distributions (log2(counts + 1))",

x = "Samples",

y = "Log2 Expression (Raw Counts)",

fill = "Condition"

) +

theme_bw() +

theme(

plot.title = element_text(size = 16, face = "bold", hjust = 0.5),

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1, size = 6),

axis.title = element_text(size = 12, face = "bold"),

legend.title= element_text(size = 10),

legend.text = element_text(size = 9)

)

# Save pre-normalization plot

ggsave("C:/Users/YOUR/PATH/ / / /Figure_6.6.1_PreNormalization.png",

plot = p1, width = 18, height = 8, dpi = 300)

# -------------------------

# Step 2: Post-normalization (VST)

# -------------------------

# Compute variance-stabilized data if not already done

# vsd <- vst(dds, blind = TRUE)

vst_mat <- assay(vsd)

# Convert to data frame for ggplot2

vst_df <- as.data.frame(vst_mat)

vst_df$Gene <- rownames(vst_df)

# Melt to long format

vst_long <- melt(vst_df, id.vars = "Gene",

variable.name = "Sample", value.name = "VST")

# Add condition info

vst_long$Condition <- colData(dds)$condition[match(vst_long$Sample, rownames(colData(dds)))]

# Compute median per sample for ordering

sample_order <- aggregate(VST ~ Sample, data = vst_long, median)

vst_long$Sample <- factor(vst_long$Sample, levels = sample_order$Sample[order(sample_order$VST)])

# Global median line

global_median <- median(vst_long$VST, na.rm = TRUE)

# Create post-normalization boxplot

p2 <- ggplot(vst_long, aes(x = Sample, y = VST, fill = Condition)) +

geom_boxplot(outlier.alpha = 0.1, lwd = 0.3) +

geom_hline(yintercept = global_median, linetype = "dashed", linewidth = 0.4, alpha = 0.6) +

scale_fill_manual(values = c("lesional" = "#0072FF", "non_lesional" = "#FFFF00")) +

labs(

title = "Post-normalization Count Distributions (VST)",

x = "Samples (ordered by median VST)",

y = "VST Expression (log2-like Units)",

fill = "Condition"

) +

theme_bw() +

theme(

plot.title = element_text(size = 16, face = "bold", hjust = 0.5),

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1, size = 6),

axis.title = element_text(size = 12, face = "bold"),

legend.title= element_text(size = 10),

legend.text = element_text(size = 9)

)

# Save post-normalization plot

ggsave("C:/Users/YOUR/PATHS/Figure_6.6.2_PostNormalization.png",

plot = p2, width = 18, height = 8, dpi = 300)

DESeq() on the prepared dds object. DESeq2 estimates size factors, dispersions, and fits a negative binomial GLM per gene based on the design (~ condition). The fitted model is then used for statistical testing in the next steps. Code Block 7.1. Run DESeq to estimate size factors, dispersions, and fit the GLM

# ==============================================================

# 7.1. Fit the DESeq2 model

# ==============================================================

# The DESeq() function performs:

# - normalization (size factors)

# - dispersion estimation per gene

# - negative binomial GLM fitting

dds <- DESeq(dds)

Code Block 7.2. Get results for the target contrast and select significant DEGs

# ==============================================================

# 7.2. Results extraction and significance filtering

# ==============================================================

# Contrast definition: log2(lesional / non_lesional)

res <- results(dds, contrast = c("condition", "lesional", "non_lesional"))

# Define thresholds

alpha <- 0.05 # adjusted p-value cutoff

lfc_cut <- 1 # absolute log2FC threshold

# Filter significant genes

res_sig <- res[

!is.na(res$padj) &

res$padj < alpha &

abs(res$log2FoldChange) >= lfc_cut,

]

# Summarize and print number of DEGs

summary(res)

cat("Significant genes (padj <", alpha, ", |log2FC| >=", lfc_cut, "):",

nrow(res_sig), "\n")

#======================== Output: ==================

out of 16862 with nonzero total read count

adjusted p-value < 0.1

LFC > 0 (up) : 5085, 30%

LFC < 0 (down) : 4924, 29%

outliers [1] : 0, 0%

low counts [2] : 0, 0%

(mean count < 9)

[1] see 'cooksCutoff' argument of ?results

[2] see 'independentFiltering' argument of ?results

Significant genes (padj < 0.05 , |log2FC| >= 1 ): 515

apeglm shrinkage stabilizes effect sizes while keeping direction and relative ranking useful for interpretation and plotting. We identify the correct coefficient name from resultsNames(dds), apply lfcShrink(), then reapply the same significance thresholds to obtain a shrunk set of DEGs. Code Block 7.3. LFC shrinkage with apeglm and re-filter significant DEGs

# ==============================================================

# 7.3. LFC shrinkage with apeglm

# ==============================================================

# Load required library

library(apeglm)

# Check available coefficients to locate the target contrast

resultsNames(dds)

# Identify coefficient for "lesional vs non_lesional"

coef_name <- resultsNames(dds)[

grep("condition_lesional_vs_non_lesional", resultsNames(dds))

]

# Perform DE analysis (non-shrunk)

res <- results(dds, contrast = c("condition", "lesional", "non_lesional"))

# Apply shrinkage

res_shrunk <- lfcShrink(dds, coef = coef_name, type = "apeglm")

# Filter significant genes using the same thresholds

alpha <- 0.05

lfc_cut <- 1

res_sig <- res_shrunk[

!is.na(res_shrunk$padj) &

res_shrunk$padj < alpha &

abs(res_shrunk$log2FoldChange) >= lfc_cut,

]

# Summary

summary(res)

cat("Significant genes after shrinkage:",

nrow(res_sig), "\n")

# ----------------- Output --------------------

out of 16862 with nonzero total read count

adjusted p-value < 0.1

LFC > 0 (up) : 5085, 30%

LFC < 0 (down) : 4924, 29%

outliers [1] : 0, 0%

low counts [2] : 0, 0%

(mean count < 9)

[1] see 'cooksCutoff' argument of ?results

[2] see 'independentFiltering' argument of ?results

Significant genes: 515

adjusted P value and |log2FC|); constructs a tidy results data frame from the shrunken DESeq2 results; brings the VST expression matrix into scope for plotting; and prepares helper functions to map Ensembl gene IDs to human gene symbols. Keeping these shared pieces at the top prevents duplication and makes it easy to change a setting (for example, the colour for “lesional” or the |log2FC| cut-off) without chasing it through multiple blocks.suppressPackageStartupMessages({ ... }) to keep the console clean. DESeq2 supplies assay(vsd) and the shrunken results object we created in Section 7. ggplot2 is used for the PCA, volcano, and density figures. pheatmap builds the heatmaps; RColorBrewer provides palettes for them. reshape2 contributes melt() when we reshape matrices to long format for ggplot. dplyr powers the data-wrangling verbs (mutate(), filter(), case_when(), relocate()). readr writes the CSVs. Finally, AnnotationDbi + org.Hs.eg.db perform the Ensembl→HGNC symbol mapping used in plot labels and exported tables.out_dir defines where Section-8 outputs are saved and is created if missing; cond_cols fixes a single, consistent mapping from condition values to colours. The shared thresholds alpha (adjusted P value) and lfc_cut (absolute log2 fold change) are declared once and reused everywhere so filtering logic is consistent across the section.res_df is the tidy data frame most plots read from. We start by coercing the shrunken DESeq2 results (res_shrunk) to a data frame, then add a gene column from the row names (mutate(gene = rownames(...))). We remove rows without an adjusted P value (filter(!is.na(padj))). Next, case_when() creates a simple categorical status: “Up” if padj < alpha and log2FoldChange >= lfc_cut; “Down” if padj < alpha and log2FoldChange <= -lfc_cut; otherwise “NS” (not significant). This three-way label drives the volcano plot colouring and helps with quick summaries.vsd_mat <- assay(vsd) pulls in the variance-stabilised expression matrix (rows = genes, columns = samples) prepared earlier; it’s the common input for the DEG-focused PCA, distance heatmaps, and expression density panels. For human-readable labels, the mapping helpers strip Ensembl version suffixes (strip_ver() turns ENSG000001234.5 into ENSG000001234) and then look up symbols with AnnotationDbi::mapIds() from org.Hs.eg.db. The wrapper ens_to_symbol() converts any vector of Ensembl IDs to symbols, falling back to the original Ensembl ID if a symbol is unavailable. Finally, we append a gene_symbol column to res_df so symbols are available to every downstream plot and export without repeating the mapping step.Code Block 8.0. Setup & Common Helpers — libraries, thresholds, results frame, VST matrix, and ID mapping

# ==================================================================

# 8.0 Setup & Common Helpers

# ==================================================================

suppressPackageStartupMessages({

library(DESeq2)

library(ggplot2)

library(pheatmap)

library(RColorBrewer)

library(reshape2)

library(dplyr)

library(readr)

library(AnnotationDbi) # mapping

library(org.Hs.eg.db) # human gene symbols

})

# ============= Output & Colors =============

out_dir <- "C:/Users/omidm/OneDrive/Desktop/RNA_Seq_Task1/8.DEG_Visualization"

if (!dir.exists(out_dir)) dir.create(out_dir, recursive = TRUE)

# Use one consistent palette everywhere

cond_cols <- c("lesional" = "#0072FF", "non_lesional" = "#66ff00")

# ============= Shared thresholds =============

alpha <- 0.05

lfc_cut <- 1

# ============= Results frame from Section 7 (with shrinkage) =============

# Expects 'res_shrunk' from Section 7.

res_df <- as.data.frame(res_shrunk) %>%

mutate(gene = rownames(res_shrunk)) %>%

filter(!is.na(padj)) %>%

mutate(

sig = case_when(

padj < alpha & log2FoldChange >= lfc_cut ~ "Up",

padj < alpha & log2FoldChange <= -lfc_cut ~ "Down",

TRUE ~ "NS"

)

)

# ============= VST matrix from Section 6 =============

vsd_mat <- assay(vsd)

# ============= Ensembl -> HGNC mapping helpers =============

strip_ver <- function(x) sub("\\..*$", "", x) # remove version suffixes

all_ens <- unique(c(rownames(vsd_mat), res_df$gene))

sym_map <- AnnotationDbi::mapIds(

org.Hs.eg.db,

keys = unique(strip_ver(all_ens)),

column = "SYMBOL",

keytype = "ENSEMBL",

multiVals = "first"

)

ens_to_symbol <- function(ids) {

ids_stripped <- strip_ver(ids)

syms <- unname(sym_map[ids_stripped])

syms[is.na(syms) | syms == ""] <- ids[is.na(syms) | syms == ""]

syms

}

# Also store symbols on the results frame for later plots/tables

res_df <- res_df %>% mutate(gene_symbol = ens_to_symbol(gene))

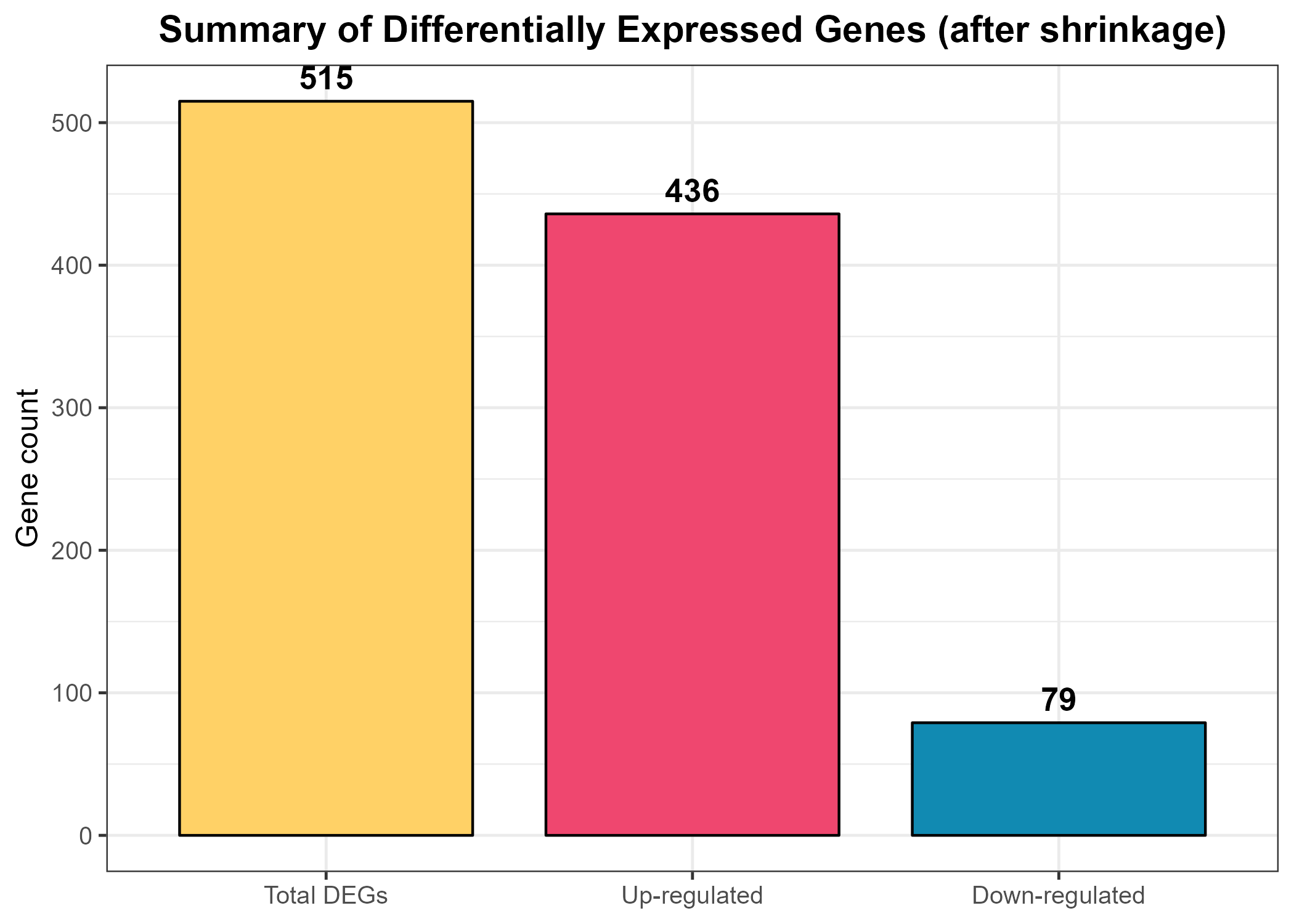

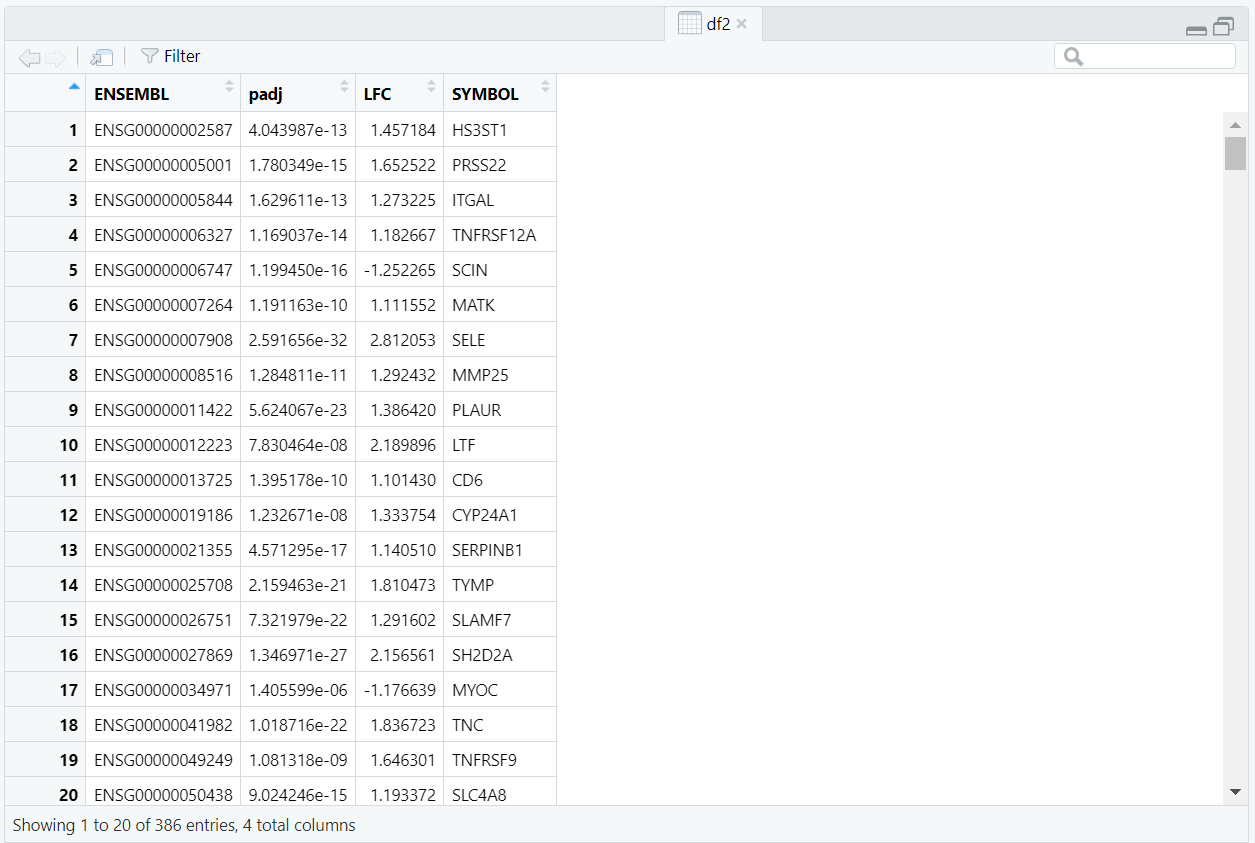

res_df, the processed results data frame created earlier. Using sum() in combination with logical conditions, it computes: deg_total – the total number of significant DEGs (res_df$sig != "NS").deg_up – the number of up-regulated genes (res_df$sig == "Up").deg_down – the number of down-regulated genes (res_df$sig == "Down").cat() command then prints this summary directly to the console, providing a quick textual check of the number of DEGs before moving to graphical representation.deg_df that stores the directions (“Up-regulated” and “Down-regulated”) along with their respective counts. The ggplot2 function geom_col() is used to generate vertical bars whose heights correspond to the number of genes in each category. The scale_fill_manual() line assigns fixed colours to the two groups—pink (#EF476F) for up-regulated and blue (#118AB2) for down-regulated genes—ensuring visual consistency across all figures.

theme_bw() function applies a clean white background for clarity, and the theme() call centers the title while adjusting font sizes for readability. The resulting plot, titled “Significant DEGs (after shrinkage)”, provides a quick visual impression of the dataset’s directionality—whether up-regulation dominates, down-regulation dominates, or the balance is roughly equal. Finally, ggsave() exports the figure as a high-resolution PNG file (Figure_8.1_DEG_Summary_Bar.png) to the designated out_dir folder for documentation and reporting.Code Block 8.1. Overview & Summary of DEG Results

# ==================================================================

# 8.1 Overview & Summary of DEG Results

# ==================================================================

# ============= Counts & Console Summary =============

deg_total <- sum(res_df$sig != "NS")

deg_up <- sum(res_df$sig == "Up")

deg_down <- sum(res_df$sig == "Down")

cat("DEG summary (shrinkage applied):\n",

" Total significant:", deg_total, "\n",

" Up-regulated :", deg_up, "\n",

" Down-regulated :", deg_down, "\n")

# ============= Bar Plot Summary (Figure 8.1) =============

deg_df <- data.frame(

Category = factor(c("Total DEGs", "Up-regulated", "Down-regulated"),

levels = c("Total DEGs", "Up-regulated", "Down-regulated")),

Count = c(deg_total, deg_up, deg_down)

)

p_deg_bar <- ggplot(deg_df, aes(Category, Count, fill = Category)) +

geom_col(color = "black", width = 0.8) +

geom_text(aes(label = Count),

vjust = -0.5, size = 4.5, fontface = "bold") + # display numbers above bars

scale_fill_manual(values = c(

"Total DEGs" = "#FFD166", # golden yellow

"Up-regulated" = "#EF476F", # pink/red

"Down-regulated" = "#118AB2" # blue

)) +

theme_bw(base_size = 12) +

labs(

title = "Summary of Differentially Expressed Genes (after shrinkage)",

x = NULL,

y = "Gene count"

) +

theme(

plot.title = element_text(hjust = 0.5, face = "bold"),

legend.position = "none" # hide redundant legend

)

ggsave(file.path(out_dir, "Figure_8.1_DEG_Summary_Bar.png"),

p_deg_bar, width = 7, height = 5, dpi = 300)

Code Block 8.2. DEG-Focused Diagnostic Visualizations (QC & Data Patterns)

# ==================================================================

# 8.2. DEG-Focused Diagnostic Visualizations (QC & Data Patterns)

# (PCA and sample–sample distances using top DEGs)

# ==================================================================

# ============= Select Top DEGs by padj =============

top_k <- 500

top_deg_ids <- as.data.frame(res_shrunk) %>%

mutate(gene = rownames(res_shrunk)) %>%

filter(!is.na(padj)) %>%

arrange(padj) %>%

slice(1:min(top_k, n())) %>%

pull(gene)

# VST expression restricted to top DEGs

vst_top <- vsd_mat[top_deg_ids, , drop = FALSE]

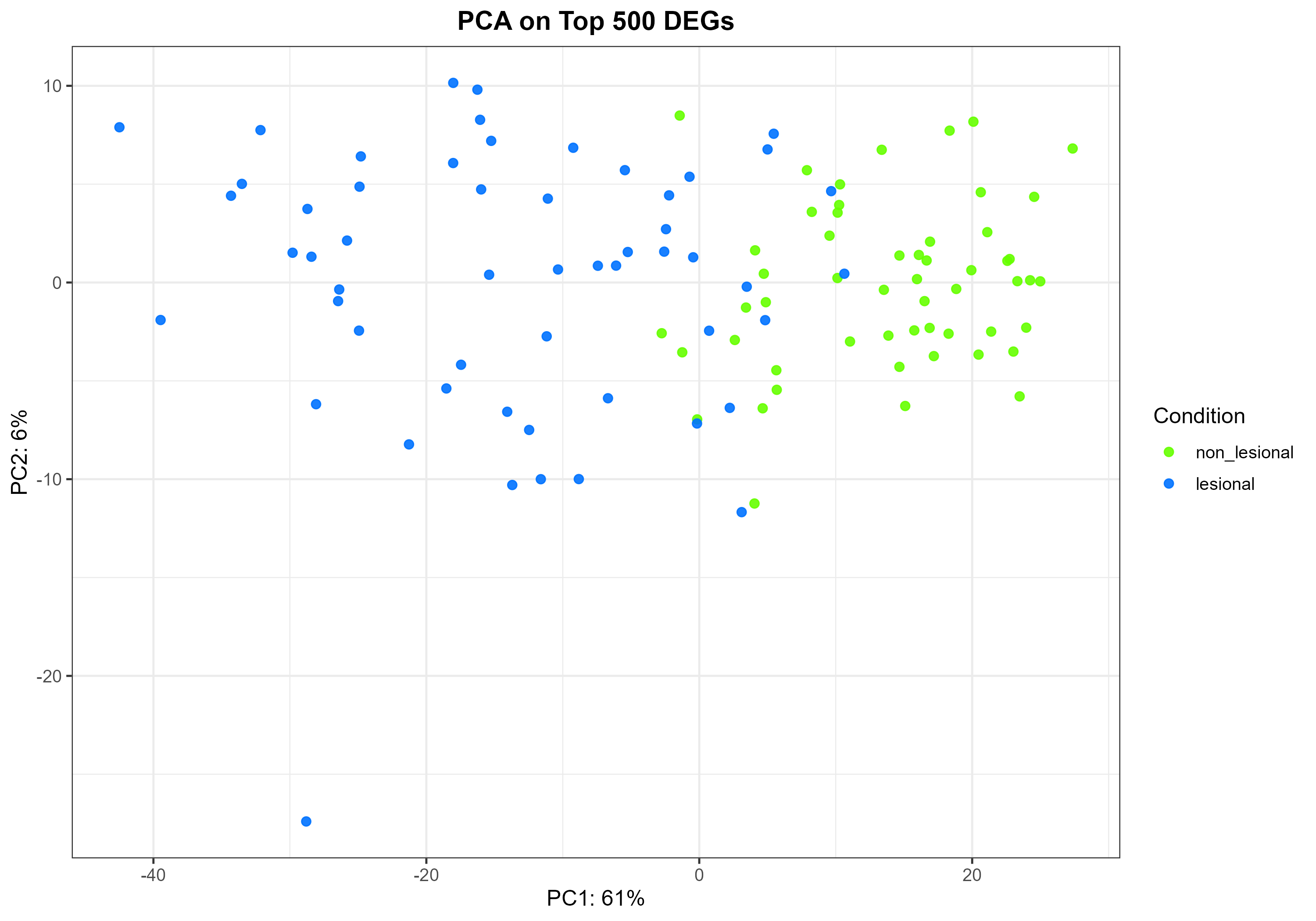

Code Block 8.2.1. PCA Plot on Top DEGs

# ============= 8.2.1 PCA on Top DEGs =============

pca <- prcomp(t(vst_top), scale. = TRUE)

var_expl <- round(100 * (pca$sdev^2 / sum(pca$sdev^2)))[1:2]

pca_df2 <- data.frame(

PC1 = pca$x[, 1],

PC2 = pca$x[, 2],

Sample = colnames(vst_top),

condition = colData(vsd)$condition[match(colnames(vst_top), rownames(colData(vsd)))]

)

p_pca_deg <- ggplot(pca_df2, aes(PC1, PC2, colour = condition)) +

geom_point(size = 2, alpha = 0.9) +

scale_colour_manual(values = cond_cols) +

xlab(paste0("PC1: ", var_expl[1], "%")) +

ylab(paste0("PC2: ", var_expl[2], "%")) +

theme_bw(base_size = 12) +

labs(title = paste0("PCA on Top ", length(top_deg_ids), " DEGs"),

colour = "Condition") +

theme(plot.title = element_text(hjust = 0.5, face = "bold"))

ggsave(file.path(out_dir, "Figure_8.2.1_PCA_TopDEGs.png"),

p_pca_deg, width = 10, height = 7, dpi = 300)